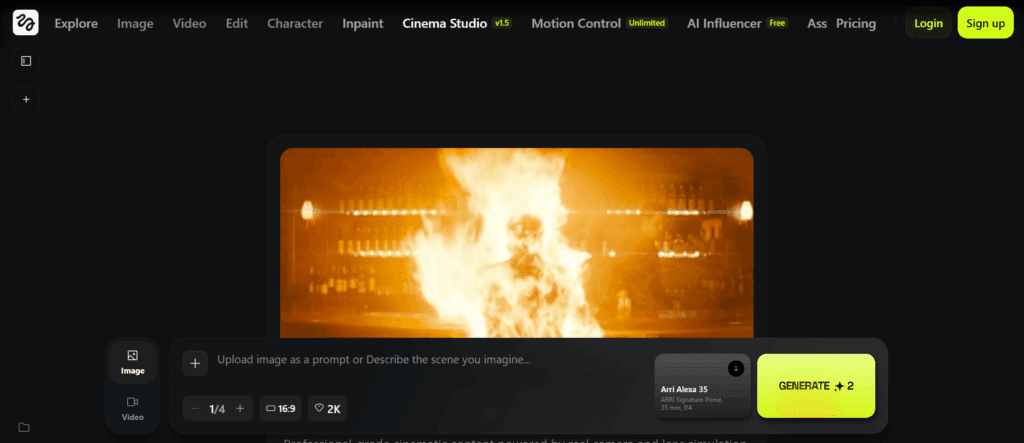

ComfyUI Wan2.2: 100% Free & Unlimited AI Video Generator

AI video tools are improving fast, but many are still locked behind credits, usage limits, or subscriptions.

ComfyUI Wan2.2 is different.

It is free, local, and unlimited. You control the workflow, the models, and the output.

When paired with Z-Image, it becomes a powerful system for high-quality AI video creation.

This guide explains what Wan2.2 is, how it works, and how to get the most out of it, in a short and readable format.

What Is ComfyUI Wan2.2?

ComfyUI is a node-based interface for generative AI.

Instead of clicking through menus, you connect nodes into a workflow graph. Each node does one job, such as loading a model, encoding a prompt, or sampling.
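Under the hood, ComfyUI stores a workflow as a graph. In the API-format JSON you get when you export a workflow for the API, each node has an id, a `class_type`, and `inputs`, and a connection is written as `[source_node_id, output_index]`. The sketch below uses a simplified text-to-image graph built from core node names (it deliberately omits required inputs such as seed, steps, and the negative prompt) and works out the order in which the nodes have to run.

```python
from graphlib import TopologicalSorter

# Simplified, illustrative workflow in ComfyUI's API format.
# A link is [source_node_id, output_index]; anything else is a plain value.
# Real exported workflows carry more inputs (seed, steps, cfg, negative, ...).
WORKFLOW = {
    "1": {"class_type": "CheckpointLoaderSimple", "inputs": {"ckpt_name": "model.safetensors"}},
    "2": {"class_type": "CLIPTextEncode", "inputs": {"text": "a red fox in snow", "clip": ["1", 1]}},
    "3": {"class_type": "EmptyLatentImage", "inputs": {"width": 512, "height": 512, "batch_size": 1}},
    "4": {"class_type": "KSampler", "inputs": {"model": ["1", 0], "positive": ["2", 0], "latent_image": ["3", 0]}},
    "5": {"class_type": "VAEDecode", "inputs": {"samples": ["4", 0], "vae": ["1", 2]}},
    "6": {"class_type": "SaveImage", "inputs": {"images": ["5", 0]}},
}


def is_link(value) -> bool:
    """A link is a two-item list: [node_id (str), output_index (int)]."""
    return (
        isinstance(value, list)
        and len(value) == 2
        and isinstance(value[0], str)
        and isinstance(value[1], int)
    )


def execution_order(workflow: dict) -> list[str]:
    """Return node ids so that every node comes after the nodes it reads from."""
    deps = {
        node_id: {v[0] for v in node["inputs"].values() if is_link(v)}
        for node_id, node in workflow.items()
    }
    return list(TopologicalSorter(deps).static_order())


for node_id in execution_order(WORKFLOW):
    print(node_id, WORKFLOW[node_id]["class_type"])
```

Each node only sees the outputs wired into it, which is why a single node can be swapped (for example a different model loader) without touching the rest of the graph.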

Wan2.2 is a video diffusion model that runs inside ComfyUI.

It supports text-to-video and image-to-video generation.

Because everything runs locally, there are:

- No daily limits

- No watermarks

- No locked features

Your GPU is the only limit.
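Running locally also means you can queue jobs from a script. ComfyUI's local server accepts an API-format workflow as JSON posted to its `/prompt` endpoint and replies with a `prompt_id`. This is a minimal sketch, assuming the default address `http://127.0.0.1:8188` and a workflow you exported in API format; it is not a full client (no progress tracking or error handling).

```python
import json
import urllib.request
import uuid
from typing import Optional

COMFY_URL = "http://127.0.0.1:8188"  # default local address; change it if you run ComfyUI elsewhere


def build_prompt_payload(workflow: dict, client_id: str) -> bytes:
    """Encode an API-format workflow as the JSON body the /prompt endpoint expects."""
    return json.dumps({"prompt": workflow, "client_id": client_id}).encode("utf-8")


def queue_workflow(workflow: dict, client_id: Optional[str] = None) -> str:
    """POST the workflow to the local server and return the prompt_id it assigns."""
    client_id = client_id or str(uuid.uuid4())
    req = urllib.request.Request(
        f"{COMFY_URL}/prompt",
        data=build_prompt_payload(workflow, client_id),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["prompt_id"]
```

For example, load an exported file with `json.load` and call `queue_workflow(workflow)`; each call adds one job to your own GPU's queue, with no credits involved.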

Wan2.2 Essentials (What You Actually Need)

Wan2.2 works with a few core components:

- The diffusion model (the 5B TI2V model or the larger 14B variants)

- A text encoder (umT5-XXL)

- A VAE for decoding frames

- Video latent and scheduler nodes

ComfyUI now ships official Wan2.2 templates, so setup is easier than before.
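Before opening a template, it can help to confirm the files are where ComfyUI looks for them. This is a sketch that assumes the standard `ComfyUI/models/` subfolders and the file names used for the 5B model's repackaged files; treat those names as assumptions and match them to whatever your template reports as missing.

```python
from pathlib import Path

# Assumed file names for the 5B text/image-to-video setup.
# Replace them with the exact files your ComfyUI Wan2.2 template asks for.
REQUIRED_FILES = {
    "diffusion_models": "wan2.2_ti2v_5B_fp16.safetensors",  # diffusion model
    "text_encoders": "umt5_xxl_fp8_e4m3fn_scaled.safetensors",  # text encoder
    "vae": "wan2.2_vae.safetensors",  # VAE for decoding frames
}


def missing_models(comfy_root: str) -> list[str]:
    """Return 'subfolder/filename' entries that are not present under ComfyUI/models."""
    models_dir = Path(comfy_root) / "models"
    return [
        f"{sub}/{name}"
        for sub, name in REQUIRED_FILES.items()
        if not (models_dir / sub / name).is_file()
    ]
```

Usage: `print(missing_models("/path/to/ComfyUI") or "All files found")`, with the path pointing at your own ComfyUI folder.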

Is Wan2.2 Really 100% Free?

Yes, but with context.

Wan2.2 is open source under the Apache 2.0 license, so you can use it for personal or commercial work.

There are no usage caps.

The only costs are:

- Your hardware

- Optional cloud GPU time

This is why many creators are moving from paid AI video tools to ComfyUI-based workflows.
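Because there is no per-clip fee, cost comes down to simple arithmetic: electricity on your own GPU, or rental time on a cloud GPU. The functions below show both formulas as a sketch; the wattage, generation time, and prices are inputs you supply, and the figures in the usage note are placeholders, not measurements.

```python
def local_energy_cost(gpu_watts: float, seconds_per_clip: float, price_per_kwh: float) -> float:
    """Electricity for one clip on your own GPU: watts x seconds -> kWh -> money."""
    kwh = gpu_watts * seconds_per_clip / 3_600_000  # 1 kWh = 3.6 million watt-seconds
    return kwh * price_per_kwh


def cloud_cost(hourly_rate: float, seconds_per_clip: float) -> float:
    """Rented-GPU cost of one clip: you pay for the seconds the GPU is busy."""
    return hourly_rate * seconds_per_clip / 3600
```

With placeholder values of a 450 W card, 180 seconds per clip, and 0.30 per kWh, `local_energy_cost(450, 180, 0.30)` works out to 0.00675 per clip.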

Wan2.2 Models, Precision, and Speed

Wan2.2 has several technical options that affect speed and quality.

BF16 vs FP8

This matters more than many people think.

BF16

- Better stability

- Cleaner motion

- Higher VRAM use

FP8

- Faster generation, mainly on GPUs with native FP8 support

- Lower VRAM use

- Slight quality trade-offs

If your GPU has enough VRAM, BF16 is the safer choice.

FP8 is useful on cards with less memory.
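A rough way to see the difference is to count bytes: BF16 stores each weight in 2 bytes and FP8 in 1. The estimate below is a sketch covering the diffusion model's weights only; the text encoder, VAE, and activations come on top, and ComfyUI may offload some of this to system RAM, so treat it as a ballpark rather than a hard requirement.

```python
BYTES_PER_WEIGHT = {"bf16": 2, "fp8": 1}  # 16-bit vs 8-bit storage per parameter


def weight_memory_gb(params_billions: float, precision: str) -> float:
    """Approximate size of the model weights alone, in GB (10^9 bytes)."""
    return params_billions * BYTES_PER_WEIGHT[precision]


for size in (5, 14):  # the 5B model and a 14B model file
    for precision in ("bf16", "fp8"):
        print(f"{size}B {precision}: ~{weight_memory_gb(size, precision):.0f} GB of weights")
```

That gives roughly 10 GB vs 5 GB for the 5B model and 28 GB vs 14 GB for a 14B model, which is why FP8 matters most on smaller cards.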

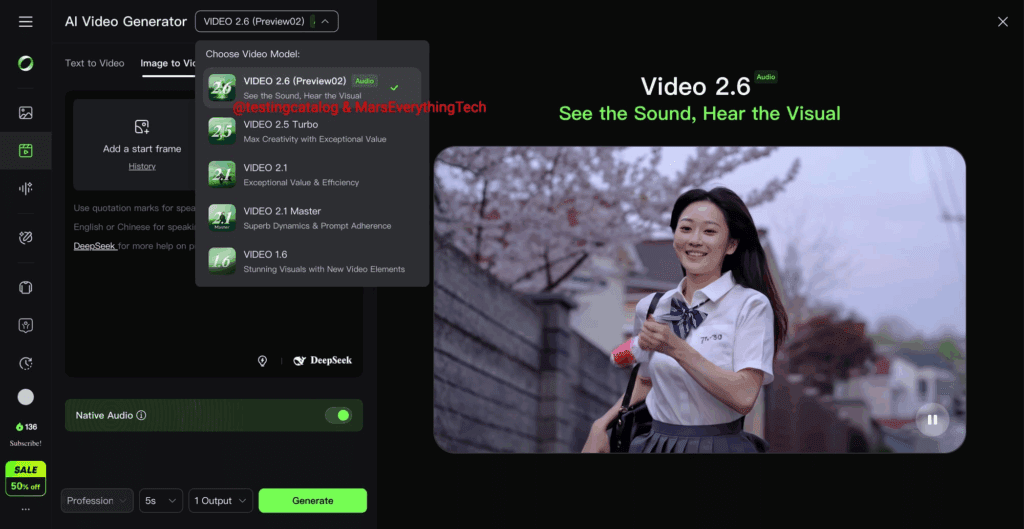

Turbo 2.0 vs Turbo 1.0

Turbo models focus on speed.

Compared with Turbo 1.0, Turbo 2.0 improves:

- Motion consistency

- Temporal stability

- Overall visual clarity

For most users, Turbo 2.0 is the better default, especially for short videos.

Text-to-Video and Image-to-Video

Wan2.2 supports two main workflows.

Text-to-Video

You write a prompt. The model generates the scene and its motion from scratch.

This is good for abstract or experimental scenes.

Image-to-Video

You start from a still image. Wan2.2 adds motion.

Because the first frame is already fixed, this usually produces more stable and cinematic results.

For marketing, storytelling, and short-form video, Image-to-Video is usually better.
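Whichever mode you choose, clip length is set in frames rather than seconds. This sketch assumes Wan-style latent video nodes expect a length of the form 4k + 1 (the video VAE compresses time by a factor of 4 and keeps the first frame on its own) and a frame rate you pick; 24 fps is only a default here, so use the rate your template's video output is set to.

```python
import math


def frames_for_duration(seconds: float, fps: int = 24) -> int:
    """Smallest frame count of the form 4k + 1 that covers the requested duration."""
    target = max(1, math.ceil(seconds * fps))
    k = (target + 2) // 4  # ceil((target - 1) / 4) using integer math
    return 4 * k + 1


def latent_frames(length: int) -> int:
    """Latent frames after 4x temporal compression (first frame kept on its own)."""
    return (length - 1) // 4 + 1
```

For a 5-second clip at 24 fps this returns 121 frames, which the model handles as 31 latent frames.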

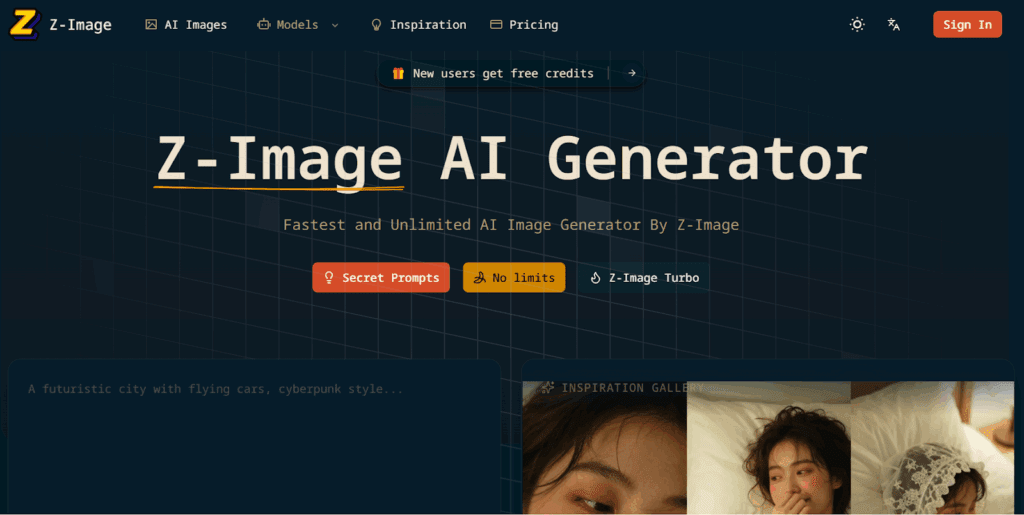

Z-Image: Why It Matters

Z-Image is a fast text-to-image model that runs inside ComfyUI.

It is not a video model. Its role is different.

Z-Image is best used to:

- Generate clean keyframes

- Lock composition and lighting

- Reduce visual drift in video

Many creators now use this flow:

Z-Image → Wan2.2 Image-to-Video

This approach saves time and improves consistency.
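The Z-Image to Wan2.2 handoff can also be scripted against the local server. A minimal sketch, assuming you have already run a Z-Image workflow, fetched its record from the server's `/history/{prompt_id}` endpoint, and placed the resulting keyframe in ComfyUI's `input` folder (for example by copying it or uploading it through `/upload/image`), since the core `LoadImage` node reads from there. It also assumes your Wan2.2 image-to-video workflow, exported in API format, uses `LoadImage` for its start frame.

```python
import copy


def first_output_image(history: dict, prompt_id: str) -> dict:
    """Pick the first image record from a /history/{prompt_id} response."""
    outputs = history[prompt_id]["outputs"]
    for node_output in outputs.values():
        images = node_output.get("images", [])
        if images:
            return images[0]  # e.g. {"filename": ..., "subfolder": ..., "type": "output"}
    raise ValueError("no images in this prompt's outputs")


def set_start_image(i2v_workflow: dict, image_name: str) -> dict:
    """Return a copy of the I2V workflow with every LoadImage node pointed at image_name."""
    patched = copy.deepcopy(i2v_workflow)
    for node in patched.values():
        if node["class_type"] == "LoadImage":
            node["inputs"]["image"] = image_name
    return patched
```

Keeping the original workflow untouched (the function returns a copy) makes it easy to animate several keyframes from one template.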

Z-Image Turbo 2.0 vs 1.0

Turbo 2.0 is a meaningful update.

It offers:

- Faster inference

- Better detail retention

- More reliable structure for animation

If you plan to animate images with Wan2.2, Turbo 2.0 is the better choice.

Training a Z-Image LoRA in ComfyUI

This is where the real advantage appears.

A Z-Image LoRA lets you train:

- A brand style

- A character

- A product's look

You do not need thousands of images; a few dozen clean examples are usually enough.

When LoRA Training Makes Sense

Train a Z-Image LoRA if you need:

- Consistent faces or products

- Reusable visual identity

- Better control before animation

How Z-Image LoRA Training Works (Short Version)

- Prepare 20–60 clean images

- Caption them clearly

- Use a LoRA training workflow in ComfyUI

- Train on the Z-Image base

- Apply the LoRA during image generation
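Most of the effort is in the first two steps. This sketch checks a dataset folder against the 20-60 image range above and assumes each caption sits next to its image as a same-name `.txt` file; that convention is common among LoRA trainers, but confirm what your ComfyUI training workflow expects.

```python
from pathlib import Path

IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".webp"}


def check_lora_dataset(folder: str, min_images: int = 20, max_images: int = 60) -> list[str]:
    """Return a list of problems; an empty list means the folder looks ready."""
    root = Path(folder)
    images = sorted(p for p in root.iterdir() if p.is_file() and p.suffix.lower() in IMAGE_EXTS)
    problems = []
    if not min_images <= len(images) <= max_images:
        problems.append(f"found {len(images)} images, expected {min_images}-{max_images}")
    for img in images:
        caption = img.with_suffix(".txt")  # assumed convention: photo.png -> photo.txt
        if not caption.is_file() or not caption.read_text(encoding="utf-8").strip():
            problems.append(f"missing or empty caption: {caption.name}")
    return problems
```

Running it before every training attempt catches the two most common dataset mistakes: too few images and images without captions.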

Once the LoRA is trained, you generate images with it.

Then you pass those images into Wan2.2 for animation.

This keeps videos consistent across scenes.
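In an API-format workflow, applying the LoRA means adding a loader node between the model and everything that uses it. The sketch below uses ComfyUI's core `LoraLoaderModelOnly` node and assumes numeric node ids, that the model loader's MODEL output is index 0 (as it is for `UNETLoader` and `CheckpointLoaderSimple`), and that the trained file sits in `ComfyUI/models/loras`; the file name in the usage note is hypothetical.

```python
import copy


def add_lora(workflow: dict, model_node: str, lora_name: str, strength: float = 1.0) -> dict:
    """Insert a LoraLoaderModelOnly node after model_node and reroute its MODEL output."""
    patched = copy.deepcopy(workflow)
    lora_id = str(max(int(k) for k in patched) + 1)  # assumes numeric node ids
    old_link = [model_node, 0]  # MODEL assumed to be output 0 of the loader
    # Point every consumer of the original MODEL output at the LoRA node instead.
    for node in patched.values():
        for key, value in node["inputs"].items():
            if value == old_link:
                node["inputs"][key] = [lora_id, 0]
    # Added after the rewiring so the LoRA node itself still reads the base model.
    patched[lora_id] = {
        "class_type": "LoraLoaderModelOnly",
        "inputs": {"model": old_link, "lora_name": lora_name, "strength_model": strength},
    }
    return patched
```

For example, `add_lora(workflow, "1", "brand_style.safetensors", 0.8)` where node "1" is your Z-Image model loader. Reusing the same LoRA and strength for every keyframe is what carries the look from scene to scene.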

Z-Image vs Nano Banana Pro

Both tools generate images, but they serve different goals.

Z-Image

- Very fast

- Strong realism

- Supports LoRA training

- Works well with Wan2.2 pipelines

Nano Banana Pro

- Good out-of-the-box visuals

- Limited customization

- Not ideal for video prep

If your goal is AI video, Z-Image is the better option.

It fits naturally into ComfyUI workflows.

Real Use Cases for Creators

Wan2.2 and Z-Image work well for:

- Short-form social videos

- Product visuals with motion

- AI b-roll

- Music and visual loops

For platforms like VidAU, this setup helps create repeatable, scalable video content without usage limits.

Conclusion

ComfyUI Wan2.2 proves that powerful AI video tools do not need subscriptions.

When combined with Z-Image, it becomes a flexible and future-proof system.

If you want control, scale, and freedom, this workflow is hard to beat.

FAQs

1. Is ComfyUI Wan2.2 really unlimited?

Yes. There are no built-in limits. You can generate as much as your hardware allows. This is why it is often called “unlimited.”

2. Can I use Wan2.2 videos commercially?

Yes. Wan2.2 is released under the Apache 2.0 license, and you can use the outputs in commercial projects. Always check local laws and platform rules.

3. Do I need Z-Image to use Wan2.2?

No. Wan2.2 works on its own. Z-Image simply improves image-to-video quality and consistency.

4. Is training a Z-Image LoRA difficult?

Not anymore. With modern ComfyUI workflows, it is manageable. The main work is preparing clean images and captions.

5. Which GPU is recommended?

An RTX 4090 (24 GB of VRAM) is ideal. Smaller GPUs can still work using FP8 weights and Turbo models.