A/B vs Bandits vs Multivariate: Choose the Right Method

Choosing the right testing method isn’t only a stats exercise; it’s a strategy call that blends business goals, traffic realities, creative throughput, and risk tolerance. In 2025, mature teams mix A/B testing, A/B/n, Multivariate (MVT), and Multi-Armed Bandits, and complement them with modern analysis tools like CUPED (variance reduction) and sequential testing (peek-safe monitoring). A practical constraint often gets overlooked: you can’t test what you don’t have. That’s why this guide weaves in VidAU’s Remix Video Ads feature, so your creative bench keeps up with your experimentation ambitions. Below, we lay a clear foundation, then map each method to when and how to use it, complete with a side-by-side comparison and a detailed decision tree.

What Is A/B Testing?

A/B testing (also called split testing) is a fair race between two versions: A (current) and B (new). We randomly show people one version and track one main metric (for example, purchases). If B beats A by a margin that is unlikely to be random noise, we ship B.

Planning the essentials

Write a clear hypothesis (“Changing the first 3 seconds of the video will increase purchases because it grabs attention”). Pick one main metric and a few guardrails (things you don’t want to harm, like bounce rate or app crashes). Decide how long the test should run based on your traffic and the smallest improvement that matters to you.

Power, effect size, and run time (in plain words)

- Effect size (MDE, the minimum detectable effect): the smallest win worth caring about (e.g., +3% purchases).

- Power: your chance to spot that win if it’s real (aim for 80–90%).

- Alpha: your tolerance for false alarms (often 5%).

Bigger traffic or a bigger effect means shorter tests. Less traffic or tiny effects mean longer tests.
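To make that trade-off concrete, here is a minimal sketch of the standard two-proportion sample-size approximation. The function name `sample_size_per_arm` and the example numbers (a 5% baseline purchase rate, a +10% relative MDE) are illustrative assumptions, not figures from any particular platform.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_arm(baseline_rate, relative_mde, alpha=0.05, power=0.80):
    """Approximate users needed per version for a two-sided test of two rates."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)          # rate we hope B reaches
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)    # false-alarm tolerance
    z_power = NormalDist().inv_cdf(power)            # chance to spot a real win
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)

# Hypothetical inputs: 5% baseline, smallest win worth caring about is +10% relative.
n_small_effect = sample_size_per_arm(0.05, 0.10)
n_big_effect = sample_size_per_arm(0.05, 0.20)
print(n_small_effect, n_big_effect)
```

Doubling the MDE cuts the required sample to roughly a quarter, which is why tiny effects mean long tests.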

A/B vs A/B/n vs Multivariate vs Bandits

You have four common choices. The right one depends on your goal, your time, and your traffic. Here’s the simple picture first, then we explain how to choose.

Comparison Table: What They Are, How They’re Used, When They Shine

| Dimension | A/B (and A/B/n) | Multivariate (MVT) | Multi-Armed Bandits |
| --- | --- | --- | --- |
| What it is | Randomised experiment comparing control vs one (A/B) or several challengers (A/B/n). | Experiment testing combinations of multiple elements (e.g., headline × image × CTA) to detect main effects & interactions. | Adaptive experiment that shifts traffic toward better variants while continuing to explore. |
| Plain-English meaning | “Which complete version wins?” Best for decision-grade learning. | “Which combo of parts works best, and do parts interact?” | “Earn while you learn”: tilt spend to winners during the run. |
| How it’s used | Pre-specify primary metric/MDE; compute N; run to completion or use sequential rules. | Choose factors & levels; consider fractional factorials if traffic is limited; analyse main + interaction effects. | Choose reward (e.g., conversions/1000 impressions); algorithm reallocates traffic as evidence accrues. |
| Best for | Rollouts, pricing changes, and product UX where clean inference matters most. | Element-interaction learning and creative systems work. | Limited-time promos, finite budgets, and short horizons where in-flight ROI matters. |
| Traffic needs | Moderate (A/B is fastest to power); A/B/n slows as you add arms. | High (cells grow combinatorially unless fractional). | Moderate (optimises in-flight; post-hoc inference needs care). |
| Pros | Simple to reason about; strongest internal validity; audit-ready estimate with CI. | Reveals interactions; compresses multiple A/Bs into one structured study. | Improves outcomes during the experiment; graceful explore-exploit balance. |
| Cons | A/B/n dilutes traffic across arms; peeking temptation. | Can be slow/underpowered if traffic is thin; design & analysis are more involved. | Adaptive allocation can bias naive effect estimates; less “textbook-clean” inference. |
| Unique capability | Decision-grade lift for long-term choices. | Combinatorial insight (main + interaction effects) in one run. | Exploit while exploring, harvest wins early. |
| When to avoid | Ultra-short windows where you must harvest wins now (start with Bandit). | Low traffic or weak factor definitions (serialise A/Bs instead). | High-stakes or regulated decisions; confirm with fixed-horizon A/B. |

A More Detailed Decision Tree

This is a step-by-step way to pick a method without overthinking it.

Step 1: What’s your main goal: Learn or Earn?

- Learn: You want a trusted number to guide a big decision (new pricing, new flow). Pick A/B (or A/B/n if you have a few strong ideas). If time is tight, still use A/B but consider tools that make it faster (explained below).

- Earn: You’re on a short promo or tight budget and need results now. Start with a bandit so more traffic (and spend) goes to the early winner. Then run a quick A/B to confirm before you roll out everywhere.
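For the “Earn” path, one common bandit algorithm is Thompson sampling. The sketch below is a toy simulation, not any ad platform’s actual allocator: the conversion rates, the seed, and the function names are all made-up assumptions chosen only to show how traffic drifts toward the stronger variant.

```python
import random

def thompson_pick(successes, failures, rng):
    """Draw once from each arm's Beta posterior and pick the highest draw."""
    draws = [rng.betavariate(s + 1, f + 1) for s, f in zip(successes, failures)]
    return max(range(len(draws)), key=draws.__getitem__)

def simulate_bandit(true_rates, rounds=5000, seed=7):
    """Return how many impressions each arm received (simulated data only)."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    for _ in range(rounds):
        arm = thompson_pick(successes, failures, rng)
        if rng.random() < true_rates[arm]:   # simulated conversion
            successes[arm] += 1
        else:
            failures[arm] += 1
    return [s + f for s, f in zip(successes, failures)]

# Hypothetical true conversion rates for three ad variants.
pulls = simulate_bandit([0.02, 0.05, 0.15])
print(pulls)
```

Because allocation adapts to early results, the per-arm conversion rates from a run like this are not a clean lift estimate, which is why the follow-up A/B matters.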

Step 2: Do you need to know how parts work together?

If yes, and you have the traffic, run Multivariate (MVT). If your traffic is small, don’t force it; run a few A/B tests in a row instead.
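A quick way to sanity-check “do we have the traffic?” is to count the cells a full-factorial MVT would create. The factor names, levels, and daily traffic figure below are hypothetical; only the arithmetic is the point.

```python
from itertools import product

# Hypothetical factors and levels for a video ad.
factors = {
    "headline": ["benefit", "urgency"],
    "image": ["product", "lifestyle", "ugc"],
    "cta": ["Shop now", "Learn more"],
}

# Every combination of levels is one cell in a full-factorial design.
cells = list(product(*factors.values()))

daily_users = 6000  # assumed eligible traffic per day
users_per_cell_per_day = daily_users // len(cells)

print(len(cells), users_per_cell_per_day)
```

Adding one more three-level factor would triple the cell count and cut each cell’s daily traffic to a third, which is when serial A/B tests start to look better.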

Step 3: Is traffic small, or will people peek at results?

If traffic is small, keep it simple (A/B over A/B/n). If leaders will peek, don’t wing it: use a sequential plan (pre-agreed checkpoints) so peeking doesn’t break your stats.

Planning Your Experiment

Before you launch, write a one-page plan. It keeps everyone honest and saves time later.

Hypothesis, metric, and scope

State who you’re changing things for, what you’re changing, and why it should help. Pick one main metric and a few guardrails. Say who is in (countries, devices) and who is out (bots, staff).

Size and timing

Use a calculator (or your platform) to estimate how many people you need per version and how many days that will take with your traffic. If it’s too long, either (1) accept a bigger win as your goal, (2) test fewer versions, or (3) use the CUPED trick below to reduce noise.
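Turning “people needed” into “days” is simple arithmetic. The sketch below assumes you already have a per-version sample size from a calculator; the helper name `days_to_finish`, the one-week floor, and the traffic numbers are illustrative assumptions.

```python
from math import ceil

def days_to_finish(users_per_version, versions, eligible_users_per_day, min_days=7):
    """Estimate run time, never shorter than one full week."""
    total_users = users_per_version * versions
    days = ceil(total_users / eligible_users_per_day)
    return max(days, min_days)

# Hypothetical: 31,000 users per version, 4,000 eligible users per day.
ab_days = days_to_finish(31000, versions=2, eligible_users_per_day=4000)
abn_days = days_to_finish(31000, versions=4, eligible_users_per_day=4000)
print(ab_days, abn_days)
```

With these inputs, going from two versions to four roughly doubles the run time, which is the cost of option (2) in reverse.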

Stopping rules and notes

Write when you’ll stop (at the planned sample or at set checkpoints). Promise not to change rules mid-test. Save notes, screenshots, and the final call; your future self will thank you.

Running Tests the Right Way

The mechanics matter. Small mistakes can ruin clean results.

Randomisation and logging

Keep people in one bucket for the whole test. Log who saw what and when. These logs help you spot problems and explain results.
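One common way to keep people in one bucket is to derive the assignment from a hash of the user ID rather than storing a random draw. This is a minimal sketch; the function name `assign_variant` and the experiment key are assumptions, and your platform may handle this for you.

```python
import hashlib

def assign_variant(user_id, experiment, variants=("A", "B")):
    """Deterministically map a user to a variant for a given experiment."""
    # Salting with the experiment name keeps buckets independent across tests.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) % len(variants)
    return variants[bucket]

# The same user always lands in the same bucket for the same experiment.
first = assign_variant("user-123", "hook-test-v1")
second = assign_variant("user-123", "hook-test-v1")
print(first, second)
```

Log the returned variant alongside the user ID and timestamp at exposure time so the “who saw what and when” record matches the assignment.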

QA, performance, and safe rollouts

Check that events fire, pages look right, and load times stay healthy. For risky changes, start with a small canary (5–10%), watch your guardrails, then ramp up.

Reporting

Share the effect size (e.g., “+3.2% purchases, 95% CI +1.1% to +5.4%”), not just a “p-value.” Say what you’ll do next and why.
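As a rough illustration of where such an interval comes from, here is a sketch of a simple (Wald) confidence interval for the absolute difference in conversion rate. The counts are invented, and many platforms use more refined methods, so treat this as a back-of-envelope check rather than the definitive calculation.

```python
from math import sqrt
from statistics import NormalDist

def diff_with_ci(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Absolute difference in conversion rate (B minus A) with a Wald interval."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return diff, diff - z * se, diff + z * se

# Hypothetical counts: 1,000 of 20,000 purchases on A, 1,100 of 20,000 on B.
diff, low, high = diff_with_ci(1000, 20000, 1100, 20000)
print(f"{diff:+.2%} (95% CI {low:+.2%} to {high:+.2%})")
```

An interval that excludes zero supports the call; how far its lower bound sits from zero tells readers how much to trust the size of the win.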

Modern Stats That Help You Go Faster

You can move quickly and stay honest by using two simple ideas.

CUPED — less noise, shorter tests

CUPED uses people’s past behaviour (before the test) to reduce random noise. If past behaviour predicts future behaviour, you can adjust for it and need fewer people to see the same effect. Translation: tests finish sooner without cheating.
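The core CUPED adjustment is short enough to sketch. The version below assumes one pre-test value per user (for example, spend in the weeks before the test) and estimates the adjustment coefficient on all users pooled across versions; the function name and the synthetic data are illustrative only.

```python
import random
from statistics import mean, variance

def cuped_adjust(metric, pre_metric):
    """Subtract the part of each user's metric explained by pre-test behaviour."""
    m_y, m_x = mean(metric), mean(pre_metric)
    cov = sum((x - m_x) * (y - m_y) for x, y in zip(pre_metric, metric))
    var_x = sum((x - m_x) ** 2 for x in pre_metric)
    theta = cov / var_x  # how strongly past behaviour predicts the metric
    return [y - theta * (x - m_x) for x, y in zip(pre_metric, metric)]

# Synthetic users whose in-test spend tracks their pre-test spend.
rng = random.Random(3)
pre = [rng.gauss(10, 3) for _ in range(2000)]
during = [0.8 * x + rng.gauss(0, 1) for x in pre]

adjusted = cuped_adjust(during, pre)
print(variance(during), variance(adjusted))
```

The adjusted metric keeps the same average but has less spread, so the same test reaches a given precision with fewer users. Compare versions on the adjusted values exactly as you would on the raw ones.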

Sequential testing — safe peeking

If your team needs to look mid-test, plan checkpoints with clear “stop for win” or “stop for harm” rules. That way, you can stop early without increasing false alarms.
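One deliberately simple way to set checkpoint rules is to split the false-alarm budget evenly across the planned looks (a Bonferroni-style correction). This is a conservative stand-in for the group-sequential boundaries a stats platform would typically use, and the function name and example z-scores are assumptions for illustration.

```python
from statistics import NormalDist

def checkpoint_decision(z_stat, planned_looks, alpha=0.05):
    """Apply the same stricter threshold at every pre-agreed checkpoint."""
    # Each look gets alpha / planned_looks, split across both tails.
    threshold = NormalDist().inv_cdf(1 - alpha / (2 * planned_looks))
    if z_stat >= threshold:
        return "stop for win"
    if z_stat <= -threshold:
        return "stop for harm"
    return "continue"

# With 5 planned looks, a z-score of 2.1 is not enough to stop early.
print(checkpoint_decision(2.1, planned_looks=5))
print(checkpoint_decision(2.8, planned_looks=5))
```

The price of safe peeking is a higher bar at each look, so decide the number of checkpoints before launch and keep it small.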

Quality Issues and Pitfalls

A few common traps cause bad reads. Here’s how to avoid them.

SRM — your traffic split looks wrong

If you planned 50/50 but you’re seeing 57/43 (with lots of users), pause. That’s a sample ratio mismatch (SRM), and it means something’s off (filtering after assignment, tracking drops, bots). Fix first; don’t trust the numbers until you do.
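Whether a split “looks wrong” can be checked with a chi-square goodness-of-fit test on the user counts. The sketch below handles the two-version case using only the standard library; the function name and the counts are illustrative assumptions.

```python
from math import erfc, sqrt

def srm_p_value(count_a, count_b, expected_share_a=0.5):
    """Chi-square test (1 degree of freedom) of observed vs planned split."""
    total = count_a + count_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((count_a - expected_a) ** 2 / expected_a
            + (count_b - expected_b) ** 2 / expected_b)
    # For 1 degree of freedom the upper-tail probability is erfc(sqrt(chi2 / 2)).
    return erfc(sqrt(chi2 / 2))

# Hypothetical counts: a 57/43 split and a 50.2/49.8 split on 10,000 users.
suspicious = srm_p_value(5700, 4300)
healthy = srm_p_value(5020, 4980)
print(suspicious, healthy)
```

Teams often use a strict cut-off here (for example, p below 0.001) because an SRM alert should mean “stop and debug”, not “maybe”.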

Peeking without a plan

Looking early in a normal A/B test raises false wins. If peeking is likely, switch to a sequential plan. Otherwise, wait until the planned end.
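A small simulation shows how much unplanned peeking can inflate false wins. The sketch below runs A/A tests (no real difference) on simulated data and compares “stop the first time it looks significant” against “check once at the end”; every number in it is an assumption chosen for illustration.

```python
import random
from math import sqrt

def false_win_rates(simulations=2000, looks=10, users_per_look=500, seed=11):
    """Return (false-win rate with peeking, false-win rate at the planned end)."""
    rng = random.Random(seed)
    z_crit = 1.96  # the usual 5% two-sided threshold
    peeking_hits = final_hits = 0
    for _ in range(simulations):
        diff_sum, n, hit_any = 0.0, 0, False
        for _ in range(looks):
            # Batch sum of (A minus B) when both versions are truly identical.
            diff_sum += rng.gauss(0, sqrt(2 * users_per_look))
            n += users_per_look
            z = diff_sum / sqrt(2 * n)
            hit_any = hit_any or abs(z) > z_crit
        peeking_hits += hit_any
        final_hits += abs(z) > z_crit
    return peeking_hits / simulations, final_hits / simulations

peek_rate, final_rate = false_win_rates()
print(peek_rate, final_rate)
```

The end-of-test rate should sit near the 5% you signed up for, while the peeking rate comes out well above it.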

Novelty and seasonality

New designs can spike then fade. Weekdays act differently from weekends. Try to run through at least one full week and keep an eye on guardrails.

Where VidAU Fits (Remix Video Ads for Faster, Better Ad Tests)

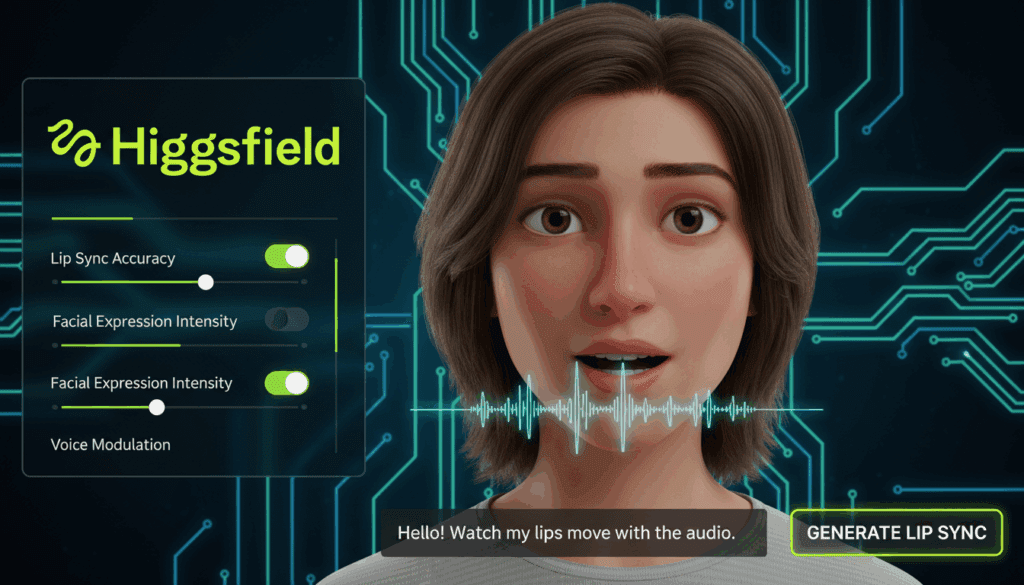

Ads move fast. Budgets are tight. You need strong variations now. VidAU – Remix Video Ads lets you create several distinct versions from one base video: different hooks, scene orders, captions/overlays, and lengths/aspect ratios (Reels, Shorts, TikTok, in-feed).

A simple four-step playbook

- Remix in VidAU: make 3–5 clearly different versions (not tiny tweaks).

- Run a bandit for week 1: the system sends more budget to winners while still learning.

- Confirm with A/B in week 2: winner vs control for a clean, trustworthy lift number.

- Scale and repeat: turn the winner into a recipe; keep one new challenger in play.

Conclusion

Method choice is about fit, not fashion. If you need decision-grade learning, run a well-powered A/B, tighten variance with CUPED, and, if necessary, use sequential rules to peek safely. If you must earn while you learn, start with a Bandit and then confirm with A/B.

Finally, remove the creative bottleneck so your method fits your moment. With VidAU – Remix Video Ads, you can generate a deeper bench of strong, on-brand variants quickly, run bandit → A/B loops during short promo windows, and keep one always-on challenger in play. That’s how you ship bolder ideas faster, maintain statistical integrity, and compound growth across quarters.

Frequently Asked Questions

1. What is A/B testing?

A fair test where people see either version A or B at random. We track one main goal (like purchases) and keep the version that truly does better.

2. When should I use A/B, Multivariate, or Bandits?

- A/B: When you need a trusted answer for a rollout.

- Multivariate: When you need to know which combination of parts works best (and you have enough traffic).

- Bandits: When you must improve results during the test (short promos), then confirm with A/B.

3. How long should a test run?

It depends on your traffic and the smallest win you care about. More traffic or bigger wins = shorter tests. Use a calculator to turn “people needed” into “days.”

4. Can I check results mid-test?

Yes, if you set checkpoints and rules before you start (that’s sequential testing). Random peeking in a normal A/B test can create false wins.

5. How does VidAU help?

VidAU – Remix Video Ads lets you quickly make strong, different video versions from what you already have. That means you can run better tests sooner: remix → bandit to improve results now → A/B to confirm → scale.