AI 3D Model Generator: Top Generators Compared (Sam D, Grok 4.1, GPT-Codex-max, Hunyuan3D, and Gemini 3)

You want 3D assets fast without losing control of quality. AI 3D model generator tools help you move from text or images to meshes and scenes in a few steps. This guide shows how Sam D, Grok 4.1, GPT-Codex-max, Hunyuan3D, and Gemini 3 fit into a real 3D pipeline, and how to choose the right stack for your work.

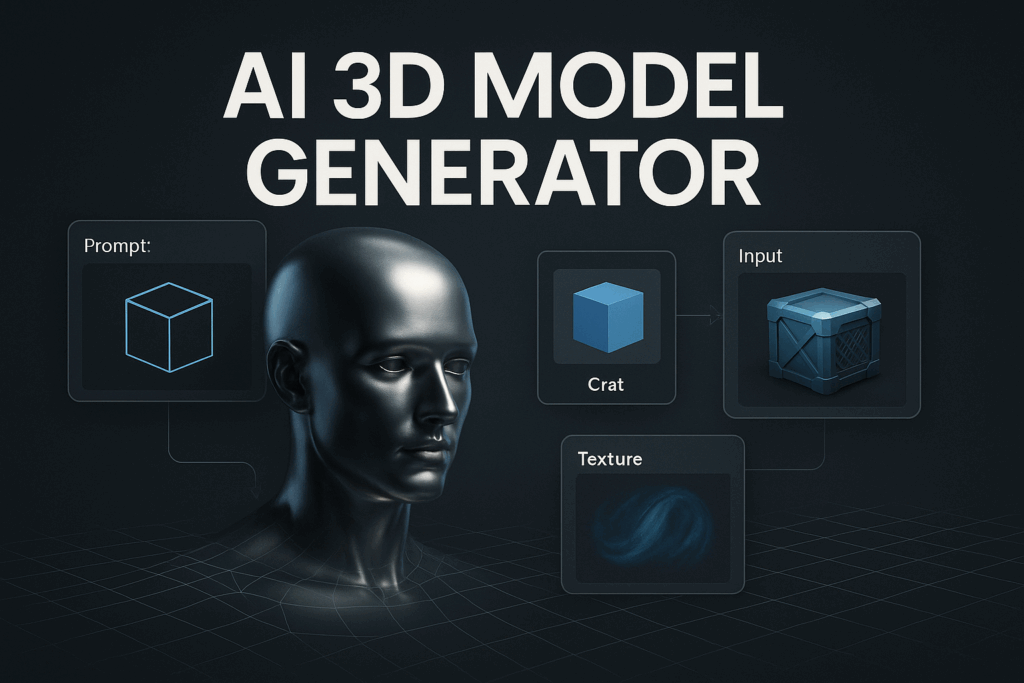

What is an AI 3D Model Generator and How Does it Work?

An AI 3D model generator turns text or images into a 3D mesh with textures that you export into tools like Blender, Unity, Unreal, or a web viewer.

At a basic level, you give the model an input. That input might be a prompt, a single photo, a set of photos, or simple shapes. The model predicts the 3D shape, then adds materials, colors, and details on the surface.

Most tools follow a simple flow.

• Input stage

You type a text prompt such as “low poly sci-fi crate in blue”.

Or you upload photos of a product, a toy, or another real object, ideally taken from several angles.

• Shape stage

The model predicts geometry in 3D space.

It builds a rough mesh that matches the outline and mass of your subject.

• Texture stage

The system then paints the surface.

It adds color, roughness, metal effects, logos, or other details.

• Export stage

You export the result as OBJ, FBX, GLB, USDZ, or a similar format.

You then bring the file into your engine or DCC tool for clean-up.
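The export stage often comes down to picking one format per destination. The sketch below encodes common rules of thumb as a lookup table. The mapping and the `pick_export_format` helper are assumptions for illustration, not rules set by any generator, so check what your own tools import.

```python
# Rule-of-thumb defaults per destination (an assumption, not a standard).
EXPORT_FORMATS = {
    "web": "glb",      # binary glTF, widely supported by web 3D viewers
    "ios_ar": "usdz",  # Apple AR Quick Look reads USDZ files
    "unity": "fbx",    # Unity imports FBX natively
    "unreal": "fbx",   # Unreal Engine imports FBX natively
    "generic": "obj",  # static geometry with limited material support
}


def pick_export_format(target: str) -> str:
    """Return a file extension for a destination, falling back to OBJ."""
    return EXPORT_FORMATS.get(target.lower(), "obj")


def export_name(asset: str, target: str) -> str:
    """Build an output file name such as 'crate_01.glb'."""
    return f"{asset}.{pick_export_format(target)}"
```

A table like this keeps export choices in one place, so a batch script can send the same asset to a web viewer and a game engine without hand-picking formats each time.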

AI sits inside a larger pipeline. You still plan concepts, set art direction, review meshes, tweak textures, set lights, and render final images or videos. The AI layer speeds up the middle steps.

Who Should Use AI 3D Model Generators Today?

AI 3D model generators suit teams and solo creators who need more output from limited 3D time and budget.

Different groups use these tools in different ways.

• Indie game developers

They need lots of props and background items.

AI helps them build crates, rocks, furniture, and filler items for levels.

They still refine hero assets by hand.

• Ecommerce and marketing teams

They want 3D product views, spin shots, and short clips.

AI helps them turn product photos into simple 3D versions for web viewers and ads.

They then render turntables and pack them into product videos.

• XR and training studios

They often need training objects, scenes, and digital twins.

AI gives them draft meshes for tools, machines, or rooms.

Artists then adjust scale and details to match the real equipment and spaces.

• Education and hobbyists

Students and hobby artists learn faster with AI support.

They run prompts, inspect results, and then study how topology and UVs work.

In each group, human artists still set style, review output, and protect quality.

How Do Sam D, Grok 4.1, GPT-Codex-max, Hunyuan3D, and Gemini 3 Fit Into 3D Workflows?

Sam D and Hunyuan3D focus on mesh creation, while Grok 4.1, GPT-Codex-max, and Gemini 3 act as brains for planning, code, and automation across your 3D pipeline.

Here is a quick view of each model.

• Sam D

Role: image to object-level 3D

Strength: tight link to real images

Best for: props and objects from photos

Main gap: closer to a research project than a polished SaaS product in most setups

• Hunyuan3D

Role: text and image to full 3D assets

Strength: strong texture detail from prompts

Best for: concept props, characters, stylized assets

Main gap: meshes still need retopo and manual tweaks

• Grok 4.1

Role: reasoning engine and agent brain

Strength: strong tool use and long chains of steps

Best for: scripts, glue code, pipeline planning

Main gap: no direct mesh output

• GPT-Codex-max

Role: code-first engine for complex 3D tools

Strength: long code edits, tests, and maintenance

Best for: Blender scripts, engine tools, batch jobs

Main gap: relies on external 3D generators

• Gemini 3

Role: multi-modal co-pilot for ideas and docs

Strength: deep reasoning across text, images, and video

Best for: briefs, feedback, prompt libraries, cloud flows

Main gap: no public text-to-3D model in the main product line

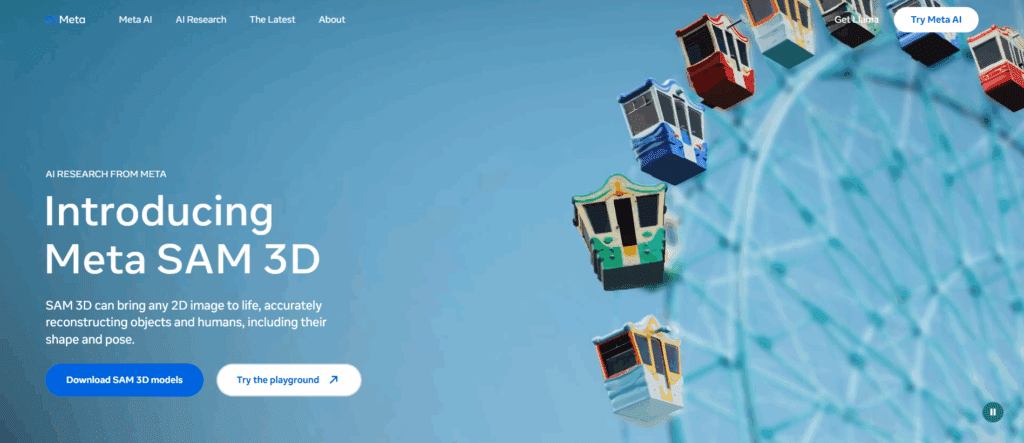

Sam D – Box-to-3D From Images

Sam D sits close to Meta’s work on segmentation and 3D from images. Think of it as a bridge from 2D pixels to 3D shapes.

You draw boxes or use masks over objects in an image. The model then turns those regions into 3D geometry. This helps with objects in scenes, medical scans, or industrial items.
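To make the box-or-mask input concrete, here is a minimal, dependency-free sketch that turns a binary mask into a box prompt. The `(x_min, y_min, x_max, y_max)` pixel convention and the `mask_to_box` helper are assumptions; check which box format your Sam D setup actually expects.

```python
from typing import Optional, Sequence, Tuple

# Assumed box convention: (x_min, y_min, x_max, y_max), inclusive pixel indices.
Box = Tuple[int, int, int, int]


def mask_to_box(mask: Sequence[Sequence[int]]) -> Optional[Box]:
    """Return the tightest box around all non-zero mask pixels, or None if empty."""
    xs, ys = [], []
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None  # nothing selected, so there is no object to box
    return (min(xs), min(ys), max(xs), max(ys))
```

In a real pipeline the mask would come from a segmentation step and you would likely use NumPy, but the idea is the same: the box is just the extent of the selected pixels.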

Sam D suits teams with strong image data. You get geometry that lines up with real photos. You then send that mesh to Blender or another tool for clean-up and texturing.

The limits are clear. Source images need good lighting and angles. Output often needs manual work on topology, holes, and surfaces. For now, treat Sam D as a smart helper, not a complete product on its own.

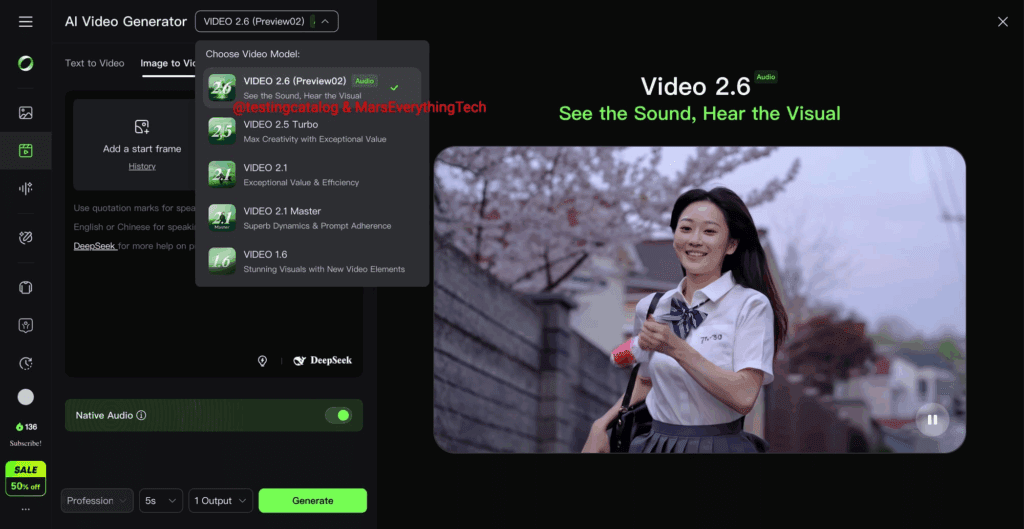

Hunyuan3D – Text and Image to Full 3D Assets

Hunyuan3D comes from Tencent’s Hunyuan work. It turns prompts and images into full 3D assets with textures.

You type prompts such as “realistic wooden chair in Nordic style” or “stylized fantasy sword”. You can also upload 2D art or product photos. The model predicts shape first, then textures.

Hunyuan3D fits teams that want fast concept assets. Game devs use it for props and weapons. Product teams test simple 3D ideas for viewers. Hobby artists explore style ideas without deep mesh skills.

You still fix things by hand. Topology often needs work. UV layouts need clean-up for high-end projects. For many tasks, this tradeoff makes sense because you reach a usable base asset in minutes.

Grok 4.1 – Agent for 3D Workflows and Research

Grok 4.1 from xAI works more like a control tower. It handles reasoning, tool use, and long thought chains.

For 3D work, Grok 4.1 helps in three main ways.

• Pipeline design

You describe your current 3D steps.

Grok proposes a leaner flow and points out jobs suited to AI.

• Agent planning

You connect 3D tools, file stores, and AI generators.

Grok designs agents that call generators, move files, and log runs.

• Research and prompts

You ask for prompt sets, style guides, or code patterns for 3D tools.

Grok shares examples and explains tradeoffs.

Grok 4.1 does not output meshes. It drives the system that calls Hunyuan3D, Sam D, or other engines. That still gives strong leverage, because most 3D production effort goes into process, not one-off runs.
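An agent that "calls generators, moves files, and logs runs" can start as a plain loop. The sketch below shows that loop under stated assumptions: `generate` is a hypothetical callable standing in for whatever Hunyuan3D or Sam D client you use, and the JSON-lines log layout is illustrative, not a format any of these tools define.

```python
import json
import shutil
import time
from pathlib import Path
from typing import Callable, Dict, Iterable, List

# Hypothetical generator signature: takes a prompt and a work folder,
# writes one mesh file there, and returns its path. Swap in a real client.
Generator = Callable[[str, Path], Path]


def run_batch(prompts: Iterable[str], generate: Generator,
              work_dir: Path, library_dir: Path, log_path: Path) -> List[Dict]:
    """Generate one asset per prompt, move it into the library, log every run."""
    work_dir.mkdir(parents=True, exist_ok=True)
    library_dir.mkdir(parents=True, exist_ok=True)
    results = []
    for prompt in prompts:
        started = time.time()
        entry = {"prompt": prompt, "status": "ok"}
        try:
            produced = generate(prompt, work_dir)
            target = library_dir / produced.name
            shutil.move(str(produced), str(target))
            entry["file"] = str(target)
        except Exception as exc:  # record the failure and keep the batch going
            entry["status"] = "error"
            entry["error"] = str(exc)
        entry["seconds"] = round(time.time() - started, 3)
        # One JSON object per line makes the log easy to grep and reload.
        with log_path.open("a", encoding="utf-8") as log:
            log.write(json.dumps(entry) + "\n")
        results.append(entry)
    return results
```

A reasoning model earns its keep around this loop: deciding which prompts to run, reading the log, and retrying or flagging the failures.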

GPT-Codex-max – Code-First 3D Pipeline Builder

GPT-Codex-max focuses on code, not direct media. It writes, edits, and maintains complex scripts over long sessions.

For 3D work, this matters a lot. Modern 3D teams spend a lot of time on pipeline tools, not only on art.

Here is where GPT-Codex-max helps.

• Blender and Maya scripts

It builds tools that place objects, fix normals, batch import assets, and run checks.

• Engine helpers

It writes Unity or Unreal editor tools that build scenes, set LODs, and prepare assets for platforms.

• Batch jobs and CI

It scripts jobs that scan assets, rename files, convert formats, and run tests on scenes.
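As an example of the kind of batch job listed above, here is a small rename script of the sort you might ask GPT-Codex-max to write. The naming rule (lowercase snake_case with an optional prefix such as `prop_`) and both helper names are hypothetical; adapt them to your studio's own conventions.

```python
import re
from pathlib import Path
from typing import List, Tuple


def normalize_name(stem: str, prefix: str = "") -> str:
    """Lowercase, turn spaces and dashes into underscores, drop other symbols."""
    name = re.sub(r"[\s\-]+", "_", stem.strip().lower())
    name = re.sub(r"[^a-z0-9_]", "", name)
    name = re.sub(r"_+", "_", name).strip("_")
    if prefix and not name.startswith(prefix):
        name = prefix + name
    return name


def batch_rename(folder: Path, exts=(".obj", ".fbx", ".glb"),
                 prefix: str = "", dry_run: bool = True) -> List[Tuple[str, str]]:
    """Return (old, new) name pairs; rename on disk only when dry_run is False."""
    changes = []
    for path in sorted(folder.iterdir()):
        if path.suffix.lower() not in exts:
            continue  # leave textures, notes, and other files alone
        new_path = path.with_name(normalize_name(path.stem, prefix) + path.suffix.lower())
        if new_path != path and not new_path.exists():  # never overwrite a file
            changes.append((path.name, new_path.name))
            if not dry_run:
                path.rename(new_path)
    return changes
```

Defaulting to a dry run lets you review the planned renames before anything on disk changes.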

Pair GPT-Codex-max with Hunyuan3D and Sam D. Those engines supply the meshes, and GPT-Codex-max keeps the code around those meshes in good shape.

Gemini 3 – Multi-Modal Co-Pilot for 3D Scenes and Docs

Gemini 3 from Google supports text, images, and video. It focuses on reasoning, code, and deep integration with the Google stack.

For 3D teams, Gemini 3 often fills support roles.

• Briefs and art direction

You share mood boards and rough ideas.

Gemini 3 writes clear briefs and prompt packs for Hunyuan3D or other tools.

• Feedback and reviews

You send renders or screenshots.

Gemini 3 helps you write feedback notes and next steps for artists.

• Docs and training

It writes pipeline docs, code comments, and internal guides.

New hires ramp faster, and scripts stay easier to maintain.
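A prompt pack is easier to keep consistent when the shared style rules live in one place and every asset prompt is built from them. The sketch below assumes a simple `StyleGuide` record and a comma-joined prompt layout; both are hypothetical, and whether a given generator honors an "avoid" clause is an assumption to test.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class StyleGuide:
    style: str       # e.g. "stylized low poly"
    palette: str     # e.g. "muted blues and greys"
    avoid: str = ""  # things the generator should leave out (may be ignored)


def build_prompt_pack(assets: Iterable[str], guide: StyleGuide) -> Dict[str, str]:
    """Return one prompt per asset, all sharing the same style rules."""
    pack = {}
    for asset in assets:
        parts = [f"{guide.style} {asset}", f"color palette: {guide.palette}"]
        if guide.avoid:
            parts.append(f"avoid: {guide.avoid}")
        pack[asset] = ", ".join(parts)
    return pack
```

Gemini 3 can draft the style guide and asset list, while the template keeps the final prompts uniform across a batch.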

You can then wire Gemini 3 into Google Cloud, Google AI Studio, or Vertex AI flows alongside other 3D services.

How Do These Models Compare on Quality, Speed, and Control?

Sam D and Hunyuan3D focus on geometry and texture, while Grok 4.1, GPT-Codex-max, and Gemini 3 focus on control, repeatability, and decision quality.

Think of the split like this.

• Sam D

Output type: object-level meshes from images

Speed: fast once the system runs in your stack

Control: strong link to real photos, less control over style

• Hunyuan3D

Output type: full assets with textures from text or images

Speed: prompt to mesh in minutes

Control: strong prompt control, image control, manual edits on top

• Grok 4.1

Output type: plans, scripts, and tool calls

Speed: fast on ideas and multi-step flows

Control: high control over process, low over final pixels

• GPT-Codex-max

Output type: code for tools and jobs

Speed: strong on long edits across complex codebases

Control: fine control over automation and glue logic

• Gemini 3

Output type: briefs, prompts, docs, helper code

Speed: strong for cross-media reasoning

Control: helps you shape direction and quality gates

You choose a mix, not a single winner. Mesh engines handle volume. Reasoning models protect quality and repeatability.

How Do You Pick the Best AI 3D Model Generator for Your Use Case?

You pick a mesh engine for asset creation, then add reasoning models for planning and scale.

Use this simple path.

• For non-technical artists

Start with Hunyuan3D.

Use it for props, basic characters, and stylized items based on prompts and art.

Use Gemini 3 to write better prompts and simple checklists.

• For tech-heavy studios

Run Hunyuan3D and Sam D for bulk asset creation.

Use GPT-Codex-max to write Blender and engine scripts.

Use Grok 4.1 to design agents that call these scripts and tools on a schedule.

• For ecommerce teams

Use Hunyuan3D for simple product 3D assets from photos.

Use Gemini 3 to write product copy and shot lists.

Send renders to a video tool such as VidAU to create spin shots, product explainers, and ads.

Factor in three points for each choice.

How much money do you plan to spend on cloud runs?

How strong are your in-house 3D skills today?

How far do you want automation to reach this quarter?

How Do You Build a Full 3D Content Pipeline Around These Models?

A solid modern pipeline uses Hunyuan3D and Sam D for assets, GPT-Codex-max and Grok 4.1 for automation, Gemini 3 for planning, and a video layer for final media.

Here is a simple six-step flow.

- Brief and asset list

Use Gemini 3 or Grok 4.1 to collect art direction, asset lists, and naming rules.

Store everything in a shared doc or repo.

- Generation

Run Hunyuan3D on prompts and reference art.

Run Sam D on image data that needs 3D forms.

Tag each output with use case, level, and scene.

- Clean-up and validation

Ask GPT-Codex-max to build scripts that fix scale, normals, names, and formats.

Run checks on polygon counts, material slots, and UV issues.

- Scene assembly

Use script support in Blender or your game engine.

Let GPT-Codex-max wire up tools that place assets and set LODs.

- Review and iteration

Share renders with artists and leads.

Use Gemini 3 to summarise feedback and update prompt packs and briefs.

- Rendering and video packaging

Render turntables and scene fly-throughs.

Send clips into VidAU or your main video tool to add captions, music, and aspect ratio changes for ads and social.
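For the scene-assembly step, LOD setup usually starts from a triangle budget per level. The sketch below assumes each level keeps half the triangles of the previous one, with a minimum floor; the 0.5 ratio, the floor of 100, and the `lod_budgets` name are illustrative assumptions, not engine defaults.

```python
from typing import List


def lod_budgets(base_triangles: int, levels: int = 4,
                ratio: float = 0.5, floor: int = 100) -> List[int]:
    """Triangle targets per LOD: LOD0 keeps the full count, each later level
    keeps `ratio` of the previous one, and no level drops below `floor`."""
    floor = min(floor, base_triangles)  # never push LOD0 above the source mesh
    budgets = []
    current = float(base_triangles)
    for _ in range(levels):
        budgets.append(max(floor, int(round(current))))
        current *= ratio
    return budgets
```

A script can feed these targets to a decimation tool in Blender or your engine, so every generated prop gets the same LOD chain.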

What Are The Current Limits of AI 3D Model Generators?

AI 3D model generators still miss on clean topology, complex rigs, exact style, and legal safety, so teams keep humans in review loops.

Here are the key limits.

• Topology and animation

Meshes often arrive fully triangulated, with uneven density or odd edge flow.

Animators still need clean quad layouts for serious work.

• Textures and UVs

Systems sometimes create seams, stretched areas, or messy UV maps.

Artists fix layouts and repaint key parts.

• Style and brand rules

Large models drift in style over long runs.

You still need art direction and brand checks.

• Legal and data rights

The sources of training data often remain unclear.

Studios run legal reviews on use cases, client rules, and markets.

• Infra needs

Large 3D runs need strong GPUs and good storage.

Studios still need to plan GPU capacity, job queues, and scheduling.

You reduce risk with clear prompts, template meshes, reference boards, and a fixed QA checklist on every batch.
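Part of a fixed QA checklist can run automatically. The sketch below reads Wavefront OBJ text and flags the issues described above: high triangle counts, too many material slots, missing UVs, n-gons, and triangle-heavy topology. The thresholds and helper names are assumptions to tune per project, and it only checks that `vt` lines exist, not that faces actually use them.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class ObjReport:
    vertices: int = 0
    triangles: int = 0  # faces with 3 corners
    quads: int = 0      # faces with 4 corners
    ngons: int = 0      # faces with 5 or more corners
    tri_count: int = 0  # triangle count after triangulating every face
    has_uvs: bool = False
    materials: Set[str] = field(default_factory=set)


def inspect_obj(text: str) -> ObjReport:
    """Collect basic mesh stats from OBJ text (comments and other lines are skipped)."""
    report = ObjReport()
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        tag = parts[0]
        if tag == "v":
            report.vertices += 1
        elif tag == "vt":
            report.has_uvs = True
        elif tag == "usemtl" and len(parts) > 1:
            report.materials.add(parts[1])
        elif tag == "f" and len(parts) >= 4:
            corners = len(parts) - 1
            report.tri_count += corners - 2  # an n-gon splits into n - 2 triangles
            if corners == 3:
                report.triangles += 1
            elif corners == 4:
                report.quads += 1
            else:
                report.ngons += 1
    return report


def qa_issues(report: ObjReport, max_tris: int = 10_000,
              max_materials: int = 4) -> List[str]:
    """Return readable problems; an empty list means the mesh passed."""
    issues = []
    if report.tri_count > max_tris:
        issues.append(f"too dense: {report.tri_count} tris > {max_tris}")
    if len(report.materials) > max_materials:
        issues.append(f"too many material slots: {len(report.materials)}")
    if not report.has_uvs:
        issues.append("no UV coordinates")
    if report.ngons:
        issues.append(f"{report.ngons} n-gons")
    faces = report.triangles + report.quads + report.ngons
    if faces and report.quads < faces / 2:
        issues.append("mostly triangles: may need retopology for animation")
    return issues
```

Running this on every batch gives artists a short list of meshes to open first, instead of reviewing everything by eye.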

How Do You Start With AI 3D Model Generators in One Week?

You start with one small test, then grow. One week is enough for a pilot.

Day 1

Pick a use case.

For example, props for one game level or three hero products for a shop.

Day 2

Run five prompts and five reference images through Hunyuan3D.

Save all outputs and note which prompts work best.

Day 3

Pick a small set of photos for Sam D.

Turn each object into a rough 3D version.

Day 4

Import the outputs into Blender or your engine.

Note issues with scale, topology, and textures.

Day 5

Ask GPT-Codex-max to write tiny scripts.

Start with scale fixes, renaming, and basic format export.

Day 6

Use Gemini 3 or Grok 4.1 to map an improved pipeline.

Decide which steps stay manual and which move into scripts or agents.

Day 7

Render a short turntable or scene fly-through.

Send the clip into VidAU or your chosen editor for captions, music, and resizing.

Show this small package to your team as a first proof.
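The Day 5 scale fix is mostly simple math. The sketch below rescales a list of vertex positions so the mesh reaches a target height and rests on the ground plane. Reading and writing the mesh is left out, and the function names are hypothetical; you could call the same math from a Blender script or an engine tool.

```python
from typing import Iterable, List, Tuple

Vec3 = Tuple[float, float, float]


def bounding_box(vertices: List[Vec3]) -> Tuple[Vec3, Vec3]:
    """Return the (min corner, max corner) of an axis-aligned bounding box."""
    xs, ys, zs = zip(*vertices)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def scale_to_height(vertices: Iterable[Vec3], target_height: float,
                    up_axis: int = 2) -> Tuple[float, List[Vec3]]:
    """Uniformly scale so the mesh spans target_height along up_axis and its
    lowest point sits at 0. Blender is Z-up (up_axis=2); pass up_axis=1 for
    Y-up engines such as Unity."""
    verts = list(vertices)
    if not verts:
        raise ValueError("mesh has no vertices")
    lo, hi = bounding_box(verts)
    height = hi[up_axis] - lo[up_axis]
    if height <= 0:
        raise ValueError("mesh has zero height on the chosen axis")
    factor = target_height / height
    scaled = []
    for v in verts:
        p = [c * factor for c in v]
        p[up_axis] -= lo[up_axis] * factor  # drop the mesh onto the ground plane
        scaled.append((p[0], p[1], p[2]))
    return factor, scaled
```

Using one uniform factor keeps proportions intact, so a generated chair or crate only changes size, not shape.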

Conclusion

Start with one AI 3D model generator that fits your main goal, then add one reasoning model to support planning and code for that small project.

Treat Sam D and Hunyuan3D as your asset engines. Treat Grok 4.1, GPT-Codex-max, and Gemini 3 as the brains that plan flows, write scripts, and maintain quality. Pick one use case this week, run a small test from prompt to mesh to video, and then decide how far you want to push your AI 3D model generator stack next.

FAQs

1. Do AI 3D model generators replace traditional 3D artists?

No. AI 3D model generators handle draft meshes and volume work, while artists still guide style, fix topology, build rigs, and light scenes. The best teams use AI to handle busy work, letting artists focus on key shots and hero assets.

2. Which AI 3D model generator suits games best?

Hunyuan3D suits game props and draft characters from prompts and art. Sam D helps with props from photos. Game teams often pair these engines with GPT-Codex-max scripts and Grok 4.1 agents, so they keep real control over scenes and quality.

3. What hardware do I need for Hunyuan3D and Sam D?

Cloud versions cut local hardware needs, so a fast internet connection and a standard workstation cover many tasks. For local runs you still need a modern GPU with enough VRAM, plus storage for large batches of meshes, textures, and scenes.

4. How do I keep AI 3D output safe for legal use?

Follow clear rules from your company and clients. Avoid prompts that target real brands, faces, or IP you do not have rights to. Run all output through legal review for big projects. Keep logs of tools, prompts, and edits, so you can show how each asset was made.

5. How do I link AI 3D models to video and marketing tools?

Export clean meshes into Blender or your engine, then render spin shots or short scenes. Send the clips into a video tool such as VidAU. Add captions, music, overlays, and resize for TikTok, Reels, YouTube, and product pages.
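If your video tool does not resize clips for you, the math behind a centered crop is short. The sketch below finds the largest centered crop of a frame for a target aspect ratio, such as 9:16 for TikTok and Reels or 16:9 for YouTube. The even-number rounding assumes an H.264 encoder with 4:2:0 chroma, which needs even frame dimensions; the `center_crop` name and the `TARGETS` table are illustrative.

```python
from typing import Tuple

# Common aspect ratios (width, height) for the platforms mentioned above.
TARGETS = {"youtube": (16, 9), "reels_tiktok": (9, 16), "square": (1, 1)}


def center_crop(width: int, height: int,
                ratio_w: int, ratio_h: int) -> Tuple[int, int, int, int]:
    """Largest centered crop with the given aspect ratio.
    Returns (x, y, crop_w, crop_h) in pixels."""
    if width * ratio_h > height * ratio_w:
        # Source is wider than the target: keep full height, trim the sides.
        crop_h = height
        crop_w = height * ratio_w // ratio_h
    else:
        # Source is taller (or equal): keep full width, trim top and bottom.
        crop_w = width
        crop_h = width * ratio_h // ratio_w
    crop_w -= crop_w % 2  # round down to even sizes for 4:2:0 video encoders
    crop_h -= crop_h % 2
    return (width - crop_w) // 2, (height - crop_h) // 2, crop_w, crop_h
```

For a 1920x1080 turntable render, a 9:16 crop keeps a 606-pixel-wide vertical strip from the center, so frame the hero product near the middle of the shot.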