Zero to Viral: A Complete Beginner’s AI Video Creation Workflow Using Runway, Sora, Kling, and ComfyUI

Never used AI video tools before? Here’s your complete roadmap—from a blank folder on your computer to a polished, platform-optimized AI video ready to go viral.

This guide is designed for absolute beginners. You don’t need coding skills, prior editing experience, or deep AI knowledge. What you do need is a clear, end-to-end workflow that removes guesswork and replaces it with repeatable steps. By the end, you’ll understand how professional creators turn still images into cinematic AI videos using tools like Runway, Sora, Kling, and ComfyUI, and how they optimize those videos for social platforms.

1. Choosing Source Photos That AI Video Models Love

Before you touch any AI video tool, know that your results are largely determined by the quality of your source images. Most AI video models work in a compressed latent space and infer motion, depth, and continuity from the visual patterns in your image. Garbage in still means garbage out.

What Makes a Good Source Image?

For beginners, aim for images with:

- Clear subjects: One main person or object centered in the frame

- High resolution: Minimum 1024×1024 where possible

- Consistent lighting: Avoid harsh shadows or blown-out highlights

- Natural depth: Background separation helps motion synthesis

AI video models rely heavily on latent consistency—the ability to maintain the same visual identity across frames. Busy backgrounds, multiple faces, or distorted anatomy increase temporal instability (flickering, warping, face drift).
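Optional, if you’re comfortable with a little Python: the resolution and lighting checks above can be automated before you upload anything. The sketch below is a hypothetical helper (`check_source_image` is not part of any tool in this guide) that takes the image size plus a 256-bin grayscale histogram, then flags a short side under 1024 px or too many pixels near pure white or pure black. The 2% clipping limit and the near-white/near-black cutoffs are assumptions to tune, not published thresholds.

```python
def check_source_image(width: int, height: int, histogram: list[int],
                       min_side: int = 1024, clip_limit: float = 0.02) -> list[str]:
    """Return warnings for a source image (an empty list means it looks fine).

    histogram: 256 pixel counts for the grayscale (luminance) version of the
    image, where index 0 is pure black and index 255 is pure white.
    """
    if len(histogram) != 256 or sum(histogram) == 0:
        raise ValueError("expected a non-empty 256-bin grayscale histogram")
    warnings = []

    # Resolution: this guide suggests at least 1024 px on each side.
    short_side = min(width, height)
    if short_side < min_side:
        warnings.append(f"low resolution: short side is {short_side}px, aim for {min_side}px+")

    # Lighting: share of pixels that are nearly pure white (values 250-255)
    # or nearly pure black (values 0-5).
    total = sum(histogram)
    highlights = sum(histogram[250:]) / total
    shadows = sum(histogram[:6]) / total
    if highlights > clip_limit:
        warnings.append(f"blown-out highlights: {highlights:.1%} of pixels near white")
    if shadows > clip_limit:
        warnings.append(f"crushed shadows: {shadows:.1%} of pixels near black")
    return warnings
```

With Pillow installed, `img = Image.open("photo.jpg").convert("L")` gives you both inputs: `check_source_image(*img.size, img.histogram())`.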

Photos vs. AI-Generated Images

You can start with:

- Real photos (from your phone or camera)

- AI-generated images (Midjourney, DALL·E, Stable Diffusion)

For beginners, AI-generated images often perform better because they are already “AI-native.” If you’re using Stable Diffusion or ComfyUI to generate images, keep the seed fixed: reuse the same seed when generating variations. Because the seed determines the model’s starting noise, this helps keep your character consistent from one variation to the next, which pays off when you move to video.
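Why does reusing a seed help? Diffusion models start each generation from random noise, and the seed fixes that noise. This toy stand-in uses Python’s built-in `random` module rather than a real model, but it shows the principle: the same seed reproduces the same starting point exactly, while a new seed does not. In ComfyUI, the equivalent is typically setting the KSampler node’s seed and leaving `control_after_generate` on `fixed`.

```python
import random

def initial_noise(seed: int, size: int = 8) -> list[float]:
    """Toy stand-in for a diffusion model's starting noise.

    Real pipelines draw a large tensor of Gaussian noise, but the principle
    is the same: the seed alone determines where generation starts.
    """
    rng = random.Random(seed)  # independent generator, unaffected by global state
    return [rng.gauss(0.0, 1.0) for _ in range(size)]

print(initial_noise(1234) == initial_noise(1234))  # True: same seed, same start
print(initial_noise(1234) == initial_noise(5678))  # False: new seed, new start
```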

Composition Tips for Motion

Think like a director:

- Leave space around the subject for movement

- Avoid extreme close-ups for your first videos

- Use portrait orientation (9:16) if targeting TikTok, Reels, or Shorts

A well-composed still image gives video models more room to hallucinate believable motion rather than chaotic deformation.
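If your source image isn’t already 9:16, you can compute the crop yourself instead of letting a tool guess. This optional sketch (`center_crop_box` is a hypothetical helper) returns the largest centered 9:16 box, assuming your subject sits in the middle of the frame as recommended above. The tuple uses the `(left, upper, right, lower)` order that Pillow’s `Image.crop()` expects.

```python
def center_crop_box(width: int, height: int,
                    ratio_w: int = 9, ratio_h: int = 16) -> tuple[int, int, int, int]:
    """Largest centered crop box with the given aspect ratio (default 9:16).

    Returns (left, upper, right, lower), the box order Pillow's Image.crop() uses.
    """
    target = ratio_w / ratio_h
    if width / height > target:
        # Image is too wide: keep the full height and trim both sides equally.
        new_w = round(height * target)
        left = (width - new_w) // 2
        return (left, 0, left + new_w, height)
    # Image is too tall (or already exact): keep the full width, trim top and bottom.
    new_h = round(width / target)
    top = (height - new_h) // 2
    return (0, top, width, top + new_h)
```

For a 1920×1080 landscape photo, `center_crop_box(1920, 1080)` returns `(656, 0, 1264, 1080)`: a slice only 608 pixels wide, well under the 1024 px guideline, so start from a larger image where you can.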

2. A Step-by-Step AI Video Workflow From Image to Motion

Now let’s build your actual AI video pipeline. This is a beginner-friendly workflow that mirrors what advanced creators use—just simplified.

Step 1: Choose Your AI Video Engine

Each tool has strengths:

- Runway Gen-3: Best for beginners, clean UI, strong image-to-video

- Sora: Exceptional realism and physics (limited access)

- Kling: Strong character motion and cinematic movement

- ComfyUI: Maximum control, steep learning curve

If this is your first AI video, start with Runway. You can move to Kling or ComfyUI later.

Step 2: Image-to-Video Setup (Runway Example)

- Upload your source image

- Select Image to Video

- Choose a duration (4–6 seconds is ideal for beginners)

- Set motion strength to medium

Avoid maxing out motion sliders. Excessive motion breaks latent coherence and introduces jitter.

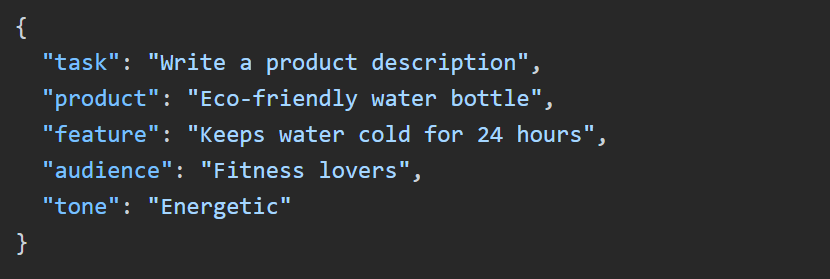

Step 3: Prompting for Motion (Not Style)

A common beginner mistake is over-prompting.

Bad prompt:

> cinematic, ultra realistic, 8k, dramatic lighting

Good motion-focused prompt:

> subtle camera push-in, natural breathing, gentle hair movement, stable face

You are not re-describing the image. You are describing how it should move.
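A quick way to catch over-prompting is to split a prompt into its comma-separated phrases and count how many only describe style. The word lists below are hypothetical starting points drawn from this section’s two examples, and the substring matching is deliberately crude (for instance, “pan” also matches “expand”), so treat the output as a nudge rather than a verdict.

```python
# Hypothetical word lists based on this section's examples; extend them freely.
STYLE_TERMS = {"cinematic", "ultra realistic", "8k", "4k", "dramatic lighting", "masterpiece"}
MOTION_TERMS = {"push-in", "pull-out", "pan", "tilt", "orbit", "breathing", "hair movement",
                "blink", "sway", "drift", "stable face", "subtle", "gentle", "slow"}

def lint_prompt(prompt: str) -> dict[str, list[str]]:
    """Sort a comma-separated prompt into style-only phrases and motion phrases."""
    phrases = [p.strip().lower() for p in prompt.split(",") if p.strip()]
    style_only = [p for p in phrases if p in STYLE_TERMS]
    # Crude substring match: any phrase mentioning a motion term counts.
    motion = [p for p in phrases if any(term in p for term in MOTION_TERMS)]
    return {"style_only": style_only, "motion": motion}

bad = lint_prompt("cinematic, ultra realistic, 8k, dramatic lighting")
good = lint_prompt("subtle camera push-in, natural breathing, gentle hair movement, stable face")
print(len(bad["style_only"]), len(bad["motion"]))    # 4 0
print(len(good["style_only"]), len(good["motion"]))  # 0 4
```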

Step 4: Understanding Temporal Stability

AI video models must keep each frame consistent with the frames around it. Poor temporal stability results in:

- Flickering faces

- Warping backgrounds

- Melting hands

To reduce this:

- Keep clips short

- Avoid fast camera moves

- Maintain a single subject

Advanced tools like ComfyUI let you control the sampler (for example, Euler a), the scheduler, denoising strength, and frame interpolation. For beginners, trust the presets, but know these settings exist as you level up.
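Flicker shows up as sudden jumps between consecutive frames. One simple way to find them, sketched here under the assumption that you already have decoded grayscale frames as lists of pixel rows, is to measure the mean absolute pixel difference between neighboring frames and flag any jump several times larger than the clip’s typical (median) jump. This is plain frame differencing, a rough heuristic rather than a standard quality metric, and the factor of 3 is an arbitrary starting point.

```python
from statistics import median

Frame = list[list[int]]  # one grayscale frame: rows of pixel values 0-255

def frame_diff(a: Frame, b: Frame) -> float:
    """Mean absolute per-pixel difference between two same-sized frames."""
    diffs = [abs(pa - pb) for row_a, row_b in zip(a, b) for pa, pb in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)

def flicker_spikes(frames: list[Frame], factor: float = 3.0) -> list[int]:
    """Return indices i where the jump from frame i to frame i+1 is unusually large.

    A jump counts as a spike if it exceeds `factor` times the median jump, so
    steady motion is tolerated while sudden flashes or face swaps stand out.
    """
    jumps = [frame_diff(a, b) for a, b in zip(frames, frames[1:])]
    if not jumps:
        return []
    baseline = max(median(jumps), 1e-9)  # avoid a zero threshold on a static clip
    return [i for i, jump in enumerate(jumps) if jump > factor * baseline]

# Toy clip: a slow brightness ramp with one flickering frame at index 3.
clip = [[[10 + 2 * i] * 4 for _ in range(4)] for i in range(6)]
clip[3] = [[200] * 4 for _ in range(4)]
print(flicker_spikes(clip))  # [2, 3]: the jumps into and out of frame 3
```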

Step 5: Multiple Takes Are Normal

Professionals rarely use the first generation.

Generate:

- 3–5 variations per image

- Keep the best 1–2

- Discard the rest

This is normal. AI video creation is curation, not one-click magic.
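Curation is easier if you log every take. The `Take` record below is a hypothetical format, not any tool’s export schema: it stores the source image, the seed (where your tool exposes one), the prompt, and your own score, and `keep_best` returns the top two takes per image, matching the “keep the best 1–2” rule. The example takes are made-up illustrations.

```python
from dataclasses import dataclass

@dataclass
class Take:
    """One generation attempt (a suggested log format, not any tool's schema)."""
    image: str    # source image file name
    seed: int     # seed used, if your tool shows one
    prompt: str
    score: float  # your own 1-5 rating, or an automated stability score

def keep_best(takes: list[Take], keep: int = 2) -> list[Take]:
    """Return the `keep` highest-scoring takes for each source image."""
    by_image: dict[str, list[Take]] = {}
    for take in takes:
        by_image.setdefault(take.image, []).append(take)
    best: list[Take] = []
    for image_takes in by_image.values():
        best.extend(sorted(image_takes, key=lambda t: t.score, reverse=True)[:keep])
    return best

takes = [
    Take("portrait.png", 101, "subtle push-in, natural breathing", 3),
    Take("portrait.png", 102, "subtle push-in, natural breathing", 5),
    Take("portrait.png", 103, "slow orbit, gentle hair movement", 2),
    Take("portrait.png", 104, "slow orbit, gentle hair movement", 4),
]
print([t.seed for t in keep_best(takes)])  # [102, 104]
```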

3. Post-Production, Optimization, and Publishing for Viral Reach

Your AI video isn’t finished when the model stops rendering. Post-production is where viral potential is unlocked.

Step 1: Basic Editing

Use beginner-friendly editors:

- CapCut

- DaVinci Resolve

- Premiere Pro (optional)

Edit for:

- Tight pacing (remove dead frames)

- Loopable endings

- Visual clarity in the first second
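For loopable endings, one approach is to find the frame near the end that looks most like the first frame and cut just before it, so that when playback wraps around to frame 0 it reads as the next step of the motion. This optional sketch assumes grayscale frames as lists of pixel rows; `best_loop_cut` is a hypothetical helper, and the 12-frame search window (about half a second at 24fps) is an arbitrary choice.

```python
Frame = list[list[int]]  # one grayscale frame: rows of pixel values 0-255

def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Mean absolute per-pixel difference between two same-sized frames."""
    diffs = [abs(pa - pb) for row_a, row_b in zip(a, b) for pa, pb in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)

def best_loop_cut(frames: list[Frame], search_last: int = 12) -> int:
    """Index of the late frame that most resembles frame 0.

    Keep frames[:cut]: the clip then ends just before that look-alike frame,
    so wrapping back to frame 0 looks like a natural continuation.
    """
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    start = max(1, len(frames) - search_last)  # never consider frame 0 itself
    return min(range(start, len(frames)), key=lambda i: mean_abs_diff(frames[i], frames[0]))

# Toy clip of 1x1 frames: brightness rises, then falls back toward the start.
clip = [[[v]] for v in (0, 10, 20, 30, 20, 5, 15)]
cut = best_loop_cut(clip)
print(cut, len(clip[:cut]))  # 5 5
```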

Step 2: Upscaling and Frame Smoothing

Many AI video tools output clips at lower frame rates or resolutions than you’ll want for the final post.

Options:

- Runway’s built-in upscaler

- Topaz Video AI

- Frame interpolation to 30 or 60fps

This improves perceived quality dramatically, especially on mobile.
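If you don’t have Topaz Video AI, the free command-line tool ffmpeg (not otherwise covered in this guide) offers motion-compensated frame interpolation through its `minterpolate` filter. The Python wrapper below only builds the command; `interpolation_command` is a hypothetical helper name, and the encoder settings (H.264 at CRF 18, `yuv420p` for broad player compatibility) are assumptions chosen to keep compression light. Expect it to be slow, and compare the result with the original, since interpolation can smear fast motion.

```python
import shlex

def interpolation_command(src: str, dst: str, fps: int = 60) -> list[str]:
    """Build (but do not run) an ffmpeg command that interpolates a clip to `fps`."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return [
        "ffmpeg", "-i", src,
        # Motion-compensated interpolation (mi_mode=mci) synthesizes in-between frames.
        "-vf", f"minterpolate=fps={fps}:mi_mode=mci",
        # H.264 at a low CRF keeps compression light; yuv420p plays almost everywhere.
        "-c:v", "libx264", "-crf", "18", "-pix_fmt", "yuv420p",
        dst,
    ]

cmd = interpolation_command("clip.mp4", "clip_60fps.mp4")
print(shlex.join(cmd))
```

Once ffmpeg is installed and on your PATH, run it with `subprocess.run(cmd, check=True)`.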

Step 3: Platform Optimization

Each platform has its own algorithmic preferences.

TikTok / Reels / Shorts

- 9:16 aspect ratio

- 5–8 seconds performs best

- Instant motion in the first frame

- Subtle loop (end matches beginning)

YouTube

- Longer sequences (10–30 seconds)

- Strong narrative or transformation

Export at platform-native resolutions (for example, 1080×1920 for 9:16 vertical video) and avoid heavy compression.
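Before uploading, it can help to check each export against the targets in this section. In this optional sketch the durations come from the lists above, while the resolutions are assumptions: 1080×1920 is a common vertical export size, and treating YouTube uploads as landscape 1920×1080 is a guess this guide does not state. `PlatformSpec`, `SPECS`, and `check_export` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PlatformSpec:
    width: int
    height: int
    min_seconds: float
    max_seconds: float

# Durations follow this section; resolutions are assumed common export sizes,
# not official platform requirements.
SPECS = {
    "tiktok_reels_shorts": PlatformSpec(1080, 1920, 5, 8),
    "youtube": PlatformSpec(1920, 1080, 10, 30),  # assumes a landscape upload
}

def check_export(platform: str, width: int, height: int, seconds: float) -> list[str]:
    """Return a list of mismatches between an export and the platform target."""
    spec = SPECS[platform]
    problems = []
    if (width, height) != (spec.width, spec.height):
        problems.append(f"resolution {width}x{height}, expected {spec.width}x{spec.height}")
    if not spec.min_seconds <= seconds <= spec.max_seconds:
        problems.append(f"length {seconds}s, expected {spec.min_seconds}-{spec.max_seconds}s")
    return problems

print(check_export("tiktok_reels_shorts", 1080, 1920, 6))  # []
print(check_export("tiktok_reels_shorts", 720, 1280, 12))  # flags resolution and length
```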

Step 4: Captions, Context, and Posting

AI videos go viral when viewers understand them instantly.

Add:

- A single-line caption

- Context in the first 2 seconds

- Minimal text overlays

Example caption:

> This entire video was generated from one image using AI

Curiosity drives watch time. Watch time drives reach.

Step 5: Iteration Is the Real Secret

Your first video won’t be perfect—and that’s expected.

Track:

- Hook retention

- Replays

- Comments asking “how was this made?”

Each upload teaches you what to improve next.

Final Thoughts

AI video creation isn’t about mastering every AI video tool; it’s about mastering the workflow. Start with strong images, use beginner-friendly platforms like Runway, respect the limits of latent consistency, and polish your output for the platform you’re posting on.

This same workflow scales from your first video to your hundredth. The difference isn’t talent—it’s repetition.

Frequently Asked Questions

Q: Do I need a powerful computer to create AI videos?

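A: No. Most beginner-friendly AI video tools, like Runway, Sora, and Kling, run entirely in the cloud, so you only need a basic computer and a stable internet connection. ComfyUI is the exception: it typically runs on your own machine, and video workflows benefit from a powerful GPU.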

Q: What is latent consistency and why does it matter?

A: Latent consistency refers to how well an AI model maintains visual identity across frames. High latent consistency prevents flickering faces, warping objects, and unstable motion.

Q: Should beginners use ComfyUI?

A: ComfyUI offers maximum control but has a steep learning curve. Beginners should start with Runway or Kling and move to ComfyUI once they understand AI video fundamentals.

Q: How long should my first AI videos be?

A: For social platforms, 4–8 seconds is ideal. Short clips reduce visual artifacts and perform better algorithmically.

Q: Why does my AI video look unstable or jittery?

A: Common causes include excessive motion prompts, complex backgrounds, long clip durations, or poor source images. Simplifying inputs usually fixes the issue.