2026 AI Video Trends: Data-Driven Strategies, Tools, and Formats That Are Actually Going Viral

These AI video styles are dominating 2026 – are you using them?

The AI video landscape is evolving at a pace that makes last year’s viral formats look primitive. What worked in early 2025—hyper-real spectacle clips, surreal morph transitions, abstract diffusion art—has been replaced by more structured, character-driven, retention-optimized formats.

If you’re a content strategist, AI creator, or trend-focused YouTuber, the real challenge isn’t access to tools. It’s understanding what patterns are statistically dominating and how to reproduce them systematically using the right pipelines.

This is a data-driven breakdown of what’s actually going viral in 2026—and how to architect your workflow around it.

1. From Spectacle to Story: The Rise of Character-Driven AI Narratives

The Death of “Pure Spectacle” Content

In 2024–2025, AI spectacle content dominated feeds:

- Infinite zooms

- Surreal morph loops

- Hyper-detailed fantasy sequences

- Random prompt-based aesthetic experiments

These relied heavily on diffusion novelty. Creators leaned on high CFG scales, ancestral samplers such as Euler a for sharp detail, and extreme prompt stacking to produce visual density.

But in 2026, novelty alone no longer drives retention.

Short-form platforms now algorithmically prioritize:

- Watch time completion

- Rewatches

- Comment-driven engagement

- Character recognition across uploads

The result? Character-driven AI narratives outperform pure spectacle by a measurable margin.

Why Character Consistency Wins

Audiences now follow AI characters the way they follow human creators.

This shift was unlocked by improvements in:

- Seed Parity across shots

- Latent Consistency Models (LCM)

- Multi-frame conditioning

- Cross-shot embedding locking in tools like Sora and Kling

Instead of generating disconnected scenes, creators now:

- Lock a base seed

- Anchor identity via reference frames

- Maintain facial embeddings across sequences

- Use low-denoise strength image-to-video passes for continuity

In ComfyUI, this often looks like:

- Reference-only control nodes

- IPAdapter-based character locking

- Consistent VAE usage across clips

- Fixed noise seeds for sequential scene coherence

The result is not just visual cohesion—it’s narrative continuity, which increases session time and subscriber conversion.
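The seed-locking habit can be sketched in a few lines of Python. This is a minimal illustration rather than any tool's API: `shot_seed`, the character name, and the scene-family names are hypothetical, and it assumes your generator accepts an integer seed in the 32-bit range.

```python
import hashlib


def shot_seed(character_id: str, scene_family: str, base_seed: int = 0) -> int:
    """Derive a repeatable 32-bit seed for a character within a scene family.

    The same inputs always give the same seed, so a re-rendered shot starts
    from the same noise. Hypothetical helper, not part of any tool.
    """
    key = f"{base_seed}:{character_id}:{scene_family}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Interpret the first 4 bytes as an unsigned 32-bit integer.
    return int.from_bytes(digest[:4], "big")


if __name__ == "__main__":
    # Hypothetical character and scene families, for illustration only.
    for family in ("rooftop_night", "subway_day"):
        print(family, shot_seed("mara", family, base_seed=2026))
```

A fixed seed only makes the starting noise repeatable. It does not preserve identity on its own, which is why it is paired with reference frames or IPAdapter-style conditioning.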

What’s Going Viral Now

The dominant formats:

1. AI Mini-Series (30–90 second episodic arcs)

Recurring characters, cliffhangers, serialized world-building.

2. POV Simulations with Emotional Hooks

“POV: You’re the last human in 2089” — but now with consistent protagonist design.

3. AI Character Commentary Channels

Fully synthetic personalities reacting to trends using AI voice + consistent video avatars.

4. Hybrid Creator + AI Co-Presence

Real creator appears alongside a persistent AI character.

Spectacle is still used—but as a narrative amplifier, not the core value.

If you’re still publishing standalone aesthetic clips with no recurring identity, you’re competing in a declining retention tier.

2. The New Sweet Spot: Optimal Length, Structure, and Format

Trend data across Shorts, Reels, and TikTok reveals three dominant timing archetypes.

Format A: 18–25 Seconds (Loop-Optimized)

Best for:

- High-concept POV

- Micro-twists

- Loopable reveals

Technical characteristics:

- Single environment

- 1–2 camera moves

- Latent Consistency model for motion stability

- Low jitter, minimal frame interpolation artifacts

These often use:

- 16:9 generated → center-cropped to 9:16

- Controlled motion strength to prevent morph drift

- Subtle camera parallax via depth estimation
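The 16:9 to 9:16 center crop is simple arithmetic, sketched here in Python. The function name `center_crop_box` is an assumption for illustration, and the rounding to even dimensions reflects what common 4:2:0 video encoding setups require rather than anything stated in the trend data.

```python
def center_crop_box(src_w: int, src_h: int, ratio_w: int = 9, ratio_h: int = 16):
    """Return (left, top, right, bottom) of the largest centered crop with
    aspect ratio ratio_w:ratio_h inside a src_w x src_h frame."""
    # Width the target ratio would need at full source height.
    crop_w = src_h * ratio_w // ratio_h
    if crop_w <= src_w:
        crop_h = src_h
    else:
        # Source is narrower than the target ratio: keep full width instead.
        crop_w = src_w
        crop_h = src_w * ratio_h // ratio_w
    # Round down to even dimensions.
    crop_w -= crop_w % 2
    crop_h -= crop_h % 2
    left = (src_w - crop_w) // 2
    top = (src_h - crop_h) // 2
    return left, top, left + crop_w, top + crop_h


if __name__ == "__main__":
    print(center_crop_box(1920, 1080))  # (657, 0, 1263, 1080)
```

For a 1920x1080 source the crop is 606 pixels wide, roughly a third of the frame, so the subject has to be composed near the center before generation.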

The key is seamless looping. The last 1.5 seconds must visually rhyme with the first.
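One rough way to check a loop seam is to compare the jump from the last frame back to the first against a typical frame-to-frame step. The sketch below assumes frames are already decoded into flat lists of grayscale values; `loop_seam_score` and that frame representation are assumptions for illustration, not a standard metric.

```python
from typing import Sequence

Frame = Sequence[int]  # flat grayscale pixels, 0-255 (assumed representation)


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute pixel difference between two same-sized frames."""
    if len(a) != len(b) or not a:
        raise ValueError("frames must be non-empty and the same size")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def loop_seam_score(frames: Sequence[Frame]) -> float:
    """Ratio of the last-to-first frame difference to the average
    difference between consecutive frames.

    A value near 1.0 means the cut back to the start looks like an
    ordinary frame step. Larger values mean a more visible seam.
    """
    if len(frames) < 2:
        raise ValueError("need at least 2 frames")
    steps = [mean_abs_diff(frames[i], frames[i + 1]) for i in range(len(frames) - 1)]
    typical = sum(steps) / len(steps)
    seam = mean_abs_diff(frames[-1], frames[0])
    if typical == 0:
        return 1.0 if seam == 0 else float("inf")
    return seam / typical
```

A pixel-difference score like this is crude. It says nothing about whether the motion direction matches across the seam, so treat it as a quick filter before watching the loop yourself.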

Format B: 35–55 Seconds (Narrative Burst)

This is currently the highest-performing range.

Why?

- Long enough for emotional arc

- Short enough for high completion

- Perfect for 3-act micro-story structure

Winning structure:

- 0–3 sec: High-contrast hook (visual or emotional)

- 3–25 sec: Escalation

- 25–45 sec: Twist or reveal

- Final 5 sec: Cliffhanger or identity reinforcement
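The beat structure can be written down as data and checked before any rendering starts. This is a minimal sketch: the `Beat` class and `validate_beats` are hypothetical names, and the 45–50 second slot for the final beat is inferred from "Final 5 sec" following a reveal that ends at 45 seconds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Beat:
    name: str
    start: float  # seconds from the start of the video
    end: float    # seconds from the start of the video


def validate_beats(beats, min_total=35.0, max_total=55.0):
    """Check that beats run back to back from 0 and that the total
    length falls inside the target range. Returns the total length."""
    cursor = 0.0
    for beat in beats:
        if beat.start != cursor or beat.end <= beat.start:
            raise ValueError(f"beat {beat.name!r} is not contiguous")
        cursor = beat.end
    if not min_total <= cursor <= max_total:
        raise ValueError(f"total {cursor}s outside {min_total}-{max_total}s")
    return cursor


NARRATIVE_BURST = [
    Beat("hook", 0, 3),
    Beat("escalation", 3, 25),
    Beat("twist_or_reveal", 25, 45),
    Beat("cliffhanger", 45, 50),
]

if __name__ == "__main__":
    print(validate_beats(NARRATIVE_BURST))  # 50
```

Keeping the beat sheet as data means every episode in a series can be checked against the same template.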

Technical execution often includes:

- Multi-shot Sora sequences with consistent conditioning

- Kling for cinematic motion realism

- Runway for rapid iteration and style testing

Creators are now building scene stacks instead of single prompts:

- Scene 1: High-detail static

- Scene 2: Motion pass

- Scene 3: Reaction close-up

- Scene 4: Stylized payoff

The important shift: each shot is deliberately engineered for retention spikes.

Format C: 2–4 Minute YouTube AI Narratives

Long-form AI videos are back—but not as abstract art films.

Instead, high-performing formats include:

- Documentary-style AI reconstructions

- Fictional AI history timelines

- AI-generated “what if” simulations

In long-form, creators use:

- Lower CFG scales for realism

- Controlled denoising to avoid flicker

- Temporal consistency pipelines

- AI voice models with emotional parameter tuning

The difference between amateur and viral long-form content is motion coherence.

If your frames exhibit:

- Micro-flicker

- Texture crawling

- Facial deformation drift

retention drops dramatically.
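Micro-flicker in overall brightness can be given a rough number. The sketch below assumes frames are already decoded into flat lists of grayscale values, and `flicker_score` is a hypothetical name. It measures the second difference of per-frame brightness, so a smooth fade scores near zero while frame-to-frame oscillation scores high.

```python
from statistics import fmean


def flicker_score(frames):
    """Mean absolute second difference of per-frame average brightness.

    frames: sequence of flat grayscale pixel lists (assumed representation).
    """
    brightness = [fmean(frame) for frame in frames]
    if len(brightness) < 3:
        raise ValueError("need at least 3 frames")
    second_diffs = [
        brightness[i - 1] - 2 * brightness[i] + brightness[i + 1]
        for i in range(1, len(brightness) - 1)
    ]
    return fmean([abs(d) for d in second_diffs])


if __name__ == "__main__":
    smooth_fade = [[0, 0], [10, 10], [20, 20], [30, 30]]
    flickering = [[100], [110], [100], [110]]
    print(flicker_score(smooth_fade))  # 0.0
    print(flicker_score(flickering))   # 20.0
```

This only catches global brightness flicker. Texture crawling and facial drift are local effects and would need per-region or feature-based comparisons.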

Professionals now combine:

- Latent Consistency Models (LCM)

- Frame interpolation passes

- Post-generation stabilization

- Grain overlays to mask diffusion artifacts

Length alone doesn’t win. Stability does.
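A grain overlay is, at its simplest, a small amount of random noise added to each frame. Here is a minimal sketch on a flat list of grayscale values. The `add_grain` name, the Gaussian noise model, and the default strength are assumptions for illustration, not a recommended setting.

```python
import random


def add_grain(frame, strength=6.0, seed=None):
    """Return a copy of a grayscale frame with Gaussian noise added.

    frame: flat list of pixel values in 0-255 (assumed representation).
    strength: standard deviation of the noise, in pixel units.
    seed: optional seed so a given frame's grain is repeatable.
    """
    rng = random.Random(seed)
    noisy = []
    for pixel in frame:
        value = round(pixel + rng.gauss(0.0, strength))
        noisy.append(min(255, max(0, value)))  # clamp to the valid range
    return noisy


if __name__ == "__main__":
    flat_gray = [128] * 8
    # Vary the seed per frame so the grain pattern changes over time.
    for frame_index in range(3):
        print(add_grain(flat_gray, seed=1000 + frame_index))
```

The seed should change from frame to frame. Identical noise on every frame reads as a fixed pattern sitting on top of the video rather than as film grain.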

3. The Tools Powering 2026

The tools haven’t just improved—they’ve redefined workflow architecture.

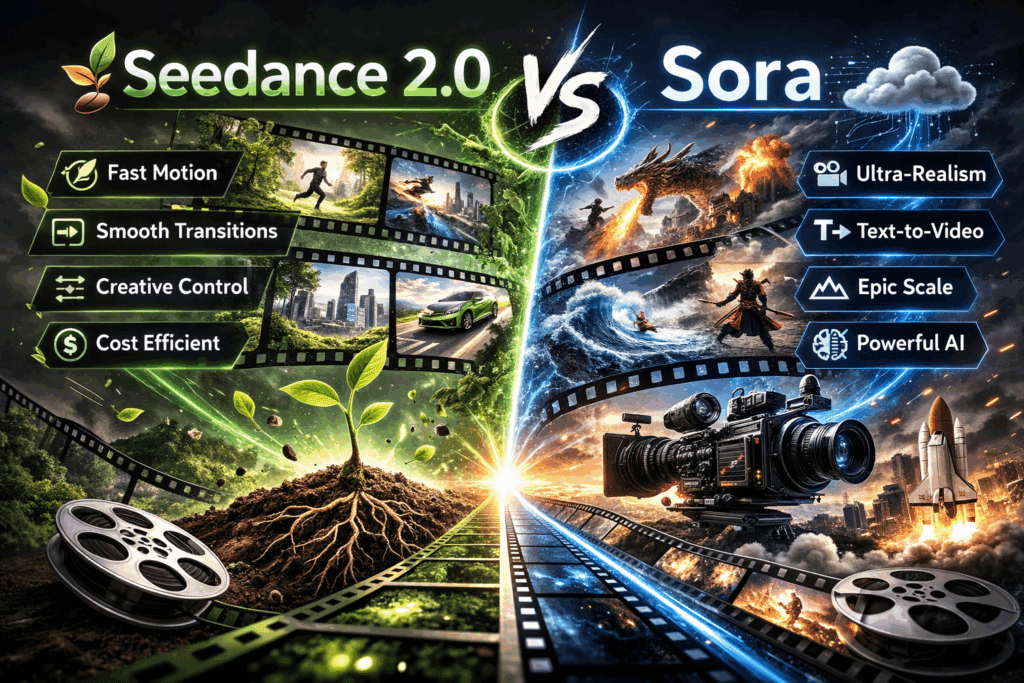

Sora: Narrative Control and World Cohesion

Sora dominates for:

- Multi-scene continuity

- Real-world physics consistency

- Cinematic framing

Its advantage lies in temporal understanding. Instead of stitching frames, it models motion across time. That reduces the need for heavy post-processing.

Best use cases:

- Episodic storytelling

- Dialogue-driven AI narratives

- Character arcs with location changes

Limitation: Iteration speed is slower than lightweight diffusion workflows.

Kling: High-Fidelity Motion Realism

Kling excels in:

- Cinematic camera movement

- Realistic human motion

- Natural environmental physics

Creators use Kling when realism is essential—especially for:

- Documentary simulations

- AI recreations of historical events

- Emotional close-ups

Its motion consistency makes it ideal for narrative bursts in the 35–55 sec range.

Runway: Rapid Trend Prototyping

Runway remains the fastest environment for:

- Concept testing

- Style iteration

- Short-form experimentation

Trend-focused YouTubers use Runway to validate:

- Hook strength

- Visual aesthetic

- Thumbnail frames

Once validated, they scale up production in Sora or Kling.

Think of Runway as the A/B testing lab.

ComfyUI: The Power User’s Viral Engine

While consumer tools dominate headlines, ComfyUI is the backend weapon of serious creators.

In 2026, advanced workflows include:

- Custom node graphs for seed locking

- IPAdapter for character preservation

- ControlNet depth + pose stacking

- Euler a vs DPM++ sampler testing for texture fidelity

- Batch rendering for episodic consistency

Creators building recurring characters often:

- Train LoRAs on character identity

- Lock base embeddings

- Use fixed seed groups for scene families

- Apply minimal denoise strength between shots

This creates what audiences perceive as “the same person,” even across radically different environments.

That perception builds brand equity.
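For ComfyUI users, seed locking and low denoise strength can be applied by patching a workflow before it is queued. The sketch below assumes a workflow exported in ComfyUI's API JSON format, where each node is a dict with `class_type` and `inputs`. The `lock_sampler_settings` helper, the node ids, and the prompt text are hypothetical, and the fragment is trimmed, so it would not run in ComfyUI as-is.

```python
import copy


def lock_sampler_settings(workflow, seed, denoise=None):
    """Return a copy of an API-format workflow with every KSampler node
    set to the given seed and, optionally, denoise strength."""
    patched = copy.deepcopy(workflow)  # leave the base workflow untouched
    for node in patched.values():
        if node.get("class_type") == "KSampler":
            inputs = node.setdefault("inputs", {})
            inputs["seed"] = seed
            if denoise is not None:
                inputs["denoise"] = denoise
    return patched


# Trimmed, hypothetical fragment: real exports also contain model, VAE,
# latent, and link entries that are omitted here.
BASE_WORKFLOW = {
    "3": {
        "class_type": "KSampler",
        "inputs": {
            "seed": 1,
            "steps": 20,
            "cfg": 5.0,
            "sampler_name": "euler_ancestral",
            "scheduler": "normal",
            "denoise": 1.0,
        },
    },
    "6": {
        "class_type": "CLIPTextEncode",
        "inputs": {"text": "mara on a rooftop at night"},
    },
}

if __name__ == "__main__":
    shot = lock_sampler_settings(BASE_WORKFLOW, seed=2026, denoise=0.35)
    print(shot["3"]["inputs"]["seed"], shot["3"]["inputs"]["denoise"])
```

Patching a copy keeps one base graph per character, with each shot differing only in the values you choose to vary.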

The Meta Trend: Engineering Retention, Not Just Visuals

The biggest shift in 2026 is psychological, not technical.

Viral AI video is no longer about:

- “Look what AI can do”

It’s about:

- “I need to see what happens next”

Winning creators combine:

- Character continuity

- Emotional escalation

- Retention-optimized timing

- Tool-specific strengths

- Post-processing stabilization

If you’re struggling to keep up with rapidly evolving trends, stop chasing aesthetics.

Instead:

- Pick one recurring character.

- Lock their identity technically (seed + reference workflow).

- Choose a repeatable 35–55 sec structure.

- Use Runway for prototyping.

- Use Kling or Sora for production.

- Use ComfyUI for character control at scale.

The creators dominating 2026 aren’t experimenting randomly.

They’re building systems.

And systems scale.

If you want to stay viral this year, the question isn’t “What’s trending?”

It’s:

What repeatable AI video engine are you building?

Frequently Asked Questions

Q: Are pure AI spectacle videos completely dead in 2026?

A: No, but they no longer sustain long-term growth. Spectacle works best as a hook or narrative amplifier. Channels relying solely on abstract diffusion visuals without recurring characters or story arcs see lower retention and weaker subscriber conversion.

Q: What is the optimal video length for AI-generated content right now?

A: The 35–55 second range currently performs best for short-form platforms because it allows for a structured emotional arc while maintaining high completion rates. For long-form YouTube, 2–4 minutes with strong narrative cohesion and motion stability is outperforming longer experimental formats.

Q: How do I maintain character consistency across AI-generated scenes?

A: Use seed locking, IPAdapter or reference-based conditioning, consistent VAE selection, and low denoise strength for scene transitions. In node-based tools like ComfyUI, combining LoRA-trained character models with fixed seed groups significantly improves cross-scene identity retention.

Q: Which AI video tool should trend-focused creators prioritize?

A: Use Runway for rapid testing and iteration, Kling for realistic motion and cinematic quality, Sora for narrative continuity and multi-scene storytelling, and ComfyUI for advanced character control and scalable production workflows.