Best Text to Video Local Model in 2025

Everyone searching for the best text to video local model wants the same result right now. One clean prompt. Long, smooth video. No credits. No paywalls. Full privacy on your own GPU.

YouTube keeps pushing “FREE and unlimited AI video” tutorials. Reddit threads chase a “completely free text to video generator” and hit the real limits fast, from VRAM errors and flicker to exports that crash on longer clips.

This guide breaks down what creators actually use in 2025, which local models hold up today, and how to match the right setup to your GPU, workflow and budget.

What should you know before chasing a “completely free” text to video model?

You need to set expectations before you search.

Video generation burns GPU memory, storage and power. Reddit replies to the “fully free text to video” question point out a simple truth: if a website offers uncapped video output with no payment, then ads or hidden limits cover the bill, or the quality sits far below current standards. Running models locally shifts that cost onto your own hardware instead.

To run modern text to video models at a decent speed, you need:

- A recent NVIDIA GPU, ideally with 8 GB of VRAM or more

- Enough SSD space for model weights, which range from several to tens of gigabytes per model

- Patience for first-time setup, drivers and workflow tweaks

Once this setup is in place, local models feel close to “free at the margin” for small creators, because each extra video costs electricity and time rather than new subscription fees.
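To put a rough number on “free at the margin”, the electricity cost of one clip is the GPU's power draw times generation time times your electricity price. The sketch below assumes the GPU draws a constant wattage for the whole generation and ignores the rest of the system; the wattage, duration and price are hypothetical placeholders, not measurements.

```python
def energy_cost_per_clip(gpu_watts: float, minutes_per_clip: float, price_per_kwh: float) -> float:
    """Approximate electricity cost of one generation.

    Assumption: the GPU draws gpu_watts for the whole run; CPU, cooling
    and idle draw are ignored.
    """
    kwh = (gpu_watts / 1000) * (minutes_per_clip / 60)  # kilowatts x hours
    return kwh * price_per_kwh


# Hypothetical figures: a 300 W card, 12 minutes per clip, 0.25 per kWh.
cost = energy_cost_per_clip(300, 12, 0.25)
print(f"about {cost:.3f} per clip in your local currency")
```

Even with generous assumptions the per-clip figure stays small, which is why the real cost of local generation sits in the hardware and your time.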

Which text to video local models stand out in 2025?

Several open source or locally deployable models now produce strong results. Many workflows wrap them inside ComfyUI so you run everything on your own GPU.

Wan 2.2 – strong general text to video for local rigs

Wan 2.2 from Wan-AI appears in nearly every “best open source video model” list. It ships in multiple sizes, including a 14B text to video model for high quality work and a 5B hybrid model that supports both text to video and image to video.

Strengths

- High-quality motion and scene consistency by open source standards

- Hybrid models that take text or images as input

- Official ComfyUI workflows and documentation

Limits

- Larger versions need strong hardware

- Long clips still require stitching and timing work

HunyuanVideo – cinematic focus from Tencent

HunyuanVideo from Tencent targets cinematic movement and rich detail. It features in multiple 2025 roundups of top text to video models for story content.

Strengths

- Smooth camera paths

- Good handling of people and environments

- Strong support inside ComfyUI workflows

Limits

- Heavy downloads and higher VRAM needs

- Some prompts still produce flicker in complex motion

Mochi and LTX-Video – lighter models for experiments

Mochi 1 and LTX-Video appear in ComfyUI tutorials as efficient options for creative tests.

Strengths

- Less VRAM pressure than huge models

- Good for stylised, abstract clips

- Helpful for users with single mid-range GPUs

Limits

- Less detail and realism than Wan 2.2 or HunyuanVideo

- Shorter ideal duration per clip

Local front end – ComfyUI as the real control layer

Most creators who search “best text to video local model” end up with ComfyUI at the core of their stack. It is an open source node-based interface that runs locally and connects to many models at once.

ComfyUI provides:

- Visual workflows for text to video, image to video and upscaling

- Support for models like Wan 2.2, HunyuanVideo, Mochi and more

- Space to tune batch size, frame count, tiling and VRAM usage

This front end is what turns raw models into a usable tool for daily work.
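Tiling is the idea behind several of those VRAM settings: instead of processing every frame at once, a workflow works on overlapping windows and blends the overlaps. The sketch below only computes such windows over frame indices; it illustrates the general technique with assumed tile and overlap sizes and is not ComfyUI's own tiling code.

```python
from typing import List, Tuple


def temporal_tiles(num_frames: int, tile: int, overlap: int) -> List[Tuple[int, int]]:
    """Split frame indices [0, num_frames) into overlapping (start, end) windows.

    Consecutive windows share `overlap` frames so a later blending step
    can hide the seams. The last window may be shorter than `tile`.
    """
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be >= 0 and smaller than tile")
    step = tile - overlap
    tiles = []
    start = 0
    while True:
        end = min(start + tile, num_frames)
        tiles.append((start, end))
        if end == num_frames:
            break
        start += step
    return tiles


# Hypothetical sizes: an 81-frame clip, 32-frame tiles, 8 frames of overlap.
print(temporal_tiles(81, 32, 8))  # [(0, 32), (24, 56), (48, 80), (72, 81)]
```

Smaller tiles lower peak memory but add more seams to blend, which is the trade-off those settings expose.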

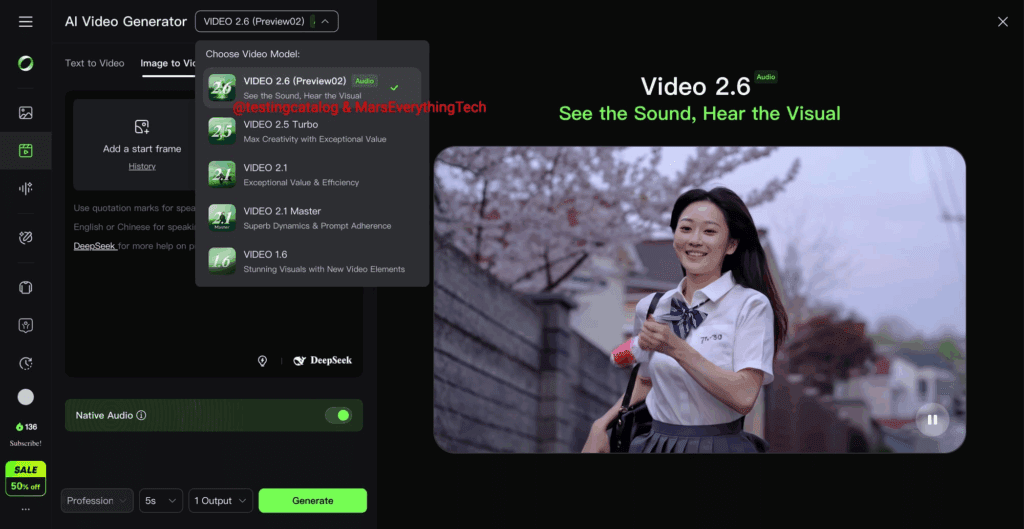

How do local text to video models compare with cloud tools like Runway and Pika?

Many users ask why they should bother with local models when Runway, Pika, Luma or Sora exist. The honest answer is that the two approaches serve different needs.

Here is a quick comparison that reflects current roles in 2025.

Tool comparison for AI video and text to video local models

| Tool / model | Best use case | Main strengths | Key limits |
|---|---|---|---|
| Wan 2.2 (local) | General creative clips on your own GPU | Strong open source text to video, solid motion consistency, ComfyUI workflows | Heavy setup, high VRAM for top quality, clip length still short |
| HunyuanVideo (local) | Cinematic shots and stylised stories | Rich detail, smooth camera moves, good people handling | Large downloads, higher GPU needs, flicker on tricky motion |
| Mochi / LTX-Video (local) | Stylised shorts on mid-range hardware | Lower VRAM needs, fun for experiments and edits | Less realism, lower resolution, more prompt tuning |
| Runway Gen-3 / Gen-4 (cloud) | Professional ads, branded content, 4K masters | Polished output, camera controls, commercial terms, easy web UI | Subscription cost, clip duration limits, data on external servers |
| Pika 1.0 (cloud) | Fast social clips and loops | Strong style options, quick experiments, easy sharing | Lower resolution than Runway, shorter clips, Discord workflow for many users |

Local models trade time and hardware for control and privacy. Cloud tools trade fees and data sharing for convenience and 4K polish.

How do you pick the best text to video local model for your setup?

The best choice depends on your goal, GPU and tolerance for friction.

Step 1 – define your main target

Decide which of these is your main target:

- Short social clips under 10 seconds

- Longer B-roll to mix inside edits

- Full scenes for narrative projects

- Tests for research or tech content

Most YouTube tutorials on this topic follow one of two paths: short clips with strong quality, or long clips stitched from many generations. Match your goal to one of those paths.

Step 2 – match model to hardware

Use rough guidance like this:

- 8 GB VRAM: lighter options such as Wan 2.2 5B or Mochi, lower frame counts, smaller resolutions

- 12–16 GB VRAM: Wan 2.2 larger models or HunyuanVideo at modest frame counts

- 24 GB or more: higher resolutions, more aggressive settings, longer sequences
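The tiers above can be turned into a quick self-check. This sketch assumes an NVIDIA driver with `nvidia-smi` on the PATH and reads the total memory of the first GPU only; the thresholds mirror the rough guidance above, and folding the 16–24 GB gap into the middle tier is an assumption of the sketch.

```python
import shutil
import subprocess
from typing import Optional


def detect_vram_gb() -> Optional[float]:
    """Total memory of the first NVIDIA GPU in GB, or None if nvidia-smi is missing."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    first_gpu_mib = float(result.stdout.strip().splitlines()[0])
    # nvidia-smi reports MiB; rounding absorbs cards that report slightly under 8/12/24 GiB
    return float(round(first_gpu_mib / 1024))


def suggest_setup(vram_gb: float) -> str:
    """Map available VRAM to the rough tiers in this guide (guidance, not hard limits)."""
    if vram_gb >= 24:
        return "higher resolutions, more aggressive settings, longer sequences"
    if vram_gb >= 12:  # assumption: 16-24 GB cards are treated like the 12-16 GB tier
        return "Wan 2.2 larger models or HunyuanVideo at modest frame counts"
    if vram_gb >= 8:
        return "Wan 2.2 5B or Mochi, lower frame counts, smaller resolutions"
    return "below the 8 GB starting point suggested in this guide"


if __name__ == "__main__":
    vram = detect_vram_gb()
    if vram is None:
        print("No NVIDIA GPU detected via nvidia-smi")
    else:
        print(f"{vram:.0f} GB VRAM: {suggest_setup(vram)}")
```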

Reddit feedback on tools like the SeedVR2 video upscaler shows that long videos strain VRAM and system RAM when workflows try to hold entire clips in memory. Proper tiling, batch settings and caching reduce this pain, yet long-form text to video still needs careful planning.
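A back-of-the-envelope calculation shows why holding a whole clip in memory hurts. This sketch counts only raw decoded frames, assuming 1280×720 RGB stored as 32-bit floats at 24 fps; real workflows also hold model weights, latents and activations, so treat the numbers as a lower bound rather than a VRAM requirement.

```python
def frames_memory_gib(num_frames: int, width: int, height: int,
                      channels: int = 3, bytes_per_value: int = 4) -> float:
    """Memory needed for raw decoded frames only (float32 RGB by default), in GiB."""
    return num_frames * width * height * channels * bytes_per_value / 1024**3


# Assumed clip: 60 seconds at 24 fps, 1280x720.
fps, seconds = 24, 60
whole_clip = frames_memory_gib(fps * seconds, 1280, 720)
one_batch = frames_memory_gib(16, 1280, 720)  # hypothetical 16-frame batch
print(f"whole clip: {whole_clip:.1f} GiB, one 16-frame batch: {one_batch:.2f} GiB")
# whole clip: 14.8 GiB, one 16-frame batch: 0.16 GiB
```

Processing in batches keeps the working set roughly constant no matter how long the clip gets, which is what tiling and batch settings exploit.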

Step 3 – accept that “free and unlimited” has conditions

Local models feel free after setup, yet hardware, time and electricity place real limits on them. Many open source models also ship checkpoints under a mix of licenses, so commercial use requires checking each model's terms.

Use this mental rule.

Subscriptions cover someone else’s GPU. Local models lean on yours. Decide which bill fits your situation better.
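The “which bill fits” question reduces to simple arithmetic: divide the hardware outlay by what you save each month by not paying a subscription, after subtracting electricity. All prices in the sketch are hypothetical, and it ignores resale value, your time and differences in output quality.

```python
import math


def break_even_months(hardware_cost: float, monthly_subscription: float,
                      monthly_power_cost: float) -> float:
    """Months before a one-off hardware purchase beats a subscription.

    Returns math.inf when running locally costs as much per month as the
    subscription, because the hardware never pays for itself.
    """
    monthly_saving = monthly_subscription - monthly_power_cost
    if monthly_saving <= 0:
        return math.inf
    return hardware_cost / monthly_saving


# Hypothetical figures: 1,600 for a GPU upgrade, a 95/month plan, 15/month in electricity.
print(break_even_months(1600, 95, 15))  # 20.0
```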

So what is the best text to video local model in 2025?

There is no single winner for every user, yet trends point to a simple split.

- Wan 2.2 looks like the default open source choice for creators with a strong GPU who want balanced text to video quality.

- HunyuanVideo fits users who focus on cinematic shots and rich motion.

- Mochi, LTX-Video and similar models suit mid-range cards and stylised work.

For many people the real “best text to video local model” is a ComfyUI setup that holds at least two of these models, plus a workflow for upscaling and light post work. That stack covers more prompts, more budgets and more projects than any single checkpoint on its own.

Conclusion

If you want full control, privacy and the freedom to run long generation jobs without credit limits, a local setup still makes sense. The best text to video local model for you is the one your GPU can handle, that fits your workflow and that you understand well enough to troubleshoot.

If you care more about speed and ease than about owning the hardware, cloud tools still win for now. Use local models for experiments and longer films, and use hosted tools when deadlines and simplicity matter more than control. That mix keeps you flexible as the tech changes.

Frequently Asked Questions

Is a completely free and unlimited text to video generator realistic today?

Not really. Video generation costs GPU time and energy. Most online tools limit credits or resolution so they stay sustainable. Local models feel nearly free once you own the hardware, but that purchase is still a real cost.

Which text to video local model needs the least VRAM?

Lighter options such as Wan 2.2 5B, Mochi and some LTX-Video setups work on 8 GB cards, as long as you keep frame counts and resolution modest. Larger models and 4K clips require more memory.

Are Runway and Pika still worth it if I run local models?

Yes. Runway Gen-3 or Gen-4 and Pika 1.0 deliver polished, fast results for many commercial jobs and social clips. Local models excel at control, privacy and cost over time, while cloud tools shine for speed and client-ready output. Many creators mix both.

How long a video can local text to video models handle today?

Most workflows still target clips under 5–10 seconds per generation. Longer projects come from stitching many clips inside editors such as DaVinci Resolve, Premiere Pro or Final Cut. Research projects push longer runs, yet VRAM and time requirements stay high.
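When stitching, each clip after the first only adds new footage equal to its length minus any overlap you keep for crossfades. This sketch counts how many generations a target duration needs; the 5-second clip length and 1-second overlap are assumptions for illustration.

```python
import math


def plan_clips(target_seconds: float, clip_seconds: float, overlap_seconds: float = 0.0) -> int:
    """Number of generated clips needed to cover target_seconds once stitched.

    The first clip contributes its full length; every later clip contributes
    clip_seconds - overlap_seconds, since neighbours overlap for a crossfade.
    """
    if overlap_seconds >= clip_seconds:
        raise ValueError("overlap must be shorter than a clip")
    if target_seconds <= clip_seconds:
        return 1
    step = clip_seconds - overlap_seconds
    return 1 + math.ceil((target_seconds - clip_seconds) / step)


print(plan_clips(60, 5))     # 12 clips with hard cuts
print(plan_clips(60, 5, 1))  # 15 clips with 1-second crossfades
```

Overlaps smooth the transitions but cost extra generations, so a one-minute piece can need noticeably more GPU time than the raw duration suggests.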

What should I focus on first as a beginner with local models?

Start small. Install ComfyUI, run an official Wan 2.2 or similar workflow on short clips and note how each setting changes the result. Once that feels stable, extend duration, resolution and scene complexity. Change only one variable at a time so you can tell which setting caused which effect.
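A simple way to enforce one variable at a time is to generate the run list from a baseline. The setting names and values below are hypothetical placeholders rather than real ComfyUI node fields, and the seed stays fixed so differences come from the changed setting rather than random noise.

```python
from typing import Any, Dict, List


def one_at_a_time(baseline: Dict[str, Any], variations: Dict[str, List[Any]]) -> List[Dict[str, Any]]:
    """Build run configs that each differ from the baseline in exactly one setting."""
    runs = [dict(baseline)]
    for key, values in variations.items():
        for value in values:
            if value == baseline[key]:
                continue  # skip duplicates of the baseline run
            run = dict(baseline)
            run[key] = value
            runs.append(run)
    return runs


# Hypothetical setting names; map them onto the matching fields in your own workflow.
baseline = {"frames": 33, "width": 832, "height": 480, "steps": 20, "seed": 42}
for run in one_at_a_time(baseline, {"frames": [33, 49, 81], "steps": [30]}):
    print(run)
```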