5 Best Text to Video Local Models to Create Unlimited Content for Free

Text-to-video tools now help creators generate long clips without high cost. Local models make this even easier because they run on your own system or in a private cloud. You get control and privacy, with no per-clip fees or API quotas, so your hardware is the only limit on output. This guide covers the best text-to-video model options today, which of them actually run locally, and how to use them to create endless content, AI ads, VFX shots, and long videos at no per-clip cost.

What Is the Best Text to Video Local Model Today?

The best text-to-video local models focus on motion stability, consistency across long scenes, clean rendering, and compute efficiency. Open-source models like Wan 2.2 and Hunyuan Video push new standards with better tracking and improved frame-to-frame coherence, and you can run them on your own GPU. Kling 2.6 and Nano Banana, including Nano Banana’s “God Mode” upgrade, are hosted services rather than local models, but they are popular with creators and appear in the list below for comparison.

Why Local Models Matter for Creators

Local models keep your prompts and footage private, avoid API rate limits and per-clip fees, and can be scripted for automation. This makes them useful for large content batches, VFX tests, and AI ads.
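Because a local model is just a program on your machine, a content batch can be planned as a plain list of jobs. The sketch below expands a list of prompts into jobs with fixed seeds and output names, then writes them as JSON Lines that a render script could work through. The job fields, seed scheme, and file names are hypothetical and are not part of any model's own tooling.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Job:
    prompt: str
    seed: int    # fixed seed so the same clip can be re-rendered later
    output: str  # hypothetical naming scheme: clip_<prompt#>_v<variation#>.mp4


def plan_batch(prompts: list[str], variations: int = 1, base_seed: int = 1000) -> list[Job]:
    """Expand each prompt into `variations` jobs, each with its own deterministic seed."""
    jobs = []
    for i, prompt in enumerate(prompts):
        for v in range(variations):
            seed = base_seed + i * variations + v
            jobs.append(Job(prompt, seed, f"clip_{i:03d}_v{v}.mp4"))
    return jobs


def to_jsonl(jobs: list[Job]) -> str:
    """Serialize jobs as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(asdict(job)) + "\n" for job in jobs)
```

For example, `plan_batch(["product spin on a marble table"], variations=3)` yields three jobs for the same prompt with seeds 1000, 1001, and 1002, so you can render several takes and keep the best one.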

Benefits for AI Ads and VFX Workflows

Local models let you generate quick VFX shots: short transitions, motion clips, and product scenes, all rendered on your own hardware. This removes the need for large studio setups.

The 5 Best Local Models for Text to Video

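These models deliver strong results. Wan 2.2, Hunyuan Video, and Mochi 1 are open-source and support unlimited use in local or self-hosted setups. Kling 2.6 and Nano Banana are hosted services with their own usage limits.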

1. Kling 2.6 for Long Video Motion

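Kling 2.6 creates smooth, stable scenes with strong camera motion and handles longer shots well. This helps when you need 10- to 20-second clips without visible breaks. Kling is a closed model from Kuaishou, so you use it through Kling's web app or API rather than on your own GPU.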

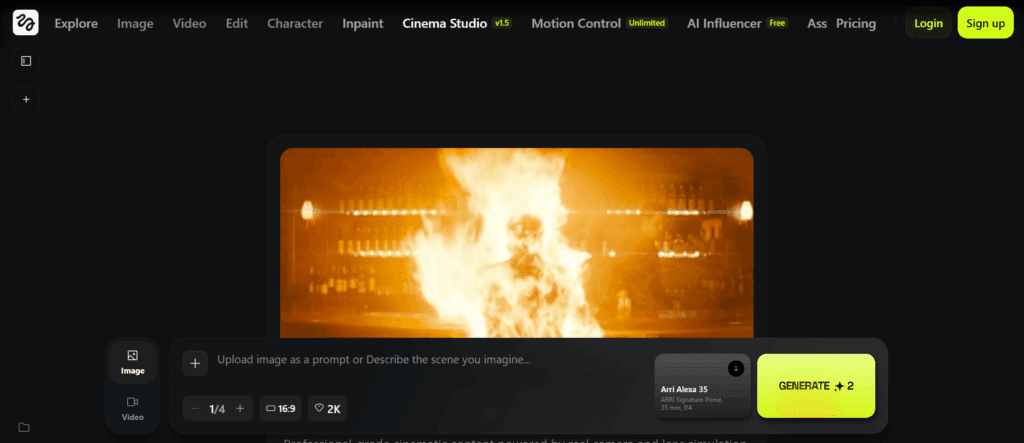

2. Nano Banana Video Model (“God Mode”)

Nano Banana’s upgraded video engine handles clean motion and sharp detail, and it is built for high-volume output from simple prompts. Nano Banana is Google's model and runs on Google's servers, not locally, so the number of clips you can make depends on your plan's limits.

3. Hunyuan Video for Creative Control

Hunyuan Video, Tencent's open-source model, follows text prompts closely and blends style and movement well. It is one of the most flexible open-source systems for creative videos.

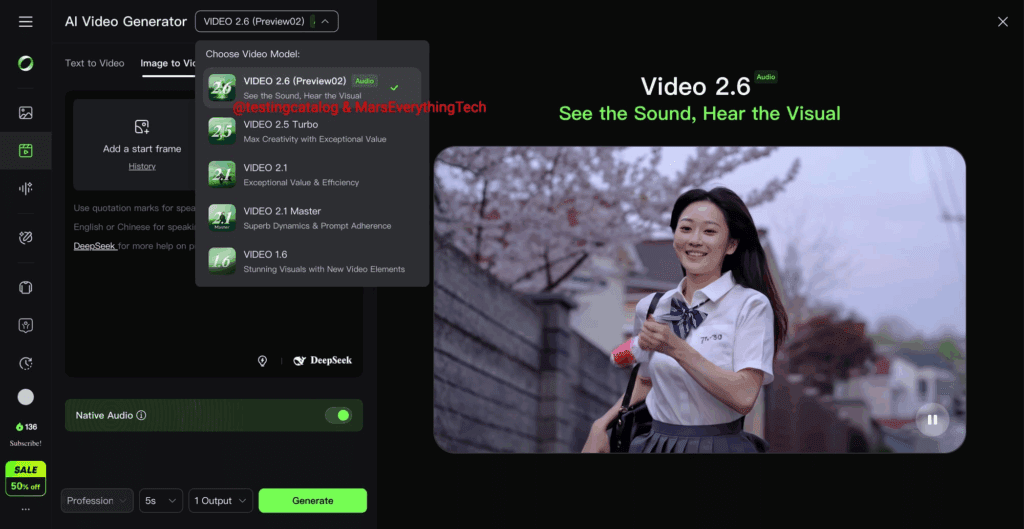

4. Wan 2.2 T2V for Realistic Faces

Wan 2.2 is strong on realism. It keeps faces stable and consistent from frame to frame, which makes it a great fit for UGC ads, talking scenes, and product demos.

5. Mochi 1 for VFX in Seconds

Mochi 1, Genmo's open-source model, produces short clips with smooth motion and clean transitions. It is good for motion tests and small VFX shots, so you can try out scenes and effects without a complex pipeline.
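Of the five, Wan 2.2, Hunyuan Video, and Mochi 1 publish open weights, so they can be driven from a script. Below is a minimal sketch, assuming the Hugging Face `diffusers` library (plus `torch` and `accelerate`), a CUDA GPU, and the repository IDs, frame rates, and frame-count rule listed in `MODELS`. These values are assumptions, so check each model card before relying on them. Kling 2.6 and Nano Banana are left out because they run on their vendors' servers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSpec:
    repo_id: str     # Hugging Face repo ID (assumed; verify on the model card)
    fps: int         # playback rate the model targets (assumed)
    frame_step: int  # temporal compression of the model's VAE (assumed)


# Hypothetical registry of the open-weight models only.
MODELS = {
    "wan2.2-5b": ModelSpec("Wan-AI/Wan2.2-TI2V-5B-Diffusers", fps=24, frame_step=4),
    "hunyuan": ModelSpec("hunyuanvideo-community/HunyuanVideo", fps=24, frame_step=4),
    "mochi": ModelSpec("genmo/mochi-1-preview", fps=30, frame_step=6),
}


def num_frames_for(seconds: float, spec: ModelSpec) -> int:
    """Round seconds * fps to the nearest frame count of the form frame_step * k + 1."""
    raw = seconds * spec.fps
    k = max(1, round((raw - 1) / spec.frame_step))
    return spec.frame_step * k + 1


def render(model_key: str, prompt: str, seconds: float, out_path: str, seed: int = 0) -> str:
    # Heavy imports live inside the function so the helpers above work without a GPU.
    import torch
    from diffusers import DiffusionPipeline
    from diffusers.utils import export_to_video

    spec = MODELS[model_key]
    pipe = DiffusionPipeline.from_pretrained(spec.repo_id, torch_dtype=torch.bfloat16)
    pipe.enable_model_cpu_offload()  # lowers VRAM use at the cost of speed
    generator = torch.Generator(device="cpu").manual_seed(seed)
    frames = pipe(
        prompt=prompt,
        num_frames=num_frames_for(seconds, spec),
        generator=generator,
    ).frames[0]
    export_to_video(frames, out_path, fps=spec.fps)
    return out_path
```

For example, a 5-second Wan 2.2 clip at 24 fps works out to 121 frames. `DiffusionPipeline` picks the right pipeline class from each repository's config, which keeps one code path for all three models. Resolution and step count are left at each pipeline's defaults here.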

How to Make Long AI Videos for Free

You can make long videos by splitting your script into short scenes, generating each one with a model that keeps motion stable, and joining the clips at the end.

Step 1. Use a Model With Scene Memory (Kling 2.6 or Hunyuan)

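These models keep objects and people stable within a shot and reduce jitter and flicker. Each generation starts fresh, so repeat the same character and style description in every scene's prompt to keep the clips consistent with each other.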

Step 2. Split Your Script Into Short Scenes

Generate short clips of 3 to 5 seconds. This improves render quality and lets you control each part of the story.
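A simple way to do this split is one scene per sentence, with each scene's length estimated from its word count and kept inside the 3 to 5 second window. This is a hypothetical helper. The 2.5 words-per-second rate (about 150 spoken words per minute) and the shared `anchor` text are assumptions you would tune for your own script.

```python
import re
from dataclasses import dataclass


@dataclass
class Scene:
    index: int
    prompt: str
    seconds: float


def split_script(script: str, anchor: str, min_s: float = 3.0, max_s: float = 5.0,
                 words_per_second: float = 2.5) -> list[Scene]:
    """One scene per sentence, each duration clamped to [min_s, max_s]."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", script.strip()) if s.strip()]
    scenes = []
    for i, sentence in enumerate(sentences):
        # Estimate how long the line takes to say, then clamp it to the 3-5 s window.
        estimate = len(sentence.split()) / words_per_second
        seconds = min(max_s, max(min_s, estimate))
        # The shared anchor (character and style) is repeated in every scene's prompt.
        scenes.append(Scene(i, f"{anchor}, {sentence}", round(seconds, 2)))
    return scenes
```

Sentences longer than about 12 words are simply capped at 5 seconds here. A fuller version might split them into two scenes instead.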

Step 3. Stitch and Upscale With Free Tools

ffmpeg, a free command-line tool, can merge the clips into one file and upscale the result.
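Here is a minimal sketch of the merge step in Python, assuming `ffmpeg` is installed and on your PATH. It uses ffmpeg's concat demuxer, which reads a text file listing the clips in order. The helper names and the Lanczos upscale option are illustrative choices, not the only way to do it.

```python
import subprocess
from pathlib import Path
from typing import Optional


def concat_list(clips: list[str]) -> str:
    """Text for ffmpeg's concat demuxer: one file '<path>' line per clip, in order."""
    lines = []
    for clip in clips:
        # Inside single quotes, a literal ' has to be written as '\''
        escaped = clip.replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    return "\n".join(lines) + "\n"


def stitch_command(list_file: str, output: str, upscale_width: Optional[int] = None) -> list[str]:
    cmd = ["ffmpeg", "-y", "-f", "concat", "-safe", "0", "-i", list_file]
    if upscale_width:
        # Applying a filter forces a re-encode; -2 keeps the aspect ratio with an even height.
        cmd += ["-vf", f"scale={upscale_width}:-2:flags=lanczos", "-c:v", "libx264", "-crf", "18"]
    else:
        # Stream copy is fast and lossless but needs clips with matching codec settings.
        cmd += ["-c", "copy"]
    return cmd + [output]


def stitch(clips: list[str], output: str = "long_video.mp4",
           upscale_width: Optional[int] = None) -> str:
    list_file = Path("clips.txt")
    list_file.write_text(concat_list(clips), encoding="utf-8")
    subprocess.run(stitch_command(str(list_file), output, upscale_width), check=True)
    return output
```

Calling `stitch(["scene_00.mp4", "scene_01.mp4"], upscale_width=1920)` would join two clips and scale them to 1920 pixels wide. A Lanczos resize only enlarges the existing pixels. It does not add new detail the way AI upscalers do.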

VidAU helps you clean the output with resizing, captions, and audio.

The Only 5 Prompts You Need to Create Any Text to Video Clip

These five prompt types work with the major models covered here and help you control the final video.

Action Prompt

Describe the main movement. Example: “walking forward with steady steps.”

Camera Prompt

Guide how the shot moves. Example: “slow zoom in.”

Lighting Prompt

Improve clarity. Example: “soft daylight on the face.”

Environment Prompt

Set the location. Example: “urban street at night.”

Motion Path Prompt

Control direction. Example: “camera moves left while subject turns right.”
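One way to keep the five prompt types together is to store them as fields and join them into a single prompt string. This is a hypothetical helper. The field order (action, environment, lighting, camera, motion path) is a judgment call, not a rule that any of these models enforces.

```python
from dataclasses import dataclass


@dataclass
class ShotPrompt:
    action: str
    camera: str = ""
    lighting: str = ""
    environment: str = ""
    motion_path: str = ""

    def render(self) -> str:
        """Join the non-empty parts: what happens, where, how it is lit, how the camera moves."""
        parts = [self.action, self.environment, self.lighting, self.camera, self.motion_path]
        return ", ".join(p.strip() for p in parts if p.strip())
```

For example, `ShotPrompt("walking forward with steady steps", camera="slow zoom in", environment="urban street at night").render()` returns `"walking forward with steady steps, urban street at night, slow zoom in"`. Empty fields are skipped, so you only fill in the parts a shot needs.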

Comparison Table of the Top Local Video Models

Here is a simple view of how the top models differ:

| Model | Strength | Speed | Best Use | Runs Locally |
|---|---|---|---|---|
| Kling 2.6 | Long stable motion | Medium | Long videos, ads | No (hosted) |
| Nano Banana Video | High-volume output | Fast | Batch content | No (hosted) |
| Hunyuan Video | Strong text control | Medium | Creative scenes | Yes |
| Wan 2.2 T2V | Realistic faces | Fast | UGC + talking clips | Yes |
| Mochi 1 | VFX in seconds | Very fast | Short shots, tests | Yes |

This table helps you choose a model based on your workflow.

How VidAU Helps You Turn Model Outputs Into Full Videos

Local models generate clips, but VidAU helps polish them.

Resize and Format

Make videos fit TikTok, Instagram, or YouTube.
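If you prefer to handle resizing locally, ffmpeg can scale a clip to fit a platform's frame and pad the rest. Below is a sketch, assuming ffmpeg is installed. The preset sizes are common targets (1080x1920 for vertical 9:16, 1080x1350 for a 4:5 Instagram feed post, 1920x1080 for landscape YouTube), not official requirements, so check each platform's current specs.

```python
PRESETS = {
    "vertical_9x16": (1080, 1920),  # TikTok, Reels, Shorts
    "instagram_4x5": (1080, 1350),  # Instagram feed portrait
    "youtube_16x9": (1920, 1080),   # standard YouTube landscape
}


def fit_command(src: str, dst: str, preset: str) -> list[str]:
    w, h = PRESETS[preset]
    # Scale to fit inside w x h without distortion, then center it on a black canvas.
    vf = (f"scale={w}:{h}:force_original_aspect_ratio=decrease,"
          f"pad={w}:{h}:(ow-iw)/2:(oh-ih)/2,setsar=1")
    return ["ffmpeg", "-y", "-i", src, "-vf", vf,
            "-c:v", "libx264", "-crf", "20", "-c:a", "copy", dst]
```

Pass the returned list to `subprocess.run(..., check=True)` to run it. Padding keeps the whole frame visible. Cropping to fill the frame is the other common choice.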

Add Captions and Audio

Increase engagement with clear, synced text and strong sound.
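Captions are usually delivered as an SRT subtitle file. An SRT file holds numbered cues, and each cue has an `HH:MM:SS,mmm --> HH:MM:SS,mmm` time range. Below is a small sketch that builds one from (caption, duration) pairs, such as the per-scene durations of your clips. The function names are hypothetical.

```python
def to_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    ms = round(seconds * 1000)
    h, ms = divmod(ms, 3_600_000)
    m, ms = divmod(ms, 60_000)
    s, ms = divmod(ms, 1000)
    return f"{h:02d}:{m:02d}:{s:02d},{ms:03d}"


def build_srt(captions: list[tuple[str, float]]) -> str:
    """Lay captions end to end: each cue starts where the previous one ended."""
    blocks, start = [], 0.0
    for i, (text, duration) in enumerate(captions, start=1):
        end = start + duration
        blocks.append(f"{i}\n{to_timestamp(start)} --> {to_timestamp(end)}\n{text}\n")
        start = end
    return "\n".join(blocks)
```

If your ffmpeg build includes libass, ffmpeg's `subtitles` filter can burn the resulting file into the video.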

Clean Up Visual Errors

Remove flicker or color issues before posting.
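For frame-to-frame brightness flicker, ffmpeg's `deflicker` filter is one local option. It smooths luminance by comparing each frame with the average of a small window of frames. Below is a sketch, assuming ffmpeg is installed. The window size is a starting point to tune by eye, and this filter does not fix structural artifacts such as warping hands or morphing objects.

```python
def deflicker_command(src: str, dst: str, window: int = 5) -> list[str]:
    # mode=am: correct each frame toward the arithmetic mean brightness of the window.
    vf = f"deflicker=size={window}:mode=am"
    return ["ffmpeg", "-y", "-i", src, "-vf", vf,
            "-c:v", "libx264", "-crf", "18", "-c:a", "copy", dst]
```

Pass the returned list to `subprocess.run(..., check=True)`. A larger window smooths more but can lag behind real lighting changes in the shot.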

Conclusion

Text-to-video models now deliver stable, detailed clips without high cost. Kling 2.6 brings long-scene motion, and Nano Banana supports high-volume output, though both run as hosted services. Wan 2.2, Hunyuan Video, and Mochi 1 run locally and help with realism, creative control, and short VFX shots. Use the five core prompt types to shape each clip. Then use VidAU to clean, resize, and prepare your video for posting. With this workflow, you can turn simple text into as much content as your hardware allows.

FAQs

1. Which local text to video model is best for long videos?

Kling 2.6 and Hunyuan Video work best for long clips. They keep details consistent and handle stable motion across many frames. Only Hunyuan Video runs locally; Kling 2.6 is a hosted service.

2. Can Nano Banana really make unlimited videos?

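Not without limits. The “God Mode” upgrade is built for high-volume generation, which helps creators who need many clips per day. However, Nano Banana runs on Google's servers, so your plan's quotas and usage policies still apply.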

3. What model is best for realistic faces?

Wan 2.2 is the strongest option. It stabilizes facial features and produces natural skin detail in video frames.

4. Do local models cost money to run?

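The open-source models (Wan 2.2, Hunyuan Video, and Mochi 1) are free to download, but you need enough compute: a local GPU with plenty of VRAM, or a low-cost rented cloud GPU. Kling 2.6 and Nano Banana are hosted services with their own pricing and limits.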

5. How does VidAU fit into a local video workflow?

VidAU handles the final steps. It adds captions, audio, and formatting. This makes your clips ready for ads or social platforms.