Top 5 AI Image-to-Video Generators for Experimental Design Creatives

If you run fast, careful tests, AI tools stop being toys and start paying off. This guide covers five image-to-video platforms that help experimental design teams move from idea to proof.

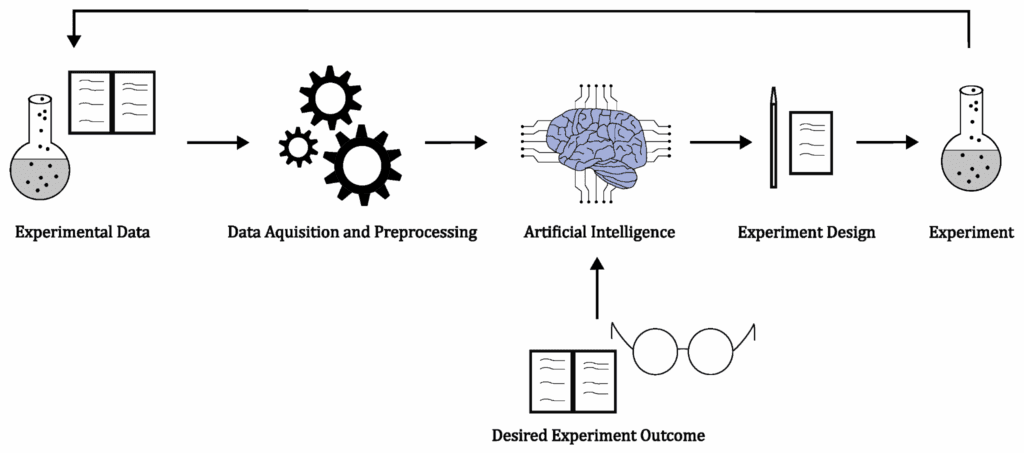

What Makes An AI Image-To-Video Tool Right For Experimental Design?

Choose a tool that speeds up tests and stays stable during rapid loops. In experimental design work, you need repeatable outputs, clean logs, seed control, and easy versioning. Clear licenses also matter when you ship paid work.

Which Five Tools Stand Out Right Now?

These five tools are already used in creative tests. Use A/B testing to compare them on your own shots and deadlines.

Genmo — Open Video Generation, Quick Motion

Genmo’s Mochi-1 model focuses on fluid motion and strong prompt adherence, which helps experimental design creatives see cause and effect in short runs. Genmo’s open approach to video generation plays well with custom tooling and reproducible tests.

Krea — Generate, Restyle, Upscale, And Lip-Sync

Krea makes it simple to go from idea to video, then enhance, restyle, or lip-sync. That clarity helps teams run tight loops without wrestling with the UI. It’s a practical pick when producers need neat exports and quick fixes during reviews.

Stable Video Diffusion — Research-Grade Image-To-Video

Stable Video Diffusion (SVD) turns a single frame into short clips with controllable frame counts and rates. That control lets experimental design teams test one factor at a time and compare motion quality across runs.
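A minimal sketch of that one-factor control, assuming the Hugging Face diffusers `StableVideoDiffusionPipeline`, whose call accepts `num_frames` and `fps` keyword arguments. The baseline values and seed are illustrative assumptions, and the pipeline call itself is left as a comment so the snippet runs without a GPU or model download.

```python
from typing import Any

# Illustrative baseline (assumed values). num_frames and fps are keyword
# arguments accepted by diffusers' StableVideoDiffusionPipeline.
BASELINE: dict[str, Any] = {"num_frames": 14, "fps": 7}
SEED = 1234  # the same seed is reused for every run in the sweep


def frame_count_sweep(values: list[int]) -> list[dict[str, Any]]:
    """Return one kwargs dict per frame count, holding fps constant."""
    return [{**BASELINE, "num_frames": n} for n in values]


for kwargs in frame_count_sweep([14, 25]):
    # With a loaded pipeline you could run, for example:
    #   generator = torch.manual_seed(SEED)
    #   frames = pipe(image, generator=generator, **kwargs).frames[0]
    print(SEED, kwargs)
```

Because every dict shares the same `fps` and seed, a difference between the resulting clips can be traced to the frame count alone.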

RunDiffusion — Managed Cloud Workspaces

RunDiffusion gives you ready cloud workspaces for diffusion tools, so teams can run heavier jobs without local setup. For producers, this means clean handoffs, tracked sessions, and fewer “it works on my machine” delays that break test cycles.

ComfyUI — Node-Based Control For Power Users

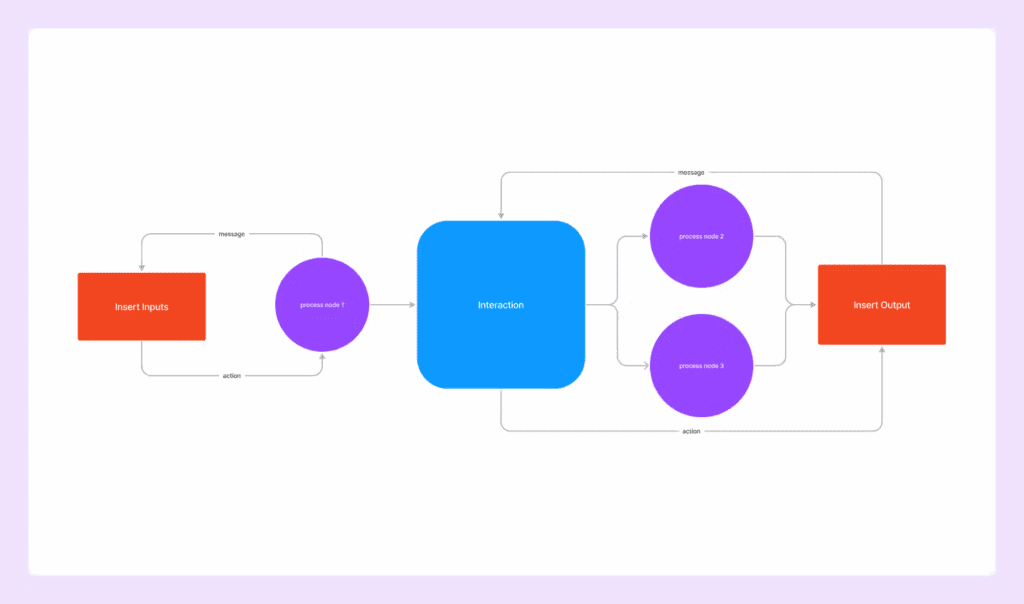

ComfyUI is a modular, node-based engine that lets you build and reuse precise pipelines. That structure is ideal when experimental design needs strict control, labeled inputs, and repeatable graphs across versions.

A/B Testing Checklist (Fast, Fair, Repeatable)

Keep A/B testing honest with short clips, fixed seeds, one variable per run, and side-by-side exports. Save the settings in each filename so your editor can confirm the winning configuration later.
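One way to enforce "one variable per run" before you render is a small check that compares a baseline config with a variant. This is a hypothetical helper, not part of any tool above; the setting names (`seed`, `denoise`, `frames`) are placeholders for whatever your tool exposes.

```python
def changed_settings(baseline: dict, variant: dict) -> set:
    """Return the names of settings whose values differ between two runs."""
    keys = baseline.keys() | variant.keys()
    return {k for k in keys if baseline.get(k) != variant.get(k)}


def is_fair_pair(baseline: dict, variant: dict) -> bool:
    """True when both runs share a seed and exactly one other setting differs."""
    diff = changed_settings(baseline, variant)
    return "seed" not in diff and len(diff) == 1


# Placeholder settings; rename them to match your tool.
a = {"seed": 1234, "denoise": 40, "frames": 24}
b = {**a, "denoise": 50}                # one change: a fair comparison
c = {**a, "denoise": 50, "frames": 48}  # two changes: the cause is hidden
print(is_fair_pair(a, b), is_fair_pair(a, c))  # True False
```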

How Do These Tools Compare At A Glance?

This table summarizes the key trade-offs for experimental design teams.

| Tool | Best for | Strengths that aid tests | Watch-outs | Licensing/Access |
| --- | --- | --- | --- | --- |
| Genmo | Fast motion studies | Fluid motion, prompt adherence, handy “open video” ethos | Rapid model changes can shift looks between runs | Research preview / open model info on site |
| Krea | On-deadline polish | Upscale, restyle, lipsync in one place; low UI friction | Power features may require paid tier | Freemium platform with enhancements |
| Stable Video Diffusion | Controlled img-to-video baselines | Frame count and FPS control; research-grade docs | Short clip lengths; research-use limits for some weights | Model cards and license notes |
| RunDiffusion | Heavy jobs in the cloud | Ready workspaces; fast GPU spin-up; fewer local issues | Ongoing instance cost; preset stacks | Hosted workspaces and guides |
| ComfyUI | Repeatable pipelines | Node graphs, reusable workflows, community nodes | Steeper learning curve; version drift | Open-source; rich community docs |

How Should You Design Your First Controlled Video Experiment?

First, start simple and control one factor per run. Change only one setting (seed, denoise, frame count, or guidance) and log it. This approach fits experimental design best because you can link results to a single cause, fast.
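The same rule can drive run generation. A minimal sketch, assuming settings live in a plain dict: the helper copies a baseline and varies one named setting, so every generated run differs from the baseline in exactly one place. Setting names and values are illustrative.

```python
from typing import Any, Iterator


def one_factor_sweep(baseline: dict[str, Any], name: str,
                     values: list[Any]) -> Iterator[dict[str, Any]]:
    """Yield run configs that copy the baseline and change only `name`."""
    if name not in baseline:
        raise KeyError(f"{name!r} is not a baseline setting")
    for value in values:
        yield {**baseline, name: value}  # the baseline dict itself is untouched


baseline = {"seed": 1234, "denoise": 30, "frames": 24, "guidance": 3.5}
for run in one_factor_sweep(baseline, "denoise", [30, 40, 50]):
    print(run)  # log each config next to its clip
```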

What Workflow Helps Experimental Design Creatives Move Faster?

Next, use short cycles, label runs, and log settings. Batch a small “truth set” of three shots (face, full-body, product). Then standardize prompts, keep seeds fixed, and render short clips first. This keeps your experimental design clean and costs low.

Run-label System (Quick Template)

- ShotID-Model-Preset-Seed-Var-v001.mp4

- Example: FD01-SVD-img2vid-s1234-denoise40-v003.mp4
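A sketch of that template as code, assuming no field contains a hyphen and the seed carries an `s` prefix, as in the example. `RunLabel` is a hypothetical helper: it builds filenames and parses them back, so scripts can read settings straight from a clip name.

```python
import re
from dataclasses import dataclass

# ShotID-Model-Preset-sSeed-Var-vNNN.mp4, assuming hyphen-free fields.
LABEL_RE = re.compile(
    r"^(?P<shot>[^-]+)-(?P<model>[^-]+)-(?P<preset>[^-]+)"
    r"-s(?P<seed>\d+)-(?P<var>[^-]+)-v(?P<version>\d+)\.mp4$"
)


@dataclass(frozen=True)
class RunLabel:
    shot: str
    model: str
    preset: str
    seed: int
    var: str
    version: int

    def filename(self) -> str:
        # Zero-pad the version to three digits, as in v001.
        return (f"{self.shot}-{self.model}-{self.preset}-s{self.seed}"
                f"-{self.var}-v{self.version:03d}.mp4")

    @classmethod
    def parse(cls, name: str) -> "RunLabel":
        m = LABEL_RE.match(name)
        if m is None:
            raise ValueError(f"not a run label: {name}")
        return cls(m["shot"], m["model"], m["preset"], int(m["seed"]),
                   m["var"], int(m["version"]))


label = RunLabel.parse("FD01-SVD-img2vid-s1234-denoise40-v003.mp4")
print(label.model, label.seed, label.var, label.version)
```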

File Hygiene Tips

Also, mirror that label in your Google Sheet or Notion. Link the clip, the prompt, and the exact params.
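If your sheet or Notion database starts from a CSV import, a short script can write the rows for you. This sketch uses only Python's standard `csv` and `json` modules; the column names and clip URL are assumptions, and params are stored as JSON so the exact values survive the round trip.

```python
import csv
import io
import json

FIELDS = ["label", "clip", "prompt", "params"]  # hypothetical column names


def write_run_log(stream, runs: list) -> None:
    """Write one CSV row per run, with params serialized as sorted JSON."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writeheader()
    for run in runs:
        writer.writerow({**run, "params": json.dumps(run["params"], sort_keys=True)})


runs = [{
    "label": "FD01-SVD-img2vid-s1234-denoise40-v003.mp4",
    "clip": "https://example.com/clips/FD01-SVD-img2vid-s1234-denoise40-v003.mp4",
    "prompt": "slow push-in, subject turns toward camera",
    "params": {"seed": 1234, "denoise": 40, "frames": 24},
}]

buffer = io.StringIO()  # or open("runs.csv", "w", newline="") to save a file
write_run_log(buffer, runs)
print(buffer.getvalue())
```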

Where Does VidAU AI Fit Without Friction?

Use VidAU AI to caption, repurpose, and version clips for hand-offs. You can trim clips, add safe-area text, and package variants for social or review. This keeps the team focused on creative tests while the asset flow stays tidy.

What Common Mistakes Slow Experimental Design In Video?

Teams slow down when they skip controls and tracking. Unrecorded seeds, model versions mixed mid-test, and two settings changed at once all hide the reason a clip improved. Avoid that with a fixed baseline and a written run plan.

Can You Get Good Results On A Budget?

Yes, you can mix free and low-cost tools. Start with SVD for baselines, draft with Krea or Genmo, and move heavy lifts to RunDiffusion only when needed. Keep ComfyUI graphs for repeat work and share them with the team.

Tool-By-Tool Quick Starts

Genmo: 5-Minute Motion Probe

Pick a hero still, write a short action prompt, and render quick clips. Compare motion cues (camera sway, limb arcs) at fixed seeds to see how the model treats physics.

Krea: Polish At The End Of The Sprint

Upscale your best take, apply a light restyle, and use lip-sync for talent reads. Export two clean sizes for review and social.

Stable Video Diffusion: Baseline Control

Lock frame count and FPS, then vary only the denoise steps. Export a contact-sheet video so reviewers can point at preferred motion.
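For the contact-sheet video, one option is ffmpeg's `hstack` filter, which places inputs side by side. The sketch below only builds the command; it assumes `ffmpeg` with libx264 is installed and that every clip shares the same height and pixel format, which `hstack` requires. The clip names follow the run-label template and are hypothetical.

```python
import shlex


def contact_sheet_cmd(clips: list, out_path: str) -> list:
    """Build an ffmpeg command that tiles clips left to right with hstack."""
    if len(clips) < 2:
        raise ValueError("hstack needs at least two inputs")
    cmd = ["ffmpeg"]
    for clip in clips:
        cmd += ["-i", clip]
    cmd += ["-filter_complex", f"hstack=inputs={len(clips)}",
            "-c:v", "libx264", "-pix_fmt", "yuv420p", out_path]
    return cmd


clips = [f"FD01-SVD-img2vid-s1234-denoise{d}-v001.mp4" for d in (30, 40, 50)]
cmd = contact_sheet_cmd(clips, "FD01-denoise-contact-sheet.mp4")
print(shlex.join(cmd))
# To render: subprocess.run(cmd, check=True)
```

Keeping the clips in sweep order (30, 40, 50) lets reviewers point at "left", "middle", or "right" when they name a preferred take.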

RunDiffusion: Heavy Batch Night

Spin up a workspace, queue the truth set, and sleep on it. Tag runs by model build, so you can rerun next month without surprises.
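A small manifest makes "tag runs by model build" enforceable. In this sketch (a hypothetical helper, not a RunDiffusion feature), the manifest records the build string next to the run list, and a rerun refuses to start if the build has changed, so old and new results never mix. The build strings are placeholders.

```python
import json


def make_manifest(model_build: str, runs: list) -> str:
    """Serialize the model build together with the runs it produced."""
    return json.dumps({"model_build": model_build, "runs": runs}, indent=2)


def check_rerun(manifest_json: str, current_build: str) -> list:
    """Return the runs to requeue, refusing if the model build has changed."""
    manifest = json.loads(manifest_json)
    if manifest["model_build"] != current_build:
        raise RuntimeError(
            f"model build changed: {manifest['model_build']} -> {current_build}; "
            "start a new baseline instead of mixing results")
    return manifest["runs"]


manifest = make_manifest("svd-build-A", ["FD01-SVD-img2vid-s1234-denoise40-v003.mp4"])
print(check_rerun(manifest, "svd-build-A"))
```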

ComfyUI: Repeat What Works

Save a clean graph once, reuse it forever. Expose only a few inputs (prompt, seed, strength) so producers can run it safely.
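A sketch of the "expose only a few inputs" idea, assuming the graph was exported in ComfyUI's API (JSON) format, where each node id maps to a dict with an `inputs` field. The node ids `"3"` and `"6"`, and mapping "strength" to a KSampler's `denoise` input, are assumptions about one particular graph; check the ids in your own export. The helper rejects any input it does not expose and never edits the saved master graph.

```python
import copy
from typing import Any

# Hypothetical mapping: producer-facing name -> (node id, input name).
EXPOSED = {
    "prompt": ("6", "text"),
    "seed": ("3", "seed"),
    "strength": ("3", "denoise"),
}


def fill_graph(graph: dict[str, Any], **values: Any) -> dict[str, Any]:
    """Return a copy of the saved graph with only exposed inputs changed."""
    unknown = set(values) - set(EXPOSED)
    if unknown:
        raise KeyError(f"not an exposed input: {sorted(unknown)}")
    filled = copy.deepcopy(graph)  # the master graph stays untouched
    for name, value in values.items():
        node_id, input_name = EXPOSED[name]
        filled[node_id]["inputs"][input_name] = value
    return filled


# Trimmed stand-in for an exported graph (real exports hold more nodes).
graph = {
    "3": {"class_type": "KSampler", "inputs": {"seed": 0, "denoise": 1.0}},
    "6": {"class_type": "CLIPTextEncode", "inputs": {"text": ""}},
}
job = fill_graph(graph, prompt="slow dolly in on the product", seed=1234, strength=0.4)
print(job)
```

The filled graph can then be queued on a running ComfyUI server; its bundled API example posts `{"prompt": graph}` to the `/prompt` endpoint, though it is worth confirming against the version you run.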

Copy-Paste Testing Matrix

| Shot | Goal | Variable (only one) | Seed | Notes | Winner |
| --- | --- | --- | --- | --- | --- |
| Face close-up | Keep identity stable | Denoise: 30 → 40 → 50 | 1234 | Watch lip corners, eye dart | |
| Full-body walk | Natural gait | Guidance: 3.5 → 5.0 → 6.5 | 5678 | Check foot slide, hip sway | |
| Product spin | Readable label | FPS: 12 → 18 → 24 | 1357 | Avoid blur; text clarity | |
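The matrix can also feed a render queue directly. This sketch expands each row into one run per value of its single variable, keeps the row's fixed seed, and names every clip with the run-label template. The shot ids (FC01, FB01, PS01), model, and preset are hypothetical, and dots in values become `p` so filenames stay clean.

```python
# (shot id, variable, values, fixed seed); shot ids are hypothetical.
MATRIX = [
    ("FC01", "denoise", [30, 40, 50], 1234),
    ("FB01", "guidance", [3.5, 5.0, 6.5], 5678),
    ("PS01", "fps", [12, 18, 24], 1357),
]


def expand(matrix, model="SVD", preset="img2vid", version=1):
    """Turn each row into one labeled run per value of its single variable."""
    queue = []
    for shot, variable, values, seed in matrix:
        for value in values:
            var = f"{variable}{value}".replace(".", "p")  # 3.5 -> 3p5
            queue.append(f"{shot}-{model}-{preset}-s{seed}-{var}-v{version:03d}.mp4")
    return queue


for name in expand(MATRIX):
    print(name)
```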

Conclusion

Experimental design thrives when creative teams work with the right tools, clear baselines, and repeatable methods. Genmo, Krea, Stable Video Diffusion, RunDiffusion, and ComfyUI each bring unique strengths that make controlled image-to-video testing practical instead of chaotic. By combining fast loops, seed control, and organized logging, you can turn early clips into reliable insights without wasting cycles. VidAU AI then closes the loop by packaging, captioning, and versioning outputs for smooth reviews and team hand-offs.

Frequently Asked Questions

1. How long should my first clip be?

Keep it under 4 seconds. Short tests save time and remove noise.

2. Which export format helps review?

Use MP4 H.264. It is small, shareable, and device-friendly.

3. When do I move to 4K?

After you lock motion and look. Upscale later to avoid wasted renders.

4. What FPS should I start with for short clips?

Begin at 12–18 fps. It reads as motion, renders fast, and keeps costs low.

5. How many frames should my first render use?

Start with 24–48 frames. You will see motion shape without long waits.
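The frame and FPS answers interact, because clip length is frames divided by fps. A quick check of the suggested starting ranges:

```python
def clip_seconds(frames: int, fps: float) -> float:
    """Clip length in seconds for a given frame count and playback rate."""
    return frames / fps


for frames in (24, 48):
    for fps in (12, 18):
        print(f"{frames} frames at {fps} fps = {clip_seconds(frames, fps):.2f} s")
```

Those combinations land between about 1.3 and 4 seconds, at or under the 4-second target from the first question.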

6. What bitrate works for review links?

Use the same bitrate for every variant in a comparison, and set it high enough that compression does not hide the motion detail you are judging.

7. How do I keep identity consistent across takes?

Lock the seed and keep lighting stable. Change only one setting per run.