Higgsfield Cinema Studio: The AI Filmmaking Masterclass for Hollywood-Level Cinematography Without Film School

Higgsfield is changing what it means to be a filmmaker. Instead of relying on film school, expensive gear, or technical setups, creators use Higgsfield AI to control motion, lens simulation, and visual continuity entirely within generative tools. From TikTok storytellers to YouTube filmmakers, they direct professional-grade shots using nothing but a prompt, a seed, and a scene description.

This masterclass shows how to go beyond clip generation. You’ll learn the visual grammar of real cinematography and how to apply it across platforms like Runway, Kling, and Sora. Most tools stop at “make it cinematic.” Higgsfield teaches you how to direct cinematic sequences, not just generate footage.

Why Traditional Filmmaking Fails Modern Creators

The core challenge in filmmaking has never been creativity; it is technical overhead. Traditional production demands mastery across lenses, lighting physics, stabilization systems, crew coordination, and post-production pipelines. Even a “simple” dolly shot requires rails, balancing, rehearsals, and budget. For solo creators or small teams, this complexity becomes paralyzing.

AI filmmaking flips this paradigm. Instead of learning how to physically operate equipment, creators learn how to describe cinematic intent to generative systems. The skill shifts from mechanical execution to visual orchestration.

However, most AI video tutorials stop at surface-level prompting. They teach how to generate clips, not how to direct them. The result is pretty but unusable footage: no continuity, no cinematic logic, no narrative control.

Higgsfield Cinema Studio addresses this gap by introducing a structured, professional workflow that mirrors real film production, but executes entirely inside AI systems.

Higgsfield vs Traditional Filmmaking Workflows

Here is a direct comparison of traditional filmmaking steps versus Higgsfield AI workflows to show how the platform simplifies professional video creation:

| Workflow Stage | Traditional Filmmaking | Higgsfield AI Workflow |
| --- | --- | --- |
| Shot Design | Requires cameras, lenses | Controlled through prompt engineering |
| Motion Control | Uses dollies, stabilizers | Simulated with motion vectors & paths |
| Visual Continuity | Demands lighting setups, sets | Achieved using seed parity & anchoring |
| Editing & Sequencing | Timeline-based editing software | AI-driven scene assembly tools |
| Cost & Iteration | High cost for each take | Low-cost, high-speed iterations |

The Three-Level AI Cinematography Workflow

At the heart of the masterclass is a structured three-level workflow that converts static images into controlled cinematic shots with consistency, motion, and editorial intent.

Level 1: Image Foundation (Visual DNA)

Every cinematic sequence begins with visual identity. In AI systems, this is established through high-fidelity image generation using Midjourney, or Stable Diffusion and Flux models run inside ComfyUI.

Key concepts taught at this level include:

– Latent Consistency: Locking character appearance, wardrobe, lighting, and environment across generations by managing latent space continuity.

– Seed Parity: Reusing or interpolating seeds to preserve facial structure and scene geometry between shots.

– Prompt Anchoring: Separating immutable descriptors (character, environment) from mutable ones (camera angle, emotion).
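To make prompt anchoring and seed parity concrete, here is a minimal Python sketch. It assumes nothing about any particular tool: the `Anchor` class, the `build_prompt` helper, and the returned dictionary layout are hypothetical names chosen for illustration, and how a seed is actually passed depends on the generator you use. Reusing a seed also does not guarantee identical faces on its own; it is one of several controls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Anchor:
    """Immutable descriptors shared by every shot in a sequence (hypothetical)."""
    character: str
    environment: str
    seed: int


def build_prompt(anchor: Anchor, camera: str, emotion: str) -> dict:
    """Combine the fixed anchor text with per-shot mutable descriptors."""
    text = ", ".join([anchor.character, anchor.environment, camera, emotion])
    # The seed travels with the text so every shot reuses the same value.
    return {"prompt": text, "seed": anchor.seed}


anchor = Anchor(
    character="woman in a red wool coat, short black hair",
    environment="rain-soaked neon alley at night",
    seed=421337,
)

# Only the camera and emotion change between shots.
wide = build_prompt(anchor, camera="wide shot, 35mm", emotion="wary")
close = build_prompt(anchor, camera="close-up, 85mm", emotion="resolute")
```

Because `Anchor` is frozen, the character and environment text cannot be edited by accident between shots, which is the point of separating immutable from mutable descriptors.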

Instead of generating dozens of random images, creators learn to build a controlled “visual bible” that functions like a production design document. These images become the source plates for animation in Runway Gen-3, Kling, or Sora.

This alone eliminates one of the biggest problems in AI filmmaking: visual drift.

Level 2: Shot Design and Camera Translation

Once the image foundation is locked, the workflow transitions into shot design—the language of cinema itself.

Rather than asking AI to “make it cinematic,” Higgsfield teaches creators to specify camera behavior using film grammar:

– Lens simulation (35mm vs 85mm equivalents)

– Depth of field control through prompt weighting

– Blocking and subject isolation
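It helps to know what a "35mm" or "85mm" look means geometrically before writing it into a prompt. The sketch below computes the horizontal angle of view for a rectilinear lens on a full-frame sensor using the standard formula, angle = 2 · atan(sensor width / (2 · focal length)). The function name is illustrative, and generative models do not take a physical focal length as input; the numbers only help you predict framing and choose wording.

```python
import math

FULL_FRAME_WIDTH_MM = 36.0  # width of a full-frame (35mm format) sensor


def horizontal_fov_degrees(
    focal_length_mm: float,
    sensor_width_mm: float = FULL_FRAME_WIDTH_MM,
) -> float:
    """Horizontal angle of view for a rectilinear lens focused at infinity."""
    if focal_length_mm <= 0:
        raise ValueError("focal length must be positive")
    half_angle = math.atan(sensor_width_mm / (2.0 * focal_length_mm))
    return math.degrees(2.0 * half_angle)


for focal in (35, 85):
    print(f"{focal}mm: {horizontal_fov_degrees(focal):.1f} degrees")
```

On full frame, a 35mm lens sees roughly 54 degrees horizontally and an 85mm lens roughly 24 degrees, which is why the longer lens reads as tighter and more isolating.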

In Runway and Kling, this is where image-to-video tools shine. By animating stills with constrained motion prompts, creators can emulate:

– Push-ins

– Pull-backs

– Lateral tracking shots

– Handheld micro-jitter for realism

Advanced users leverage ComfyUI video nodes with the Euler ancestral (Euler a) sampler and motion-strength curves to fine-tune temporal consistency. The goal is not movement for movement’s sake, but motivated camera motion that reinforces story beats.
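One way to keep motion prompts constrained is to build them from a small shot specification instead of free text. The sketch below is an assumption-heavy illustration: the `Shot` fields, the `MOVES` vocabulary, and the resulting phrasing are hypothetical and are not a documented prompt syntax for Runway, Kling, or any other tool. It also refuses to build a prompt when no story motivation is given, as a reminder that camera motion should be motivated.

```python
from dataclasses import dataclass

# Hypothetical vocabulary: the wording is illustrative, not a tool's prompt syntax.
MOVES = {
    "push_in": "slow push-in toward the subject",
    "pull_back": "slow pull-back revealing the environment",
    "track_left": "lateral tracking shot moving left",
    "track_right": "lateral tracking shot moving right",
    "handheld": "handheld camera with subtle micro-jitter",
}


@dataclass
class Shot:
    move: str
    focal_length_mm: int
    depth_of_field: str
    subject: str
    motivation: str  # the story beat this camera move supports


def motion_prompt(shot: Shot) -> str:
    """Turn a shot specification into a single constrained motion prompt."""
    if shot.move not in MOVES:
        raise ValueError(f"unknown camera move: {shot.move}")
    if not shot.motivation.strip():
        raise ValueError("every camera move needs a story motivation")
    return (
        f"{MOVES[shot.move]}, {shot.focal_length_mm}mm lens, "
        f"{shot.depth_of_field}, {shot.subject}"
    )


shot = Shot(
    move="push_in",
    focal_length_mm=85,
    depth_of_field="shallow depth of field",
    subject="subject centered in frame",
    motivation="she realizes she is being followed",
)
print(motion_prompt(shot))
```

The motivation is deliberately kept out of the prompt text. It is a note for the director, not an instruction to the model.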

This level teaches creators to think like cinematographers—using the camera as a narrative device, not just a generator.

Level 3: Cinematic Continuity and Sequence Assembly

A single beautiful shot is not a film. The final level focuses on sequencing, continuity, and editorial logic.

Key techniques include:

– Cross-shot Latent Matching: Maintaining character scale, directionality, and lighting continuity across multiple AI clips.

– Motion Vector Awareness: Ensuring camera movement direction aligns across cuts to avoid visual disorientation.

– Temporal Rhythm: Designing shot duration based on emotional pacing rather than default clip length.
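The motion-direction and rhythm checks above can be sketched as a small pre-edit pass over a shot list. This is a simplified illustration, not how any editor implements continuity: the `Clip` fields and the direction labels are hypothetical, and a reversal across a cut is sometimes a deliberate choice, so the function only reports candidates for review.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    name: str
    direction: str  # camera travel: "left", "right", "in", "out", or "static"
    seconds: float  # duration chosen for pacing, not the tool's default length


# Adjacent clips with these direction pairs reverse camera travel across the cut.
OPPOSITES = {("left", "right"), ("right", "left"), ("in", "out"), ("out", "in")}


def direction_conflicts(clips: list) -> list:
    """Return (previous, next) clip names where camera travel reverses."""
    return [
        (a.name, b.name)
        for a, b in zip(clips, clips[1:])
        if (a.direction, b.direction) in OPPOSITES
    ]


def total_runtime(clips: list) -> float:
    """Sum of clip durations in seconds."""
    return sum(c.seconds for c in clips)


sequence = [
    Clip("alley_wide", "left", 2.5),
    Clip("alley_medium", "left", 4.0),
    Clip("alley_close", "right", 1.5),
]
print(direction_conflicts(sequence))
print(total_runtime(sequence))
```

Running the check before generating final clips is cheaper than discovering a jarring cut after every shot has been upscaled.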

Tools like Runway’s multi-shot editor or Sora’s scene expansion capabilities allow creators to assemble sequences that feel intentionally directed rather than algorithmically stitched together.

By the end of this level, creators are no longer “generating videos.” They are directing scenes.

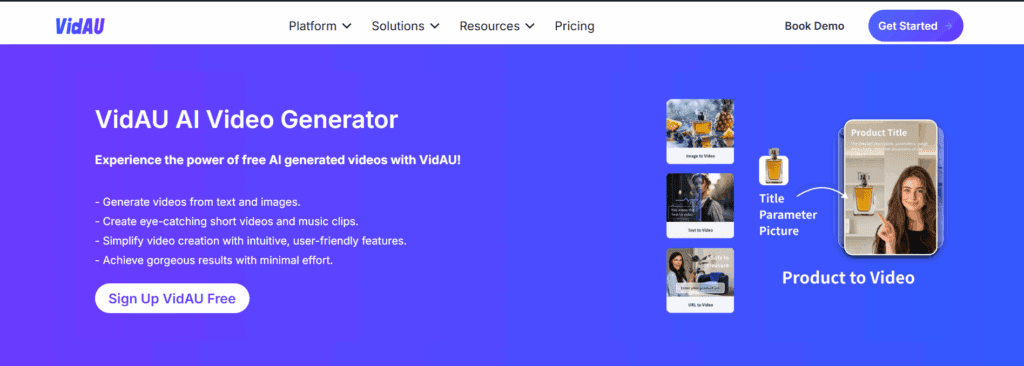

How to Use VidAU With Higgsfield for Cinematic Content

Follow these steps to connect Higgsfield’s cinematic output with VidAU’s fast editing system:

- Generate Cinematic Frames in Higgsfield: Use your prompt setup to create motion-ready visuals with seed locking and consistent design.

- Export the Scenes: Output the generated frames or clips from Higgsfield-compatible tools like Runway or Sora.

- Upload to VidAU: Import the content into VidAU’s editor to begin repurposing or styling.

- Enhance With Text, Audio, and Effects: Add overlays, voice tracks, subtitles, or dynamic text to match your content goal.

- Export for Reels, Shorts, or Ads: Format into vertical or horizontal layouts and download platform-optimized versions.

VidAU helps you deliver polished, cinematic content from your AI footage without extra software or manual editing chains.


Motion, Camera Language, and Hollywood Results on a Creator Budget

One of the biggest myths in filmmaking is that cinematic quality comes from equipment. In reality, it comes from motion control and visual coherence.

AI tools excel here when used correctly.

Motion Control Without Rigs

In traditional cinema, motion requires hardware. In AI cinema, motion requires parameters.

Higgsfield teaches how to:

– Use camera path descriptors instead of vague motion prompts

– Control acceleration and deceleration curves in Kling and ComfyUI

– Simulate crane, dolly, and Steadicam shots through layered motion prompts
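Acceleration and deceleration are easier to reason about as a curve. The sketch below uses the standard smoothstep function, s(t) = 3t² − 2t³, to produce ease-in, ease-out positions along a camera path. Whether and how Kling or ComfyUI expose such a curve is not assumed here: the function names are hypothetical, and the values would have to be mapped onto whatever motion parameter your tool actually accepts.

```python
def smoothstep(t: float) -> float:
    """Ease-in, ease-out curve: 0 at t=0, 1 at t=1, zero slope at both ends."""
    t = min(max(t, 0.0), 1.0)  # clamp to the valid range
    return t * t * (3.0 - 2.0 * t)


def eased_positions(start: float, end: float, frames: int) -> list:
    """Camera position per frame, accelerating out of start and settling into end."""
    if frames < 2:
        raise ValueError("need at least two frames")
    return [
        start + (end - start) * smoothstep(i / (frames - 1))
        for i in range(frames)
    ]


# A five-frame dolly move from position 0.0 to 1.0 (arbitrary path units).
positions = eased_positions(0.0, 1.0, 5)
print(positions)
```

The first and last steps are smaller than the middle ones, which is what makes a simulated dolly feel like it has mass instead of starting and stopping instantly.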

This allows creators to execute shots that would normally cost thousands of dollars in gear—using nothing but compute.

Budget Efficiency Through Iteration

Hollywood productions burn money through physical iteration. AI productions burn tokens—but tokens are cheap compared to reshoots.

Creators learn:

– How to downscale tests for motion blocking

– When to upscale only final shots

– How to preserve seeds and metadata for revision control
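A lightweight way to practice this is to keep a small metadata record for every take. The sketch below is an assumption, not a feature of any tool: `take_record` and `promote_to_final` are hypothetical helpers that store the prompt, seed, and resolution, derive a stable ID from those settings, and carry the same seed forward when a low-resolution test is promoted to a final render.

```python
import hashlib
import json


def take_record(prompt: str, seed: int, width: int, height: int, stage: str) -> dict:
    """Describe one take so it can be reproduced or revised later."""
    if stage not in ("test", "final"):
        raise ValueError("stage must be 'test' or 'final'")
    record = {
        "prompt": prompt,
        "seed": seed,
        "width": width,
        "height": height,
        "stage": stage,
    }
    # Identical settings always hash to the same ID, so duplicates are easy to spot.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["take_id"] = hashlib.sha256(payload).hexdigest()[:12]
    return record


def promote_to_final(test: dict, scale: int) -> dict:
    """Reuse the test take's prompt and seed at a higher resolution."""
    return take_record(
        test["prompt"],
        test["seed"],
        test["width"] * scale,
        test["height"] * scale,
        "final",
    )


test_take = take_record("slow push-in, 85mm lens", 421337, 480, 270, "test")
final_take = promote_to_final(test_take, scale=4)
print(json.dumps(final_take, indent=2))
```

Saving these records as JSON next to each clip gives you a simple revision history without any extra software.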

This mirrors professional VFX pipelines but at a solo-creator scale.

The New Cinematographer Skillset

The Higgsfield Cinema Studio masterclass reframes what it means to be a filmmaker in 2026:

– You don’t need to know how to build a dolly, but you must know when a dolly shot is emotionally correct.

– You don’t need to light a set, but you must understand contrast, directionality, and mood.

– You don’t need a crew, but you must think like a director.

By mastering AI-native cinematography, creators gain access to a level of visual storytelling that was previously unattainable without institutional backing.

This is not the future of filmmaking.

It’s the present—finally accessible.

Frequently Asked Questions

Q: Do I need prior filmmaking experience to use Higgsfield Cinema Studio?

A: No. The workflow is designed for beginners while still teaching real cinematic principles. You learn film language through AI execution rather than traditional equipment.

Q: Which AI tools are required for this workflow?

A: The core tools include Runway Gen-3, Kling, Sora (where available), and ComfyUI for advanced users. The concepts apply across platforms.

Q: How does this differ from basic AI video prompting tutorials?

A: Most tutorials focus on generating clips. Higgsfield focuses on directing shots, maintaining continuity, and assembling cinematic sequences using professional film logic.

Q: Can this workflow be used for YouTube or short-form content?

A: Yes. The workflow scales from short-form storytelling to long-form cinematic projects while maintaining visual consistency.

Q: Is this replacing traditional filmmaking skills?

A: It replaces mechanical execution, not artistic judgment. Understanding storytelling, composition, and motion remains essential.