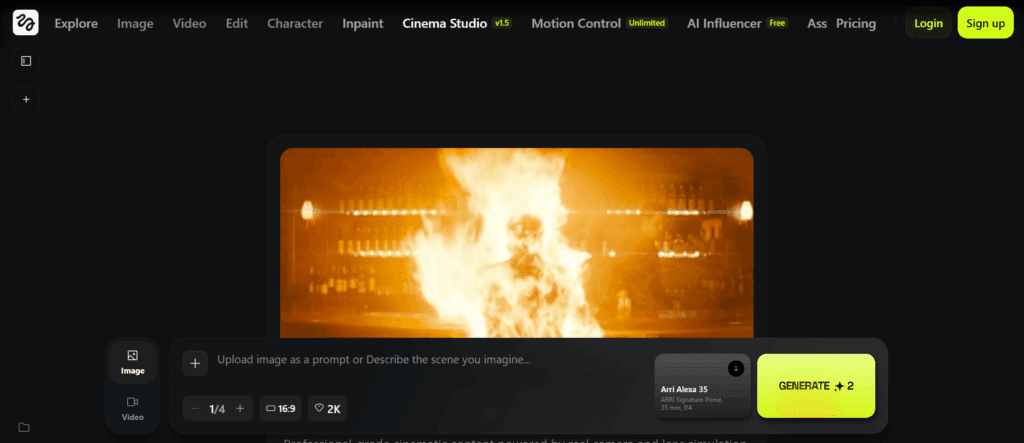

Top Image-to-Video AI Generator Showdown: Which Tool Reigns Supreme?

This weekend, I put the major image-to-video AI generators to the test. I used the exact same prompts on every platform to see which ones deliver the best results for both text-to-video and image-to-video.

Key Takeaways

- Weavy AI is a powerful tool for comparing multiple AI video generators in one place, simplifying complex workflows.

- Google V3 and Cling AI often performed well across various tests, showing strong prompt adherence and visual quality.

- Moon Valley consistently underperformed, often producing nonsensical or low-quality results, making it a poor value.

- Seedance and Minimax showed promise in specific areas, offering good alternatives for certain use cases.

- Pricing varies significantly, with Runway being the most affordable and Google V3 being the most expensive for comparable generation times.

Text-to-Video Comparisons

I ran four core text-to-video prompts, each chosen to stress a different skill.

Prompt: Astronaut Drilling On An Asteroid

- Google V3: Decent drilling but weak “space” feel.

- Seedance: Better drill motion but missed the floating effect.

- Halo AI: Not impressive.

- Moon Valley: Confusing result.

- Cling 2.1: Some floating; realism lacked.

- Wondershare Filmora (one 2.2): Gravity effect there, but unnatural look.

Prompt: Alien Reading A Holographic Newspaper

- Google V3: Excellent holographic display and natural actions.

- Seedance: Floating newspaper; actions less convincing.

- Minimax: Hologram glitched.

- Moon Valley: Poor again.

- Cling 2.1: Good alien model and hybrid newspaper; weak yawn.

- Wondershare Filmora: Too fast; no real hologram.

Prompt: Anime Fighter Punch Combo

- Google V3: Weak punches; poor prop use.

- Seedance: Stronger punches; issues with the water bottle.

- Minimax: Surprisingly strong; added a fun “magic trick.”

- Moon Valley: Failed.

- Cling 2.1: Realistic sip of water; no towel action.

- Wondershare Filmora: Decent but not standout.

Prompt: Pixar-Style Squirrel On A Bike With A Giant Sandwich And Birds

- Google V3: Great prompt coverage, including birds.

- Seedance: Strong motion; birds less convincing.

- Minimax: Not quite Pixar; sandwich broken.

- Moon Valley: Again, very poor.

- Cling 2.1: Good cape and sandwich; odd camera angle.

- Wondershare Filmora: Mixed styles; too fast.

Image-to-Video Comparisons

This mode gives tighter control. I checked consistency, motion, and camera work.

Zombie Reaching Toward Camera In A Storm

- Google V3: Strong sea effects; nice jump scare.

- Wondershare Filmora: Fast, handheld vibe.

- Hicksfield: Slow-motion look; blurred hand.

- Moon Valley: Unusable; morphing face.

- Minimax: Glowing eyes and water effects.

- Seedance: Close approach; odd pirate in/out.

- Runway: No movement; only glowing eyes.

- Cling 2.1: A favorite here; active waves and struggle to stand.

Girl Leaning Out Of A Moving Train

- Google V3: Top performer; mouth stuck open.

- Wondershare Filmora: Strong speed sense; opposite train motion.

- Hicksfield: Didn’t work well.

- Moon Valley: Failed.

- Minimax: Changed scene; inconsistent character; good camera move.

- Seedance: Decent; slow-motion feel.

- Runway: Fast background; statue-like subject.

- Cling 2.1: Large scene changes; weak character consistency.

Two Viking Warriors Fighting

- Google V3: Missing axe; hits lacked realism.

- Wondershare Filmora: Some action; morphing issues.

- Hicksfield: Not great.

- Moon Valley: Scene changed entirely.

- Minimax: Movement struggled.

- Seedance: Decent; impact not visible.

- Runway: Statue-like again.

- Cling: Best for dynamic movement here, though not perfect.

Lego Barbecue Scene

- Google V3: Bird steals sausage; fun “Oh no, my sausage” moment.

- Wondershare Filmora: Bird steals people instead.

- Hicksfield: Very good; bird grabs sausage and plate.

- Moon Valley: Skipped.

- Minimax: Decent; not as strong as Hicksfield.

- Seedance: Bird flies in; no grab.

- Runway: Some motion; a button gets taken.

- Cling: Characters react to the bird, an interesting touch.

Pricing and Value

Costs vary a lot. Here’s the bottom line from the tests.

What Stood Out On Cost

- Runway: Cheapest overall for image-to-video.

- Google V3: Most expensive for similar generation time.

- Moon Valley: Overpriced for its quality.

- Cling, Seedance, Minimax, Wondershare Filmora: Good value choices depending on your use case.
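Comparing prices "for similar generation time" comes down to normalizing each quote to a cost per second of output. The sketch below is one way to do that, not the method used in these tests. The `Quote` fields, the `tool_a`/`tool_b`/`tool_c` labels, and every number are placeholders invented for illustration; they are not real prices for any tool named here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quote:
    tool: str            # label for the generator
    price: float         # what one generation costs, in your currency
    clip_seconds: float  # length of the clip that price buys


def cost_per_second(q: Quote) -> float:
    """Normalize a quote to cost per second of generated video."""
    if q.clip_seconds <= 0:
        raise ValueError("clip_seconds must be positive")
    return q.price / q.clip_seconds


def rank_by_cost(quotes):
    """Return quotes sorted from cheapest to most expensive per second."""
    return sorted(quotes, key=cost_per_second)


# Placeholder numbers only; these are NOT real prices for any tool.
quotes = [
    Quote("tool_a", price=1.00, clip_seconds=5),
    Quote("tool_b", price=3.20, clip_seconds=8),
    Quote("tool_c", price=0.50, clip_seconds=10),
]

for q in rank_by_cost(quotes):
    print(f"{q.tool}: {cost_per_second(q):.2f} per second")
```

Swap in the prices you actually pay. A per-second figure keeps a tool that only offers longer clips from looking more expensive than it is.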

Comparison Snapshot

| Tool | Strengths Noted in Tests | Weak Spots Seen | Best For | Value Take |
| --- | --- | --- | --- | --- |
| Google V3 | Prompt coverage; strong holograms; solid sea effects | Pricey; some weak motion/props | High-fidelity text-to-video; cinematic shots | Powerful but costly |
| Cling (2.1) | Dynamic movement; rich scenes; zombie/waves standout | Inconsistency; odd camera in some prompts | Action shots; energetic motion | Strong results for the price |
| Seedance | Good drill action; strong motion; steady results | Birds/impact not always convincing | Physical actions; medium realism | Solid mid-tier option |
| Minimax | Shockingly good punches; creative touches | Style drift; scene/character shifts | Creative variations; fun details | Good budget creativity |
| Wondershare Filmora | Fast outputs; clear motion; accessible | Often too fast; style mix; odd choices | Quick tests; speed over polish | Budget-friendly utility |
| Runway | Lowest cost; easy to run | Static subjects in tests; limited motion | Cheap experiments; drafts | Best on price, not quality |
| Hicksfield | Lego scene strong; slow-mo aesthetic | Blur/motion issues elsewhere | Specific stylized shots | Niche, situational value |
| Halo AI | Basic outcomes | Didn’t impress | Very simple prompts | Only if needed |
| Moon Valley | None noted | Nonsensical, morphing, poor quality | Avoid for quality work | Overpriced and weak |
| Weavy AI (hub) | Compare tools in one place; workflow control | Not a generator itself | Centralized testing and management | Saves time across tools |

Practical Tips and Checklists

Prompt and setup checklist

- Keep prompts short and specific.

- Set aspect ratio before you generate.

- Reuse the same face/image for consistency.

- Add one action at a time.

- Test 3–5 seeds to find a clean take.
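One way to apply this checklist is to write the test plan down before generating anything, so every tool gets the same prompts and the same number of seeds. The sketch below only builds that plan as plain data and calls no generator. `build_run_plan` is a hypothetical helper, the seed values are arbitrary, and it assumes the tool you use lets you set a seed at all, which not every platform does.

```python
from itertools import product


def build_run_plan(prompts, tools, seeds):
    """Return one job per (prompt, tool, seed) so every tool sees identical inputs."""
    return [
        {"prompt": p, "tool": t, "seed": s}
        for p, t, s in product(prompts, tools, seeds)
    ]


prompts = [
    "Astronaut drilling on an asteroid",
    "Alien reading a holographic newspaper",
]
tools = ["Seedance", "Minimax"]  # labels only; no API is called here
seeds = [1, 2, 3]                # arbitrary seed values for illustration

plan = build_run_plan(prompts, tools, seeds)
print(len(plan))  # 2 prompts x 2 tools x 3 seeds = 12 jobs
print(plan[0])
```

Working from an explicit plan also makes it harder to judge a tool on a single lucky or unlucky clip.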

Common mistakes to avoid

- Piling too many actions into one prompt.

- Mixing styles mid-prompt.

- Forgetting that the last uploaded image may set the aspect ratio.

- Judging a tool from one single clip.
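The aspect-ratio mistake above is easy to catch before uploading. As a minimal sketch, the helper below flags any source image whose ratio differs from the one you intend to generate. The file names and pixel sizes are made up for illustration, and in practice you would read the real dimensions with whatever image library you already use.

```python
from fractions import Fraction


def aspect_ratio(width: int, height: int) -> Fraction:
    """Return the exact width:height ratio in lowest terms."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    return Fraction(width, height)


def mismatched_images(images, target="16:9"):
    """List image names whose ratio is not exactly the target ratio."""
    w, h = (int(part) for part in target.split(":"))
    want = Fraction(w, h)
    return [
        name
        for name, (img_w, img_h) in images.items()
        if aspect_ratio(img_w, img_h) != want
    ]


# Hypothetical file names and sizes, for illustration only.
images = {
    "hero.png": (1920, 1080),
    "closeup.png": (1080, 1920),
    "wide.png": (1280, 720),
}
print(mismatched_images(images, "16:9"))  # ['closeup.png']
```

The comparison is exact, so an image that is a pixel or two off will be flagged as well. Loosen the check if your crops are approximate.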

Conclusion

After testing numerous AI video generators, it’s clear that some tools stand out. Google V3 and Cling AI consistently performed well across many tests. However, tools like Moon Valley are best avoided due to their poor quality and high cost. For those looking to compare results efficiently, Weavy AI is a great platform to manage these diverse tools.

Frequently Asked Questions

1. What is an image-to-video AI generator?

It is a model that takes a still image and a short prompt, then produces a brief animated clip with motion.

2. How do I keep characters consistent across shots?

Use the same source image for every shot. Repeat the same descriptive phrases for the character in each prompt. Re-crop for close-ups to preserve detail.

3. What’s the ideal clip length for social?

Aim for 3–8 seconds per shot. Then stitch several shots together for longer edits.

4. How can I get better lip sync or timed actions?

Start with a small action, then add the spoken line. Keep lines short to improve timing.

5. How do I control aspect ratio across tools?

Choose the ratio (16:9, 9:16, 1:1) before generation. If you upload multiple images, remember the last one may set the ratio.

6. Should I judge a tool from one result?

No. Try a few seeds and minor prompt tweaks. Quality varies from run to run.

7. Which tool is best for budget tests?

Runway was the cheapest in these tests, but its subjects often stayed static. For balanced value, check Minimax, Seedance, or Cling.
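For the clip-length answer above (3–8 seconds per shot, stitched into longer edits), the arithmetic can be sketched as a small planner that splits a target duration into equal shots. `plan_shots` is a hypothetical helper; the 3 and 8 second bounds come from the guideline above, and splitting into equal-length shots is just one simple choice.

```python
import math


def plan_shots(total_seconds: float, min_len: float = 3.0, max_len: float = 8.0):
    """Split a target duration into equal shots that each fall within [min_len, max_len]."""
    if total_seconds < min_len:
        raise ValueError("total is shorter than one minimum-length shot")
    # Fewest shots that keep every shot at or under max_len.
    count = math.ceil(total_seconds / max_len)
    return [total_seconds / count] * count


print(plan_shots(30))  # [7.5, 7.5, 7.5, 7.5]
print(plan_shots(5))   # [5.0]
```

Using the fewest shots keeps generation count, and so cost, down. If you want quicker cuts, lower `max_len`.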