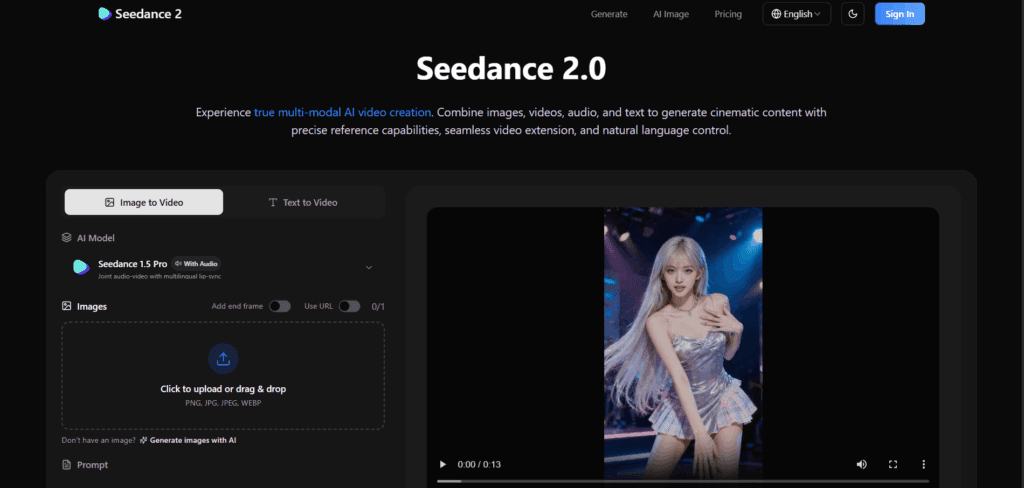

Seedance 2.0 First Test Results: A Technical Performance Review for Skeptical AI Video Creators

I tested Seedance 2.0 for 3 days straight — and not everything is perfect.

If you’ve been in AI video long enough, you already know the pattern: cinematic launch reel, carefully curated demo prompts, zero visibility into failure rates. Then you try the tool yourself and reality hits.

So instead of repeating marketing claims, I stress-tested Seedance 2.0 across structured scenarios to evaluate three core areas:

1. Prompt adherence quality across different compositions and constraints

2. Motion, consistency, and efficiency under iterative workflows

3. Edge-case failures and systemic limitations

This review is built for skeptical creators who care about production viability, not Twitter hype.

Why Most AI Video Launches Overpromise (And How I Tested Seedance 2.0)

The central problem with AI video tool launches isn’t that they’re bad.

It’s that the demos are optimized for best-case latent trajectories.

You see one perfect seed. You don’t see the nine that broke.

To avoid that trap, I ran Seedance 2.0 through:

– 30+ structured prompts across narrative, product, character, and cinematic scenarios

– Seed-locked iterations to test seed parity (how closely a reused seed reproduces the same result)

– Controlled variations in prompt weight emphasis

– Motion stress tests (camera moves + subject movement combined)

– Long prompt chaining to test instruction retention
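If you want to run a similar grid yourself, here is a minimal sketch of how the test matrix could be laid out. It only builds the list of runs; no Seedance API call is shown, because I'm not assuming any particular interface. The names `RunSpec`, `SEEDS`, and `EMPHASIS` are hypothetical, and the two prompts are shortened versions of the ones used later in this review.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class RunSpec:
    """One planned generation: which prompt, which seed, which emphasis variant."""
    category: str
    prompt: str
    seed: int
    emphasis: str


# Illustrative subset only; the actual test used 30+ prompts.
PROMPTS = {
    "cinematic": "A handheld tracking shot of a woman in a red raincoat "
                 "walking through a neon-lit Tokyo alley at night",
    "character": "A middle-aged chef with a scar over his left eye "
                 "flipping a pancake in a small kitchen",
}
SEEDS = [7, 42, 1234]                      # fixed seeds so every prompt can be re-run
EMPHASIS = ["none", "subject", "camera"]   # hypothetical emphasis variants


def build_matrix(prompts, seeds, emphasis):
    """Return one RunSpec per (prompt, seed, emphasis) combination."""
    return [
        RunSpec(category, text, seed, emph)
        for (category, text), seed, emph in product(prompts.items(), seeds, emphasis)
    ]


matrix = build_matrix(PROMPTS, SEEDS, EMPHASIS)
print(len(matrix))  # 2 prompts x 3 seeds x 3 emphasis variants = 18
```

Laying the runs out up front makes it harder to quietly drop the seeds that broke.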

I specifically evaluated how well the model maintains:

– Latent consistency across frames

– Object permanence during occlusion

– Identity stability across motion arcs

– Scheduler robustness under complex movement instructions

Let’s break it down.

Prompt Adherence Deep Dive

Scenario 1: Structured Cinematic Prompts

Prompt example:

> A handheld tracking shot of a woman in a red raincoat walking through a neon-lit Tokyo alley at night, steam rising from vents, shallow depth of field, cinematic lighting, 35mm lens.

Result:

Seedance 2.0 performs well in global scene interpretation.

– Lighting context: ✅ Strong

– Environment coherence: ✅ High

– Camera style emulation: ✅ Convincing

Where it stands out is in scene-level latent coherence. Unlike earlier-generation systems that would lose environmental consistency mid-clip, Seedance 2.0 maintains alley geometry and lighting logic across the sequence.

However, micro-detail prompt adherence (e.g., “steam rising from vents”) was inconsistent. Steam sometimes appeared briefly, then vanished, possibly because the model prioritizes motion continuity over small transient elements.

Conclusion: Excellent macro adherence. Imperfect micro-object persistence.

Scenario 2: Multi-Constraint Character Prompts

Prompt example:

> A middle-aged chef with a scar over his left eye flipping a pancake in a small kitchen, wearing a blue apron with a yellow logo.

Here’s where things get interesting.

Seedance 2.0 does better than most models at attribute stacking — it can handle 3–5 constraints reliably.

But once you stack six or more attributes (age, facial detail, clothing, logo color, action timing, camera movement), adherence drops.

Failure modes included:

– Scar migrating position (left → right eye)

– Logo distortion mid-motion

– Pancake morphing shape during flip arc

This suggests the model prioritizes motion continuity over fine-grained attribute fidelity under high constraint load.

That’s not necessarily bad — but it matters for product shoots or brand work.
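To track this kind of drop-off in your own tests, one option is to hand-label which requested attributes survive each clip and group the results by how many constraints the prompt contained. The sketch below assumes that labeling scheme; the `results` values are made-up placeholders to show the shape of the data, not my measurements.

```python
from collections import defaultdict

# Placeholder labels: True = attribute held for the whole clip, False = it broke.
results = [
    {"age": True, "scar_left_eye": True, "blue_apron": True},
    {"age": True, "scar_left_eye": True, "blue_apron": True,
     "yellow_logo": True, "pancake_flip": True},
    {"age": True, "scar_left_eye": False, "blue_apron": True, "yellow_logo": False,
     "pancake_flip": True, "camera_move": True, "action_timing": False},
]


def adherence_by_constraint_count(clips):
    """Map number of requested attributes -> mean fraction of attributes that held."""
    buckets = defaultdict(list)
    for attrs in clips:
        kept = sum(attrs.values()) / len(attrs)
        buckets[len(attrs)].append(kept)
    return {n: sum(scores) / len(scores) for n, scores in sorted(buckets.items())}


summary = adherence_by_constraint_count(results)
for n, score in summary.items():
    print(f"{n} constraints: {score:.0%} of attributes held")
```

For brand work, the per-attribute labels matter more than the average: a clip that keeps six of seven attributes still fails if the one it lost is the logo.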

Scenario 3: Complex Camera + Motion Prompts

Prompt example:

> Slow dolly-in while the subject turns toward camera, wind blowing hair, dramatic lighting shift from cool to warm.

This is where many AI video systems collapse due to conflicting motion vectors in latent space.

Seedance 2.0 handled this better than expected.

The dolly-in maintained spatial coherence. Subject rotation aligned with perspective change. Hair motion remained plausible.

However:

– Lighting transitions were abrupt rather than gradual.

– Shadow logic occasionally desynced from subject orientation.

This suggests the scheduler likely favors temporal smoothness in geometry over physically accurate light propagation.
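"Abrupt rather than gradual" can be turned into a rough number. The sketch below assumes you have already extracted one warmth value per frame (for example, mean red minus mean blue); that extraction step, the function name, and the sample values are all illustrative assumptions. It reports what share of the total shift happens in the single largest frame-to-frame step.

```python
def largest_step_share(warmth):
    """Fraction of the total warmth change that occurs in the biggest single step.

    Close to 1.0 means the shift happened almost entirely in one frame;
    an evenly spread shift over N frames gives about 1 / (N - 1).
    """
    steps = [abs(b - a) for a, b in zip(warmth, warmth[1:])]
    total = sum(steps)
    return max(steps) / total if total else 0.0


# Made-up per-frame warmth values for illustration.
gradual = [100, 110, 120, 130, 140]
abrupt = [100, 101, 139, 140, 140]

print(largest_step_share(gradual))  # 0.25
print(largest_step_share(abrupt))   # 0.95
```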

Still, compared to earlier-generation diffusion pipelines (frames sampled with Euler a or DDIM, then stitched together temporally), this is a step forward.

Where Seedance 2.0 Excels

Let’s talk about the wins.

1. Motion Quality

Seedance 2.0’s motion is its strongest asset.

Unlike systems that generate motion as post-hoc frame interpolation, this feels natively temporal.

Strengths:

– Reduced jitter

– Lower frame-to-frame warping

– Better temporal latent locking

– Smoother motion arcs

Fast motion (running, fabric movement, camera pans) feels significantly more coherent than in previous-generation diffusion-video hybrids.

It appears the model uses stronger temporal attention coupling, minimizing frame independence artifacts.
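Jitter is easy to eyeball and harder to compare across tools, so here is one crude proxy, sketched under stated assumptions: frames are small grayscale grids (nested lists), and "jitter" is taken to mean how unevenly the frame-to-frame difference changes over time. This is my own stand-in metric, not something Seedance reports.

```python
def frame_diff(a, b):
    """Mean absolute pixel difference between two equally sized grayscale frames."""
    total = sum(
        abs(pa - pb)
        for row_a, row_b in zip(a, b)
        for pa, pb in zip(row_a, row_b)
    )
    return total / (len(a) * len(a[0]))


def jitter_score(frames):
    """Average change in frame-to-frame difference; 0.0 means perfectly even motion."""
    diffs = [frame_diff(f0, f1) for f0, f1 in zip(frames, frames[1:])]
    if len(diffs) < 2:
        return 0.0
    return sum(abs(d1 - d0) for d0, d1 in zip(diffs, diffs[1:])) / (len(diffs) - 1)


# Toy 1x2 "frames": brightness ramps evenly in `smooth`, unevenly in `jerky`.
smooth = [[[0, 0]], [[10, 10]], [[20, 20]], [[30, 30]]]
jerky = [[[0, 0]], [[25, 25]], [[27, 27]], [[30, 30]]]

print(jitter_score(smooth))  # 0.0
print(jitter_score(jerky))   # 12.0
```

A score like this only makes sense when comparing clips of the same prompt and length; fast action will always produce larger differences than a static shot.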

For action shots, this matters.

2. Identity Consistency

Character drift is a plague in AI video.

Seedance 2.0 improves on this.

Across 5–7 second clips:

– Facial structure largely holds

– Hairstyle remains stable

– Clothing stays consistent (except under heavy motion blur)

This indicates improved latent anchoring across time steps.

However, beyond ~8 seconds, identity degradation becomes noticeable.

This suggests a temporal coherence window limitation — possibly due to internal memory compression rather than full sequence modeling.

For short-form creators? Excellent.

For long narrative sequences? Still fragmented.

3. Efficiency and Iteration Speed

One of the most underrated aspects: iteration speed.

Compared to ComfyUI diffusion pipelines with heavy ControlNet stacks, Seedance 2.0 feels streamlined.

– Faster generation cycles

– Less parameter micromanagement

– More predictable seed behavior

Seed parity testing showed moderate stability. While not pixel-identical (as expected), reusing seeds produced directionally similar motion.

That’s crucial for controlled iteration.

If you’re building repeatable workflows, this is usable.
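"Directionally similar" can be checked with a simple comparison. The sketch below assumes you have tracked the subject's center position per frame in two runs that used the same seed (the tracking itself is out of scope, and the coordinates are invented). It averages the cosine similarity of the per-frame displacement vectors: 1.0 means the motion points the same way, -1.0 means it is reversed.

```python
import math


def cosine(u, v):
    """Cosine similarity of two 2D vectors; 0.0 if either has zero length."""
    norm = math.hypot(*u) * math.hypot(*v)
    return (u[0] * v[0] + u[1] * v[1]) / norm if norm else 0.0


def displacements(track):
    """Per-frame movement vectors from a list of (x, y) positions."""
    return [(x1 - x0, y1 - y0) for (x0, y0), (x1, y1) in zip(track, track[1:])]


def seed_parity(track_a, track_b):
    """Mean cosine similarity between the displacement vectors of two runs."""
    pairs = list(zip(displacements(track_a), displacements(track_b)))
    if not pairs:
        return 0.0
    return sum(cosine(u, v) for u, v in pairs) / len(pairs)


# Invented subject positions for illustration.
run_1 = [(0, 0), (2, 1), (4, 2), (6, 3)]
run_2 = [(1, 0), (3, 1), (5, 2), (7, 3)]        # shifted start, same direction
run_3 = [(0, 0), (-2, -1), (-4, -2), (-6, -3)]  # reversed direction

print(round(seed_parity(run_1, run_2), 3))  # 1.0
print(round(seed_parity(run_1, run_3), 3))  # -1.0
```

This deliberately ignores pixel-level differences, which matches the observation above that reused seeds are not pixel-identical but move the same way.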

Where It Stumbles

Now the part marketing videos won’t show you.

1. Edge-Case Physics

When prompts involve:

– Complex hand-object interactions

– Transparent materials

– Reflections within reflections

– Liquids under rapid motion

Failure rates increase significantly.

Hands gripping objects often partially fuse.

Liquids lose volume continuity.

Reflections decouple from geometry.

These are classic generative model weaknesses tied to insufficient physical priors.

Seedance 2.0 improves smoothness — not physics accuracy.

2. Long Prompt Memory Decay

Prompts exceeding ~120–150 tokens show instruction loss.

Later clauses become weakly represented in output.

This suggests one of two causes:

– Either token weighting diminishes with depth

– Or attention allocation prioritizes early prompt components

Workaround: front-load critical constraints.
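A small helper can make front-loading routine. This is a sketch, assuming you tag each clause with a priority yourself (1 = most critical) and that whitespace-separated words are an acceptable rough stand-in for tokens; the real tokenizer is unknown to me and will count differently. The default budget of 120 mirrors the lower end of the range where I saw instruction loss.

```python
def front_load(clauses, budget=120):
    """Order clauses by priority and flag those that land past the word budget.

    clauses: list of (priority, text) pairs, where 1 is the most critical.
    Returns the assembled prompt and the clauses at risk of being ignored.
    """
    ordered = sorted(clauses, key=lambda c: c[0])  # stable: ties keep original order
    parts, at_risk, used = [], [], 0
    for _priority, text in ordered:
        used += len(text.split())   # crude word count, not a real token count
        parts.append(text)
        if used > budget:
            at_risk.append(text)
    return ", ".join(parts), at_risk


clauses = [
    (3, "steam rising from vents"),
    (1, "a woman in a red raincoat walking through a neon-lit Tokyo alley at night"),
    (2, "handheld tracking shot, 35mm lens"),
]

# A tiny budget is used here only to make the flagging visible.
prompt, at_risk = front_load(clauses, budget=20)
print(prompt)
print(at_risk)  # ['steam rising from vents']
```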

3. Style Lock-In

When attempting radical mid-scene stylistic changes:

> Scene shifts from realism to anime style halfway through.

The model struggles.

It prefers stylistic continuity.

This suggests strong global latent stabilization — good for coherence, bad for stylized transitions.

4. Brand-Specific Control

For commercial creators:

Precise logo rendering and typography remain unstable under motion.

Even slight camera movement introduces glyph distortion.

For high-end product visualization, you’ll still need compositing pipelines.

So… Is the Hype Justified?

Partially.

Seedance 2.0 is not a revolution.

It’s a refinement.

What it improves:

– Temporal smoothness

– Identity stability

– Motion coherence

– Iteration predictability

What it doesn’t solve:

– Complex physics

– Long-form narrative continuity

– Perfect prompt fidelity

– Commercial-grade typography precision

If you expected a flawless cinematic replacement for production crews, you’ll be disappointed.

If you wanted a meaningful step forward in controllable generative motion — this is real progress.

Final Verdict: Production-Ready?

For:

– Short-form storytelling

– Social media ads

– Concept visualization

– Music video prototyping

– Mood-driven cinematic clips

Yes.

For:

– Feature-length sequences

– Precision brand work

– Physics-heavy action scenes

– Complex multi-character choreography

Not yet.

Seedance 2.0 doesn’t eliminate the gap between hype and reality.

But it narrows it.

And in AI video, narrowing the gap is often more meaningful than flashy demos.

If you’re a skeptical creator, the right question isn’t:

> “Is this magic?”

It’s:

> “Does this reduce friction in my pipeline?”

Seedance 2.0 does.

Just not universally.

And that’s the honest result after 3 days of pushing it past the marketing reel.

Frequently Asked Questions

Q: How does Seedance 2.0 compare to diffusion-based workflows in ComfyUI?

A: Seedance 2.0 offers stronger native temporal coherence and smoother motion out of the box, while ComfyUI diffusion pipelines provide more granular control via schedulers, ControlNet, and custom node graphs. For experimentation and precision control, ComfyUI still wins. For speed and production efficiency, Seedance 2.0 is more streamlined.

Q: Is Seedance 2.0 reliable for commercial brand work?

A: It can be used for concept ads and mood-driven visuals, but precise logo rendering, typography stability, and strict brand compliance remain weak under motion. For high-stakes brand work, compositing and post-production are still necessary.

Q: Does Seedance 2.0 maintain character identity over long sequences?

A: Identity consistency is strong within short clips (up to roughly 8 seconds). Beyond that range, gradual drift appears. For longer sequences, creators should segment scenes and maintain visual continuity in post.
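As a starting point for segmenting, here is a minimal sketch that splits a longer sequence into windows no longer than a chosen clip length. The 8-second default reflects where I started seeing drift; the function name is hypothetical, and in practice you would cut on shot boundaries rather than at fixed intervals.

```python
def segment_sequence(total_seconds, max_clip=8.0):
    """Split a sequence into (start, end) windows no longer than max_clip seconds."""
    if total_seconds <= 0 or max_clip <= 0:
        raise ValueError("durations must be positive")
    total = float(total_seconds)
    segments = []
    start = 0.0
    while start < total:
        end = min(start + max_clip, total)
        segments.append((start, end))
        start = end
    return segments


print(segment_sequence(20))  # [(0.0, 8.0), (8.0, 16.0), (16.0, 20.0)]
```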

Q: What is the biggest improvement in Seedance 2.0 over previous versions?

A: The most significant improvement is temporal smoothness and motion coherence. Frame-to-frame jitter is reduced, motion arcs feel more natural, and identity drift occurs less frequently within short clips.