Create 3D with HunyuanVideo AI for FREE – Full Workflow

The rise of HunyuanVideo has unlocked a new era for creators, developers, and 3D artists who want cinematic AI video and production-ready 3D assets without expensive software or paid subscriptions. With Tencent’s rapidly evolving ecosystem, including HunyuanVideo 1.5, FP8 acceleration, and Hunyuan 3D 3.0, it is now possible to move from text prompts to animated visuals and finally to usable 3D models, completely for free.

In this guide, you’ll learn a full end-to-end workflow for using HunyuanVideo to generate AI videos, extract depth and motion, and convert the outputs into production-ready 3D models. It is written to be useful whether you’re researching, testing, or deploying HunyuanVideo today.

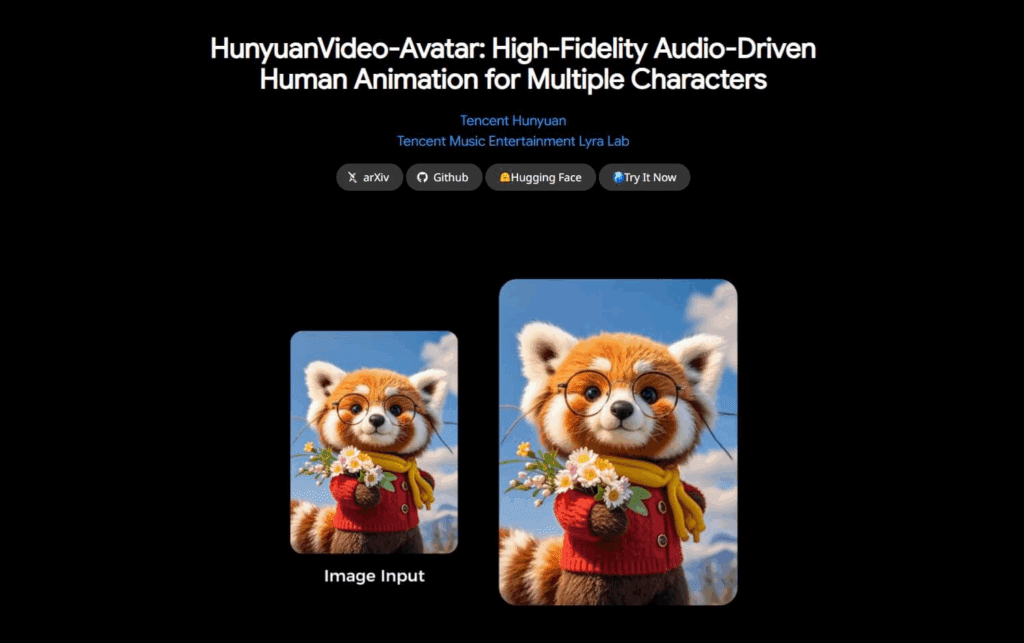

From AI Video to Production-Ready 3D Models (Hunyuan 3D 3.0)

Hunyuan 3D 3.0 represents a major leap in turning AI-generated video and images into usable 3D geometry. Unlike older pipelines that required multiple paid tools, this workflow leverages free AI video generation, depth extraction, and mesh conversion.

What Is Hunyuan 3D 3.0 and Why It Matters

Hunyuan 3D 3.0 is designed to bridge the gap between AI creativity and real-world production. Instead of stopping at visuals, it enables creators to:

- Generate realistic motion from AI video

- Extract spatial depth data

- Convert scenes into structured 3D meshes

This is especially valuable for game developers, virtual production teams, and 3D animators who want speed without sacrificing quality.

Step-by-Step Workflow: AI Video to 3D Model

The free workflow typically follows these stages:

Step 1 – Generate Cinematic Video with HunyuanVideo

Using HunyuanVideo, creators start with a detailed text prompt describing:

- Subject and environment

- Camera movement

- Lighting and mood

- Motion dynamics
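To keep those four ingredients from getting lost, it can help to assemble prompts from named fields. The sketch below is plain Python and does not call HunyuanVideo; `ShotPrompt` and `render` are hypothetical names, and the resulting string is simply what you would paste into whichever HunyuanVideo front end you use.

```python
from dataclasses import dataclass


@dataclass
class ShotPrompt:
    """Hypothetical container for the four prompt ingredients listed above."""
    subject_and_environment: str
    camera_movement: str
    lighting_and_mood: str
    motion_dynamics: str

    def render(self) -> str:
        # Join the non-empty parts into one prompt, removing stray whitespace
        # and trailing periods so sentences are not double-punctuated.
        parts = [
            self.subject_and_environment,
            self.camera_movement,
            self.lighting_and_mood,
            self.motion_dynamics,
        ]
        cleaned = []
        for part in parts:
            text = part.strip().rstrip(".").strip()
            if text:
                # Capitalize the first letter of each sentence.
                cleaned.append(text[0].upper() + text[1:])
        if not cleaned:
            return ""
        return ". ".join(cleaned) + "."


if __name__ == "__main__":
    prompt = ShotPrompt(
        "a weathered bronze robot in a rainy neon-lit alley",
        "slow dolly-in at eye level",
        "cool blue rim light, moody cyberpunk atmosphere",
        "rain falls steadily while the robot turns its head toward the camera",
    )
    print(prompt.render())
```

Keeping camera movement and motion dynamics as separate fields makes it easy to vary one while holding the others fixed when testing how stable the output is.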

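HunyuanVideo excels at maintaining temporal consistency, which is critical for later 3D conversion: if objects flicker or change shape between frames, the depth maps extracted from those frames will not line up.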
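One rough way to check temporal consistency before spending time on 3D conversion is to measure how much consecutive frames differ and flag sudden spikes. This is a minimal sketch under stated assumptions: frames are already decoded into grayscale values in [0, 1] (decoding is not shown), and `mean_abs_frame_diff` and `flag_flicker` are hypothetical helpers. Plain frame differencing also counts intended motion, so the heuristic only flags pairs that change far more than the clip's typical (median) frame-to-frame change.

```python
from statistics import median
from typing import List, Sequence

Frame = Sequence[Sequence[float]]  # rows of grayscale values in [0, 1]


def mean_abs_frame_diff(frames: List[Frame]) -> List[float]:
    """Mean absolute per-pixel difference for each consecutive frame pair."""
    diffs: List[float] = []
    for prev, curr in zip(frames, frames[1:]):
        total = 0.0
        count = 0
        for row_prev, row_curr in zip(prev, curr):
            for a, b in zip(row_prev, row_curr):
                total += abs(a - b)
                count += 1
        diffs.append(total / count if count else 0.0)
    return diffs


def flag_flicker(diffs: List[float], factor: float = 3.0) -> List[int]:
    """Indices i where the change from frame i to frame i + 1 exceeds
    `factor` times the clip's median change (a hypothetical heuristic)."""
    if not diffs:
        return []
    typical = median(diffs)
    return [i for i, d in enumerate(diffs) if d > factor * typical]
```

A clip with steady camera motion produces similar differences for every pair, while a sudden flash or shape change stands out as a spike worth inspecting or regenerating.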

Step 2 – Extract Depth and Motion Data

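Once the video is generated, a separate depth and motion estimation step (HunyuanVideo itself outputs only the video frames) typically produces:

- Depth maps, extracted frame by frame

- Motion vectors that capture camera and object movement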

This step transforms flat AI video into spatial data usable for 3D reconstruction.
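Motion vectors mix camera movement with object movement. A common simplification, assumed here rather than taken from any Hunyuan tool, is to treat the median vector as camera motion and anything that deviates strongly from it as a moving object. The sketch assumes flow vectors (dx, dy) in pixels have already been produced by an optical-flow estimator of your choice, that the background covers most of the frame, and that camera motion is roughly a 2D pan (rotation and zoom are not modeled). `split_camera_and_object_motion` is a hypothetical name.

```python
from statistics import median
from typing import List, Tuple

Vector = Tuple[float, float]  # (dx, dy) displacement in pixels


def split_camera_and_object_motion(
    flow: List[Vector], threshold: float = 1.0
) -> Tuple[Vector, List[int]]:
    """Estimate global camera motion as the median flow vector and return
    the indices of samples whose residual motion exceeds `threshold` pixels."""
    if not flow:
        return (0.0, 0.0), []
    cam_dx = median([dx for dx, _ in flow])
    cam_dy = median([dy for _, dy in flow])
    moving = [
        i
        for i, (dx, dy) in enumerate(flow)
        # Euclidean length of the motion left over after removing camera motion.
        if ((dx - cam_dx) ** 2 + (dy - cam_dy) ** 2) ** 0.5 > threshold
    ]
    return (cam_dx, cam_dy), moving
```

The median is used instead of the mean so that a few fast-moving objects do not drag the camera estimate toward their own motion.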

Step 3 – Convert Video to Mesh with Hunyuan 3D 3.0

Hunyuan 3D 3.0 processes the depth information to:

- Generate point clouds

- Convert them into meshes

- Preserve scale and proportions
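Hunyuan 3D 3.0's internal reconstruction method is not described in this guide, so the following is only a generic illustration of how a single depth map becomes a point cloud and then a mesh. It back-projects each pixel through a pinhole camera (the intrinsics `fx`, `fy`, `cx`, `cy` are assumed known or estimated) and connects neighboring pixels into triangles, skipping holes and large depth jumps so that separate objects are not stitched together. Because the points keep the depth map's units, scale and proportions carry through as long as the depth itself is metric. The function names are hypothetical.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
Face = Tuple[int, int, int]


def depth_to_points(
    depth: List[List[float]], fx: float, fy: float, cx: float, cy: float
) -> Tuple[List[Point], Dict[Tuple[int, int], int]]:
    """Back-project each pixel (u, v) with depth z > 0 through a pinhole camera.
    Returns the points and a map from pixel (u, v) to point index."""
    points: List[Point] = []
    index: Dict[Tuple[int, int], int] = {}
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:  # treat non-positive depth as missing
                continue
            index[(u, v)] = len(points)
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points, index


def grid_faces(
    depth: List[List[float]],
    index: Dict[Tuple[int, int], int],
    max_jump: float = 0.5,
) -> List[Face]:
    """Connect each 2x2 block of pixels into two triangles with consistent
    winding, skipping blocks with missing depth or a depth jump > max_jump."""
    faces: List[Face] = []
    for v in range(len(depth) - 1):
        for u in range(len(depth[v]) - 1):
            corners = [(u, v), (u + 1, v), (u, v + 1), (u + 1, v + 1)]
            if not all(k in index for k in corners):
                continue  # hole in the depth map
            zs = [depth[kv][ku] for ku, kv in corners]
            if max(zs) - min(zs) > max_jump:
                continue  # likely an object boundary; do not bridge it
            a, b, c, d = (index[k] for k in corners)
            faces.append((a, c, b))
            faces.append((b, c, d))
    return faces
```

Running this per frame gives one partial surface per view; merging views into a single clean model is the part a dedicated reconstruction tool handles.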

Optimization for Production Use

After conversion, models can be optimized by:

- Reducing polygon count

- Cleaning topology

- Exporting to formats like OBJ or GLB

This makes the output suitable for Unity, Unreal Engine, Blender, or WebGL.
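The first and last optimization steps can be illustrated without any 3D package. The sketch below uses vertex clustering, a simple and fairly crude polygon-reduction technique (it snaps vertices to a grid rather than preserving features the way quadric edge collapse decimation, available in MeshLab for example, does), and writes the result as Wavefront OBJ text. The function names are hypothetical, and GLB export, which needs a binary glTF writer, is not shown.

```python
import math
from typing import Dict, List, Set, Tuple

Point = Tuple[float, float, float]
Face = Tuple[int, int, int]


def cluster_decimate(
    points: List[Point], faces: List[Face], cell: float
) -> Tuple[List[Point], List[Face]]:
    """Vertex-clustering decimation: merge all vertices inside the same cubic
    cell of side `cell` into their average, then drop collapsed or duplicate faces."""
    cell_of: Dict[Tuple[int, int, int], int] = {}
    sums: List[List[float]] = []  # per cluster: [sum_x, sum_y, sum_z, count]
    remap: List[int] = []  # old vertex index -> cluster index
    for x, y, z in points:
        key = (math.floor(x / cell), math.floor(y / cell), math.floor(z / cell))
        if key not in cell_of:
            cell_of[key] = len(sums)
            sums.append([0.0, 0.0, 0.0, 0.0])
        i = cell_of[key]
        for k, val in enumerate((x, y, z, 1.0)):
            sums[i][k] += val
        remap.append(i)
    new_points = [(s[0] / s[3], s[1] / s[3], s[2] / s[3]) for s in sums]
    seen: Set[Tuple[int, ...]] = set()
    new_faces: List[Face] = []
    for a, b, c in faces:
        f = (remap[a], remap[b], remap[c])
        if len(set(f)) < 3:
            continue  # triangle collapsed to an edge or a point
        key = tuple(sorted(f))
        if key in seen:
            continue  # same triangle already emitted
        seen.add(key)
        new_faces.append(f)
    return new_points, new_faces


def to_obj(points: List[Point], faces: List[Face]) -> str:
    """Serialize to Wavefront OBJ text; OBJ face indices are 1-based."""
    lines = [f"v {x:.6f} {y:.6f} {z:.6f}" for x, y, z in points]
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in faces]
    return "\n".join(lines) + "\n"
```

A larger `cell` removes more triangles and more detail, so it is usually chosen relative to the model's overall size.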

Commercial and Transactional Use Cases

Hunyuan 3D 3.0 is a good fit for:

- Game asset prototyping

- Virtual product visualization

- Architectural pre-visualization

- NFT and metaverse content creation

For businesses, this means lower costs and faster iteration, while individual creators gain studio-level tools at zero cost.

New HunyuanVideo 1.5 FP8 Super-Fast AI Video Generation Compared to WAN 2.6 (Full Test & Tutorial)

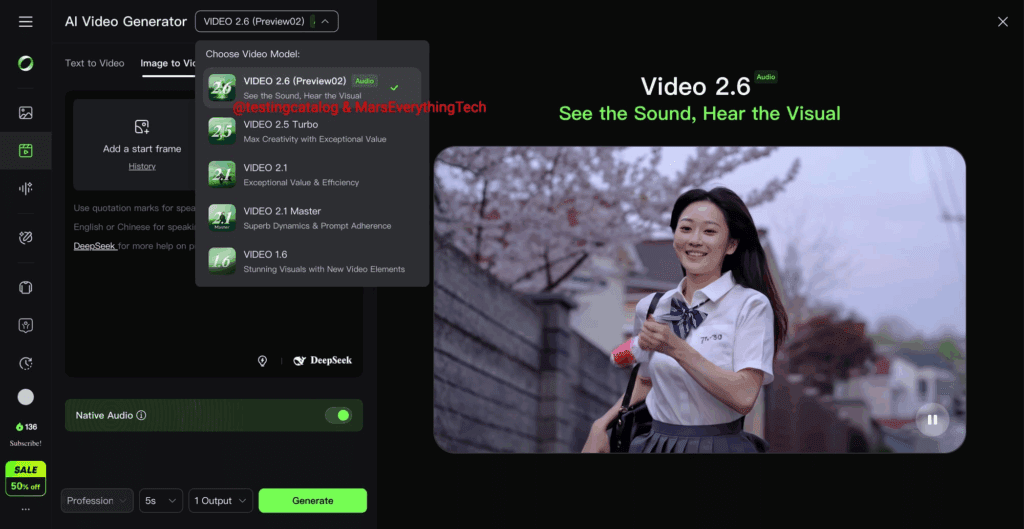

The release of HunyuanVideo 1.5 FP8 marks a performance breakthrough in AI video generation, especially when compared to competing models like WAN 2.6.

What Is HunyuanVideo 1.5 FP8

HunyuanVideo 1.5 FP8 introduces:

- FP8 precision for faster inference

- Reduced VRAM usage

- Significantly quicker render times

This makes high-quality AI video generation accessible even on mid-range GPUs.
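The VRAM saving is easy to estimate for the weights alone: FP8 stores one byte per parameter versus two for BF16/FP16. The parameter count below (8.3 billion) is an assumption based on the reported size of HunyuanVideo 1.5's main model; check the official model card for your exact checkpoint. Activations, the text encoder, and the VAE add to the total and are not included. `weight_memory_gb` is a hypothetical helper.

```python
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "fp8": 1}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for model weights only, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    params = 8.3e9  # assumed parameter count; verify against the model card
    for dtype in ("bf16", "fp8"):
        print(f"{dtype}: {weight_memory_gb(params, dtype):.1f} GB")
    # prints "bf16: 16.6 GB" then "fp8: 8.3 GB"
```

Halving weight memory is what lets the model fit on smaller cards; the speed gain additionally depends on the GPU supporting FP8 math natively.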

HunyuanVideo vs WAN 2.6: Speed and Quality Comparison

In side-by-side tests:

- HunyuanVideo 1.5 FP8 generates videos up to 2–3x faster

- Motion stability remains higher in complex scenes

- Lighting and cinematic depth look stronger than WAN 2.6's

While WAN 2.2 remains competitive, HunyuanVideo stands out for its speed-to-quality ratio.
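Speed claims like "2–3x faster" depend heavily on GPU, resolution, frame count, and step count, so it is worth re-measuring on your own machine with identical settings for both models. This is a minimal timing harness; the function names are hypothetical, and you would pass in your own wrappers around whichever pipelines you have installed.

```python
import statistics
import time
from typing import Callable


def median_runtime(fn: Callable[[], object], repeats: int = 3, warmup: int = 1) -> float:
    """Median wall-clock seconds over `repeats` runs, after `warmup` untimed runs
    (the warmup absorbs one-off costs such as model loading or kernel compilation)."""
    for _ in range(warmup):
        fn()
    times = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        times.append(time.perf_counter() - start)
    return statistics.median(times)


def speedup(baseline_seconds: float, candidate_seconds: float) -> float:
    """How many times faster the candidate is than the baseline (> 1 means faster)."""
    return baseline_seconds / candidate_seconds
```

For example, `speedup(120.0, 48.0)` returns 2.5. Make sure each wrapper returns only after the video is fully decoded or written; otherwise asynchronous GPU work can make a run look faster than it is.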

Full Tutorial – Generating Faster AI Video

To maximize FP8 performance:

- Enable FP8 mode in your HunyuanVideo setup

- Use concise but descriptive prompts

- Limit unnecessary scene changes

- Choose the output resolution before generating (lower resolutions render faster)

This approach enables rapid iteration, which is essential for production workflows.
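Resolution and clip length matter because a video diffusion transformer works on latent tokens, and full self-attention cost grows roughly with the square of the token count. The sketch below estimates token counts; the compression factors (4x temporal, 8x spatial, 2x2 patches) are assumptions borrowed from the original HunyuanVideo description and may differ for version 1.5, so treat the results as relative rather than exact. The function names are hypothetical.

```python
def latent_tokens(
    width: int,
    height: int,
    frames: int,
    spatial_ratio: int = 8,
    temporal_ratio: int = 4,
    patch: int = 2,
) -> int:
    """Approximate number of transformer tokens for one clip (assumed factors)."""
    # Causal video VAEs typically encode the first frame alone and then groups
    # of `temporal_ratio` frames, hence (frames - 1) // ratio + 1.
    latent_t = (frames - 1) // temporal_ratio + 1
    latent_h = height // (spatial_ratio * patch)
    latent_w = width // (spatial_ratio * patch)
    return latent_t * latent_h * latent_w


def relative_attention_cost(tokens_a: int, tokens_b: int) -> float:
    """Ratio of full self-attention cost, which scales with tokens squared."""
    return (tokens_a / tokens_b) ** 2
```

Under these assumptions, a 121-frame clip at 1280x720 yields 111,600 tokens versus 49,290 at 848x480, so attention alone is roughly 5x more expensive at 720p. That is why previewing at a lower resolution speeds up iteration.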

Creators searching for:

- “HunyuanVideo 1.5 tutorial”

- “FP8 AI video generation”

- “HunyuanVideo vs WAN 2.2”

will find that HunyuanVideo delivers faster previews, cleaner motion, and better scalability.

Why Create 3D with HunyuanVideo AI for Free

The true power of HunyuanVideo lies in how it connects:

- AI video generation

- 3D reconstruction

- Production-ready assets

All of this comes without licensing fees, which opens up advanced 3D workflows that were previously reserved for high-budget studios.

Conclusion

HunyuanVideo is no longer just an AI video generator; it is a complete creative pipeline. By combining HunyuanVideo 1.5 FP8 with Hunyuan 3D 3.0, creators can move from text prompts to cinematic AI video and finally to production-ready 3D models, entirely for free. Whether you’re experimenting, building commercial assets, or preparing for virtual production, this workflow represents one of the most powerful AI toolchains available today.

FAQs

What is HunyuanVideo used for?

HunyuanVideo is used for AI text-to-video generation, cinematic content creation, and as a foundation for 3D reconstruction workflows.

Can I really create 3D models for free with HunyuanVideo?

Yes. By combining HunyuanVideo with Hunyuan 3D 3.0, you can generate AI video and convert it into 3D models without paid software.

Is HunyuanVideo 1.5 better than WAN 2.2?

In speed and motion consistency, HunyuanVideo 1.5 FP8 outperforms WAN 2.2, especially on consumer hardware.

What hardware do I need for HunyuanVideo FP8?

A modern GPU with FP8 or mixed-precision support is recommended, but optimized settings can run on mid-range GPUs.
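Native FP8 matrix math on NVIDIA GPUs is available from the Ada Lovelace generation (compute capability 8.9, for example RTX 40-series cards) onward, including Hopper (9.0). On older cards, some frameworks can still store weights in FP8 and upcast them for computation, which saves VRAM but not compute time. The helper below is a hedged sketch: `has_native_fp8` and `detect_native_fp8` are hypothetical names, and the optional check relies on PyTorch's `torch.cuda.get_device_capability`.

```python
from typing import Optional, Tuple


def has_native_fp8(capability: Tuple[int, int]) -> bool:
    """True if an NVIDIA compute capability (major, minor) is 8.9 (Ada) or newer."""
    return tuple(capability) >= (8, 9)


def detect_native_fp8(device: int = 0) -> Optional[bool]:
    """Check the local GPU with PyTorch; returns None if PyTorch or CUDA is unavailable."""
    try:
        import torch  # optional dependency
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return has_native_fp8(torch.cuda.get_device_capability(device))


if __name__ == "__main__":
    print(detect_native_fp8())
```

A result of `False` does not rule out running FP8 checkpoints; it means you should expect memory savings rather than a large speedup.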

Who should use Hunyuan 3D 3.0?

Game developers, 3D artists, virtual production teams, and content creators looking for fast, low-cost asset generation.