How To Train Your Own Z-Image-Turbo LoRA in ComfyUI wan2.2

Training your own Z-Image-Turbo LoRA in ComfyUI wan2.2 can speed up convergence, give you stronger stylistic control, and improve consistency when you carry images into image-to-video workflows. Wan2.2's 14B models use a Mixture-of-Experts (MoE) architecture. ComfyUI workflows built around Wan2.2 can run LoRA training pipelines aimed at high-fidelity generation, animation workflows, and fast "turbo" inference.

This guide walks you through the entire Z-Image-Turbo LoRA training process in ComfyUI wan2.2. It combines best practices from real-world workflows, community-tested pipelines, and advanced optimization strategies.

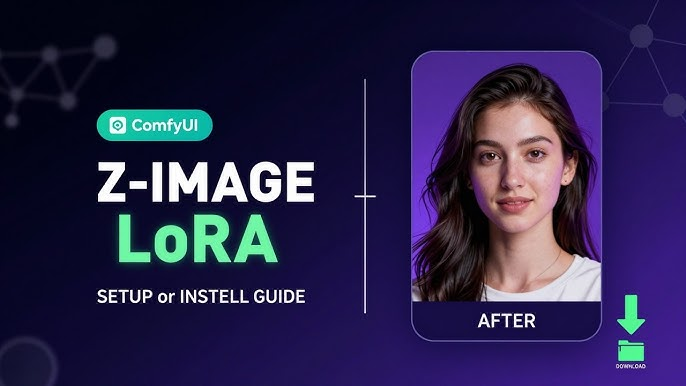

What Is Z-Image-Turbo LoRA in ComfyUI wan2.2

Z-Image-Turbo LoRA is a lightweight LoRA (low-rank adapter) fine-tuning approach. It is designed to improve image fidelity while keeping inference fast inside ComfyUI wan2.2 workflows.

Why Z-Image-Turbo LoRA Matters

- Faster convergence compared to standard LoRA

- Improved image-to-video and animate workflows

- Lower VRAM usage with higher output quality

- Well suited to wan2.2 video generation and Wan2.2 Animate workflows in ComfyUI

Increasing Detail and Generating 4K Images With Z Img Turbo

Step 1 – Load the Correct Base Model in ComfyUI wan2.2

Start by loading a Wan2.2 Image-compatible base model inside ComfyUI.

Best practices:

- Use Wan2.2 Image (not video-only checkpoints)

- Prefer FP16 or BF16 precision

- Update ComfyUI to a recent release that includes Wan2.2 support

This ensures Z Img Turbo operates with full feature support.

Step 2 – Configure the Z Img Turbo Node

Add the Z Img Turbo node to your ComfyUI graph.

Recommended Z Img Turbo Settings

- Turbo Mode: Enabled

- Detail Strength: Medium → High

- Noise Preservation: Enabled

- Texture Enhancement: On

These settings are critical for achieving high-frequency detail clarity, especially when scaling to 4K.

Step 3 – Generate a Strong Base Image (Pre-4K)

Before upscaling, generate a clean, high-quality base image.

Recommended base resolution:

- 1024×1024 or 1344×768

Prompt tips:

- Focus on structure and composition

- Avoid excessive style stacking

- Keep prompts concise and descriptive

A clean base image dramatically improves final 4K Z Img Turbo output quality.

Step 4 – Upscale to 4K Using Z Img Turbo

Now connect the Z Img Turbo output to your upscale path.

4K Resolution Targets

- 3840×2160 (4K UHD landscape)

- 2160×3840 (4K UHD portrait)

Enable:

- Progressive refinement

- Latent detail preservation

- Controlled denoise (low values work best)
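The resolution arithmetic behind this step can be sketched in Python. This is a minimal sketch and not part of any Z Img Turbo node. `plan_upscale` and `aspect_mismatch` are hypothetical helpers. Using the same scale factor at every stage is one assumed way to implement "progressive refinement". Snapping intermediate sizes to a multiple of 16 is an assumption about the model's latent grid, so check what your model and VAE actually require.

```python
def snap(value, multiple=16):
    """Round to the nearest multiple (assumed latent-grid constraint)."""
    return max(multiple, int(round(value / multiple)) * multiple)


def plan_upscale(base, target, stages=2, multiple=16):
    """Return (width, height) sizes for each refinement stage.

    Every stage scales by the same per-axis factor (a geometric progression).
    Intermediate sizes are snapped to `multiple`, and the final stage is the
    exact target, which should already be a valid size (3840 and 2160 are
    both multiples of 16).
    """
    if stages < 1:
        raise ValueError("stages must be at least 1")
    (bw, bh), (tw, th) = base, target
    fx, fy = tw / bw, th / bh
    plan = []
    for i in range(1, stages):
        t = i / stages  # fraction of the total (log-scale) upscale done so far
        plan.append((snap(bw * fx ** t, multiple), snap(bh * fy ** t, multiple)))
    plan.append((tw, th))
    return plan


def aspect_mismatch(base, target):
    """Relative difference between aspect ratios (0.0 means identical)."""
    target_ratio = target[0] / target[1]
    return abs(base[0] / base[1] - target_ratio) / target_ratio


print(plan_upscale((1344, 768), (3840, 2160), stages=2))
```

For a 1344×768 base, the per-axis factors are about 2.86 and 2.81. That base is close to 16:9 but not exact. A 1024×1024 base would need cropping or outpainting to reach either 4K target.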

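This is the step where diffusion-based upscaling in ComfyUI wan2.2 workflows can outperform traditional upscalers. Instead of only interpolating existing pixels, it regenerates fine detail at the higher resolution.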

Step 5 – Detail Enhancement Without Over-Sharpening

One of the biggest mistakes in 4K generation is over-processing.

To avoid this:

- Keep CFG values moderate

- Avoid aggressive sharpening nodes

- Let Z Img Turbo handle micro-detail reconstruction
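The classifier-free guidance (CFG) formula shows why moderate CFG values matter. The sketch below applies that formula to plain lists. The lists stand in for the model's conditional and unconditional predictions; real samplers do the same on tensors. At a scale of 1.0 the result equals the conditional prediction, which is also why some samplers can skip the unconditional pass at that value. Larger scales push further past it, which exaggerates contrast and edges.

```python
def apply_cfg(cond, uncond, cfg_scale):
    """Classifier-free guidance: uncond + cfg_scale * (cond - uncond).

    `cond` and `uncond` are stand-ins for the prompt-conditioned and
    unconditional model predictions at one sampling step.
    """
    return [u + cfg_scale * (c - u) for c, u in zip(cond, uncond)]


print(apply_cfg([1.0, 2.0], [0.0, 0.0], 1.0))  # same as cond
print(apply_cfg([1.0, 2.0], [0.0, 0.0], 3.0))  # pushed 3x away from uncond
```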

This results in cinematic-grade clarity instead of artificial sharpness.

Step 6 – Final Pass Optimization

Before saving the image:

- Inspect facial features and fine textures

- Run a light denoise pass if needed

- Ensure color balance remains natural

This final optimization ensures the image is production-ready for print, animation, or wan2.2 video generation pipelines.

ComfyUI wan2.2 Training Requirements

Before training, make sure your system meets the requirements below so that ComfyUI wan2.2 workflows run smoothly.

Hardware Requirements

- GPU: RTX 3090 / 4090 (24GB VRAM recommended)

- Minimum: 16GB VRAM with gradient checkpointing

- RAM: 32GB+

- Storage: NVMe SSD (datasets load faster)

Software Requirements

- A recent ComfyUI build with Wan2.2 support

- Python 3.10+

- Wan2.2 base model (5B or 14B)

- Z-Image-Turbo LoRA training nodes
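A quick weight-memory estimate explains the 24GB VRAM recommendation and the 5B model option. This sketch uses the 5B and 14B figures above. It counts only the bytes needed to hold the base model's weights at a given precision. Activations, the text encoder, the VAE, and optimizer state for the LoRA parameters all add to the real total.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}


def weight_memory_gib(num_params, precision="bf16"):
    """Memory for the model weights alone, in GiB (2**30 bytes).

    Ignores activations, text encoder, VAE, LoRA optimizer state, and
    framework overhead, so actual VRAM use during training is higher.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1024**3


for name, params in [("Wan2.2 5B", 5e9), ("Wan2.2 14B", 14e9)]:
    print(f"{name}: {weight_memory_gib(params, 'bf16'):.1f} GiB in BF16")
```

At BF16, 14B parameters need about 26 GiB for the weights alone, which is more than a 24GB card holds. That is why offloading, lower-precision weights, or the 5B model come into play. The 5B model needs roughly 9.3 GiB.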

Dataset Preparation for Z-Image-Turbo LoRA

Image Dataset Guidelines

- 30–200 high-quality images

- Resolution: 512×512 or 768×768

- Consistent subject framing

- Avoid heavy compression artifacts
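A small pre-flight check can catch dataset problems before a long training run. This is a hypothetical helper, not a feature of any ComfyUI node. It works on (width, height) pairs and flags three things:

- image counts outside the 30–200 range
- short sides under 512 px
- very elongated images that break consistent framing (the 2:1 `max_aspect` limit is an added assumption)

```python
def check_dataset(sizes, min_images=30, max_images=200, min_side=512, max_aspect=2.0):
    """Return human-readable problems; an empty list means nothing was flagged.

    `sizes` is a list of (width, height) pairs. The thresholds mirror the
    guidelines above, except `max_aspect`, which is an assumed limit.
    """
    problems = []
    if not min_images <= len(sizes) <= max_images:
        problems.append(f"{len(sizes)} images; expected {min_images}-{max_images}")
    for i, (w, h) in enumerate(sizes):
        short, long = min(w, h), max(w, h)
        if short < min_side:
            problems.append(f"image {i}: {w}x{h} is under {min_side}px on its short side")
        if long / short > max_aspect:
            problems.append(f"image {i}: {w}x{h} is more elongated than {max_aspect}:1")
    return problems


print(check_dataset([(768, 768)] * 39 + [(400, 600)]))
```

To collect the sizes from disk, you could use Pillow, whose `Image.open(path).size` returns a `(width, height)` tuple.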

Captioning Best Practices

- Use short, descriptive captions

- Include stylistic descriptors

- Avoid over-tagging

- Maintain consistent phrasing
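The captioning rules above can be enforced with a short script. This sketch makes two assumptions:

- Your training nodes read one caption per image from a same-named `.txt` file next to it. This is a common convention among LoRA trainers, but check what yours expect.
- `zimg_person` is a hypothetical trigger word.

The script lowercases and de-duplicates comma-separated tags. It caps their number to avoid over-tagging and always puts the trigger first, which keeps phrasing consistent.

```python
from pathlib import Path

IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".webp"}


def normalize_caption(text, trigger, max_tags=12):
    """Trigger first, then unique lowercase tags, capped at max_tags."""
    trigger = trigger.strip().lower()
    tags = []
    for tag in (t.strip().lower() for t in text.split(",")):
        if tag and tag != trigger and tag not in tags:
            tags.append(tag)
    return ", ".join([trigger] + tags[:max_tags])


def write_captions(folder, captions, trigger):
    """Write <image stem>.txt next to each image that has a caption.

    `captions` maps an image's file stem (name without extension) to raw
    caption text. Returns the names of the caption files written.
    """
    written = []
    for image in sorted(Path(folder).iterdir()):
        if image.suffix.lower() in IMAGE_EXTS and image.stem in captions:
            caption_file = image.with_suffix(".txt")
            caption_file.write_text(
                normalize_caption(captions[image.stem], trigger), encoding="utf-8"
            )
            written.append(caption_file.name)
    return written


print(normalize_caption("Red Jacket, portrait, red jacket, zimg_person", "zimg_person"))
```

For example, if `dataset/img_001.png` exists, `write_captions("dataset", {"img_001": "smiling, studio light"}, "zimg_person")` writes `dataset/img_001.txt` containing `zimg_person, smiling, studio light`.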

Clear, consistent captions make training more stable and make the finished LoRA easier to prompt.

Setting Up Z-Image-Turbo LoRA Training in ComfyUI wan2.2

Installing Required Custom Nodes

- LoRA Training Nodes

- Wan2.2 Optimized Sampler Nodes

- Dataset Loader Nodes

Base Model Selection

Choose the Wan2.2 Image model, not the video-only checkpoint, so the LoRA stays compatible with the image workflows in this guide.

Z-Image-Turbo LoRA Training Configuration

Core Training Parameters

Recommended Settings

- Learning Rate: 1e-4

- Batch Size: 1–2

- Epochs: 10–20

- Rank (dim): 8–16

- Alpha: Match rank value
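The recommended values can be captured in a small config object that also makes two relationships explicit. This is a trainer-agnostic sketch, not the schema of any particular training node.

- **Scale:** in the standard LoRA formulation, the low-rank update is scaled by alpha / rank, so matching alpha to rank keeps that factor at 1.0.
- **Steps:** the number of optimizer steps follows from dataset size, batch size, and epochs.

The `repeats` parameter is an assumption modeled on trainers that repeat each image within an epoch. Leave it at 1 if yours has no such option.

```python
import math
from dataclasses import dataclass


@dataclass
class LoRATrainingConfig:
    """Starting values from the list above; field names are illustrative."""
    learning_rate: float = 1e-4
    batch_size: int = 1
    epochs: int = 15        # middle of the 10-20 range
    rank: int = 16
    alpha: float = 16.0     # matched to rank

    @property
    def scale(self) -> float:
        # The LoRA update (up @ down) is multiplied by alpha / rank.
        # Matching alpha to rank keeps this factor at 1.0.
        return self.alpha / self.rank

    def total_steps(self, num_images: int, repeats: int = 1) -> int:
        # One optimizer step per batch; a final partial batch still counts.
        steps_per_epoch = math.ceil(num_images * repeats / self.batch_size)
        return steps_per_epoch * self.epochs


cfg = LoRATrainingConfig(batch_size=2)
print(cfg.scale, cfg.total_steps(50))
```

For example, 50 images at batch size 2 for 15 epochs gives 25 steps per epoch and 375 steps in total.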

Optimizer Configuration

- Optimizer: AdamW

- Scheduler: Cosine

- Precision: FP16 or BF16
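The cosine scheduler lowers the learning rate along half a cosine wave: it starts at the base rate and reaches a minimum at the end of training. The sketch below computes that schedule in plain Python. The optional linear warmup is an addition not mentioned above; leave `warmup_steps` at 0 to skip it. In PyTorch, combining `torch.optim.AdamW` with `torch.optim.lr_scheduler.CosineAnnealingLR` produces the same cosine curve (without warmup).

```python
import math


def cosine_lr(step, total_steps, base_lr=1e-4, min_lr=0.0, warmup_steps=0):
    """Learning rate at `step` under cosine annealing, with optional linear warmup."""
    if warmup_steps and step < warmup_steps:
        # Ramp linearly up to base_lr over the warmup steps.
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


for step in (0, 25, 50, 75, 100):
    print(step, cosine_lr(step, total_steps=100))
```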

Memory Optimization

- Enable gradient checkpointing

- Use VRAM offloading

- Activate xFormers (if supported)

These settings reduce VRAM pressure and help keep training stable on consumer GPUs.

Training Workflow in ComfyUI wan2.2

Step-by-Step Training Flow

- Load Wan2.2 base image model

- Attach Z-Image-Turbo LoRA node

- Load dataset and captions

- Configure training parameters

- Start training loop

- Monitor loss and convergence

Monitoring Training Progress

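- Loss should trend downward over time. Per-step diffusion loss is noisy, so judge the overall curve rather than individual steps.

- Watch for sudden spikes, which usually signal instability (for example, a learning rate that is too high). Overfitting shows up differently: outputs start copying the training images.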

- Save checkpoints every epoch
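The monitoring advice can be turned into two small checks on a list of logged loss values. This is a hypothetical sketch, not part of any ComfyUI node.

- `smoothed` applies an exponential moving average so the downward trend is visible through per-step noise.
- `find_loss_spikes` flags steps whose loss jumps above a multiple of the recent average.

The 20-step window and the 2× factor are assumed starting points, not established thresholds.

```python
def smoothed(losses, beta=0.9):
    """Exponential moving average of the loss history."""
    out, avg = [], None
    for x in losses:
        avg = x if avg is None else beta * avg + (1 - beta) * x
        out.append(avg)
    return out


def find_loss_spikes(losses, window=20, factor=2.0):
    """Return step indices whose loss exceeds `factor` times the mean of
    the previous `window` steps."""
    spikes = []
    for i in range(window, len(losses)):
        recent_mean = sum(losses[i - window:i]) / window
        if losses[i] > factor * recent_mean:
            spikes.append(i)
    return spikes


history = [0.1] * 30 + [0.5] + [0.1] * 5
print(find_loss_spikes(history))
```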

Testing Your Z-Image-Turbo LoRA

Image Validation

- Test prompt consistency

- Compare base vs LoRA output

- Evaluate lighting, structure, and style
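A fixed-seed grid keeps the base-versus-LoRA comparison fair. Every prompt and seed is rendered once per LoRA strength, so paired images differ only in the LoRA. This hypothetical helper just builds the list of runs. In ComfyUI, the strength corresponds to the `strength_model` input of the Load LoRA (`LoraLoader`) node, and the seed to the KSampler's `seed` input.

```python
from itertools import product


def comparison_runs(prompts, seeds, strengths=(0.0, 1.0)):
    """Every prompt x seed combination at every LoRA strength.

    Strength 0.0 is the base model; 1.0 is the LoRA at full strength.
    """
    return [
        {"prompt": p, "seed": s, "lora_strength": w}
        for p, s, w in product(prompts, seeds, strengths)
    ]


for run in comparison_runs(["a lighthouse at dusk"], [1, 2]):
    print(run)
```

Adding an intermediate strength such as 0.5 to `strengths` also shows how gradually the LoRA's effect builds.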

Video & Animate Testing

Use the trained LoRA in:

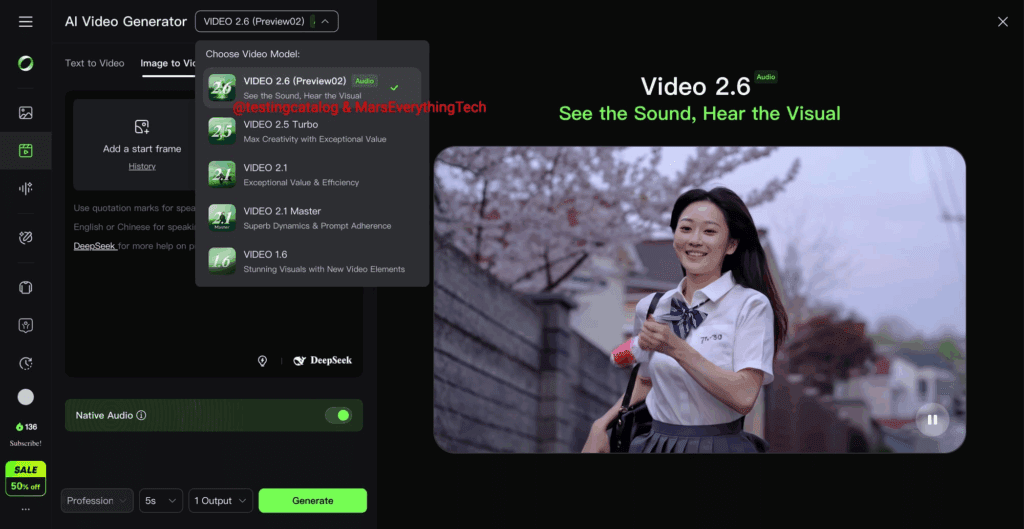

- wan2.2 video generation

- wan2.2 animate comfyui

- image-to-video workflows

This is where Z-Image-Turbo truly shines.

Advanced Optimization Techniques

Improving Turbo Inference Speed

- Merge the LoRA into the model weights at load time instead of applying it separately at every step

- Use lower CFG values

- Reduce sampler steps
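Merging folds the low-rank update into the base weights once, so each sampling step costs the same as running the base model alone. The sketch below shows the arithmetic on tiny nested lists, using the standard LoRA formulation W' = W + strength × (alpha / rank) × (up · down). The `lora_down`/`lora_up` names follow a common naming convention for LoRA weight files. Real implementations apply this per layer to tensors.

```python
def matmul(a, b):
    """Plain-Python matrix product of two nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(weight, lora_down, lora_up, alpha, strength=1.0):
    """Return W + strength * (alpha / rank) * (lora_up @ lora_down).

    weight:    d_out x d_in base matrix
    lora_down: rank x d_in
    lora_up:   d_out x rank
    """
    rank = len(lora_down)
    scale = strength * alpha / rank
    delta = matmul(lora_up, lora_down)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(weight, delta)]


W = [[1.0, 0.0], [0.0, 1.0]]
print(merge_lora(W, lora_down=[[1.0, 2.0]], lora_up=[[1.0], [0.0]], alpha=1.0))
```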

Prompt Engineering Tips

- Keep prompts concise

- Place LoRA trigger early

- Avoid conflicting style tokens
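These three tips can be applied mechanically when prompts are assembled in a script. In this sketch, `zimg_style` is a hypothetical trigger word. The `CONFLICTS` groups are illustrative examples of style tokens that pull in different directions. The helper puts the trigger first, keeps the prompt short, and drops any style that conflicts with one already chosen.

```python
# Illustrative groups of mutually conflicting style tokens (assumed, not exhaustive).
CONFLICTS = [
    {"photorealistic", "anime", "watercolor", "pixel art"},
    {"high key", "low key"},
]


def build_prompt(trigger, subject, styles=()):
    """Trigger first, then subject, then at most one style per conflict group."""
    chosen, used_groups = [], set()
    for style in styles:
        group = next((i for i, g in enumerate(CONFLICTS) if style in g), None)
        if group is not None:
            if group in used_groups:
                continue  # conflicts with a style already chosen
            used_groups.add(group)
        if style not in chosen:
            chosen.append(style)
    return ", ".join([trigger, subject, *chosen])


print(build_prompt("zimg_style", "a lighthouse at dusk",
                   ["photorealistic", "anime", "soft light"]))
```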

Common Errors and Troubleshooting

Training Instability

- Lower learning rate

- Increase dataset size

- Reduce LoRA rank

Blurry Outputs

- Improve dataset quality

- Increase training epochs

- Validate captions

VRAM Crashes

- Enable CPU offload

- Reduce batch size

- Switch to the 5B Wan2.2 model

Why Train Z-Image-Turbo LoRA Instead of Standard LoRA

| Feature | Z-Image-Turbo LoRA | Standard LoRA |
| --- | --- | --- |
| Training Speed | Faster | Slower |
| VRAM Usage | Lower | Higher |
| Inference Speed | Turbo-optimized | Normal |
| Wan2.2 Compatibility | Native | Partial |

Future Use Cases for Z-Image-Turbo LoRA in ComfyUI wan2.2

- Personalized character animation

- Stylized video generation

- Audio-driven video workflows

- Consistent brand visuals

- Production-grade pipelines

Frequently Asked Questions

What is Z-Image-Turbo LoRA in ComfyUI wan2.2?

Z-Image-Turbo LoRA is a fast, lightweight LoRA fine-tuning method optimized for Wan2.2 image and video workflows.

How long does it take to train a Z-Image-Turbo LoRA?

Typically 30–90 minutes depending on dataset size and GPU.

Can I use Z-Image-Turbo LoRA for video generation?

Yes, it works exceptionally well with wan2.2 video generation and animation workflows.

What LoRA rank works best for Wan2.2?

Ranks of 8 to 16 usually offer the best balance between quality and performance.

Is Z-Image-Turbo LoRA compatible with GGUF models?

Yes, it can be used in ComfyUI wan2.2 setups that run GGUF-quantized models for inference.