Complete UGC Ad Workflow: From Product Photo to High-Converting AI Video (Beginner-Friendly Technical Guide)

Here’s the exact workflow I use to create a UGC ad from start to finish:

From a single product photo to a polished, scroll-stopping AI-generated UGC ad — without bouncing randomly between tools or breaking visual consistency halfway through.

If you’ve ever felt like your AI ad process is chaotic — one tool for images, another for video, another for voice, nothing matching — this guide fixes that. We’re building a connected, end-to-end system designed specifically for beginners who want clarity and repeatability.

1. The AI UGC Tool Stack: How the End-to-End System Connects

The biggest problem in AI ad creation isn’t creativity.

It’s fragmentation.

So here’s the clean stack I recommend and how each component connects:

Core Workflow Stack

1. Product Image → ComfyUI (Stable Diffusion / SDXL)

Used for product enhancement, scene generation, and controlled variations.

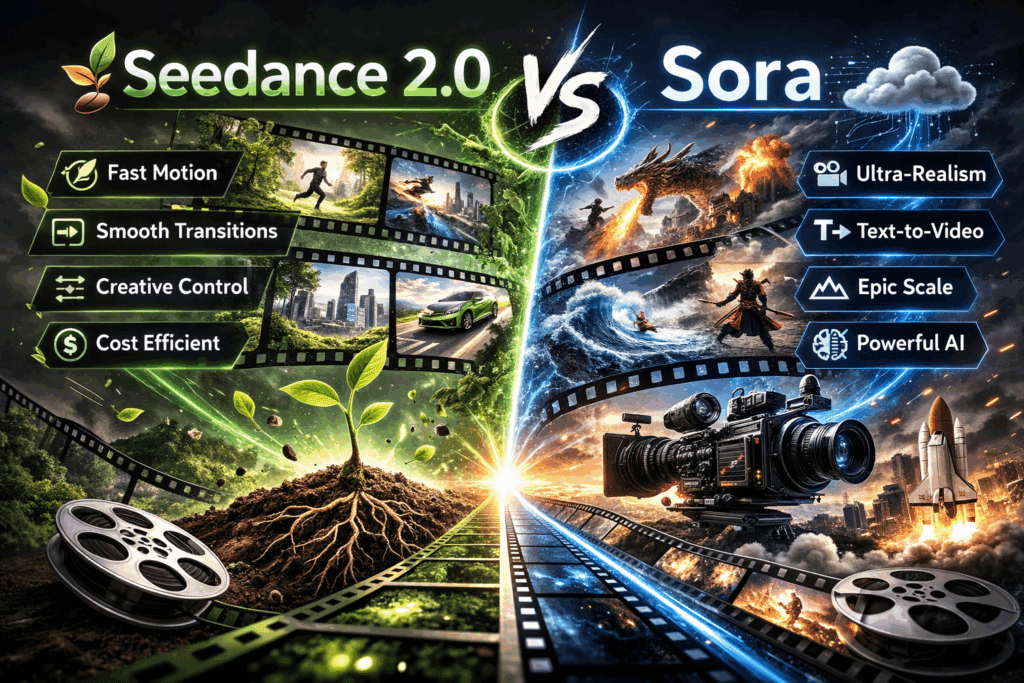

2. Image-to-Video → Runway Gen-3 / Kling / Sora

Used for motion generation, camera movement, and realism.

3. Avatar or UGC Simulation → Runway or Kling

Optional: AI spokesperson or lifestyle simulation.

4. Voiceover → ElevenLabs / Play.ht

Natural-sounding UGC-style voice.

5. Editing & Assembly → CapCut / Premiere Pro

Final pacing, captions, hooks, and export.

Why This System Works

This stack works because it maintains:

- Latent consistency (your product doesn’t morph between shots)

- Seed parity (reusing the same seed, checkpoint, and settings makes outputs repeatable)

- Style continuity across frames

- Structured handoff between tools

Instead of prompting randomly, we treat this like a pipeline.

Image → Latent refinement → Motion synthesis → Audio sync → Edit → Export.

That’s your visual engine.

2. Step-by-Step: From Product Shot to Polished UGC Ad Export

Let’s break this into a real production roadmap.

Step 1: Clean and Enhance the Product Photo (ComfyUI)

Start with your raw product image.

Inside ComfyUI (SDXL):

A. Background Cleanup

- Use segmentation or remove background.

- Replace with a neutral studio backdrop.

B. Lighting Normalization

- Use a relighting node or prompt-based correction.

- Avoid harsh shadows — they break during animation.

C. Lock the Product Identity

This is critical.

Use:

- Fixed seed value

- Same checkpoint model

- Controlled denoise strength (0.25–0.45 for refinements)

Why?

Because high denoise (>0.6) alters structure and kills product consistency.

You want detail enhancement — not redesign.
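If you like keeping settings in a file rather than in your head, here is a minimal sketch of a settings record that flags risky denoise values. The class name, checkpoint file name, and seed are placeholders I made up for illustration. Only the 0.25–0.45 and 0.6 thresholds come from the guidance above.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class RefineSettings:
    """Hypothetical record of the settings that lock product identity."""
    checkpoint: str  # checkpoint file name (placeholder value below)
    seed: int        # fixed seed reused for every refinement pass
    denoise: float   # denoise strength used for the refinement

    def check(self) -> List[str]:
        """Return human-readable warnings; an empty list means the settings look safe."""
        warnings = []
        if self.denoise > 0.6:
            warnings.append("denoise above 0.6 is likely to alter product structure")
        elif not 0.25 <= self.denoise <= 0.45:
            warnings.append("denoise outside the 0.25-0.45 refinement range")
        return warnings


settings = RefineSettings(checkpoint="sdxl_base.safetensors", seed=123456, denoise=0.35)
print(settings.check())
```

Because the record is frozen, you can’t change the seed by accident halfway through a session.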

Step 2: Generate Lifestyle Variations (Controlled Scene Expansion)

Now we place the product in context.

Use:

- SDXL with ControlNet (Depth or OpenPose if needed)

- Low CFG (4–6) for realism

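- Euler a sampler for natural texture transitions

Why Euler a?

It is an ancestral sampler, so it adds fresh noise at each step. In lifestyle scenes that tends to give slightly more organic texture that feels less “plastic” than DPM++ samplers, though it is worth comparing both on your own images.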

You’re creating:

- Bathroom counter scene

- Bedroom nightstand scene

- Car interior scene

Each scene uses:

- Same seed and checkpoint as the product render

- Similar lighting temperature

- Consistent lens description (e.g., 35mm cinematic)

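This helps preserve cross-scene brand consistency. A shared seed alone will not keep the product identical once the prompt changes, so keep working from the cleaned product image at low denoise.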

Export as high-resolution PNG frames.
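One way to keep the three scenes aligned is to build every prompt from the same shared settings. This is a rough sketch, not a ComfyUI API call: the dictionary keys, the lighting phrase, the product description, and the seed are assumptions for illustration. As far as I know, "euler_ancestral" is the name ComfyUI’s sampler dropdown uses for Euler a.

```python
# Shared settings reused for every scene (values are illustrative).
BASE = {"seed": 123456, "cfg": 5.0, "sampler": "euler_ancestral"}
LENS = "35mm cinematic"
LIGHTING = "soft warm lighting"  # assumed phrase; keep it the same in every prompt

# Scene name -> how the product is placed in that scene.
SCENES = {
    "bathroom counter": "on a bathroom counter",
    "bedroom nightstand": "on a bedroom nightstand",
    "car interior": "inside a car interior",
}


def build_scene_jobs(product: str) -> list:
    """Return one job per scene; only the placement text differs between jobs."""
    jobs = []
    for scene, placement in SCENES.items():
        prompt = f"{product} {placement}, {LIGHTING}, {LENS}, photorealistic"
        jobs.append({**BASE, "scene": scene, "prompt": prompt})
    return jobs


jobs = build_scene_jobs("a white skincare bottle")
for job in jobs:
    print(job["scene"], "->", job["prompt"])
```

You would paste each prompt and the shared values into your ComfyUI workflow by hand, or feed them to whatever automation you already use.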

Step 3: Animate the Scene (Runway / Kling / Sora)

Now we introduce motion.

Upload your still image to:

- Runway Gen-3 (most accessible)

- Kling (strong physics realism)

- Sora (when available)

Prompt Structure for Image-to-Video

Use this structure:

“Handheld UGC-style video of a woman holding this product in a softly lit bathroom, slight natural camera sway, shallow depth of field, realistic skin texture, subtle movement, no distortion.”

Key parameters to control:

- Motion intensity: Low to medium

- Camera movement: Subtle handheld

- Duration: 4–6 seconds

Avoid:

- Extreme motion

- Rapid zoom

- Fast pans

Why?

Because image-to-video models struggle with temporal coherence when motion amplitude is high.

That’s how products start melting.
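The prompt structure and limits above can be captured in a small helper so every clip request follows the same rules. This is only a sketch: the function name and the request fields are hypothetical and do not match any specific tool’s API. Runway, Kling, and Sora each expose these controls in their own way.

```python
ALLOWED_MOTION = {"low", "medium"}


def build_video_request(subject: str, setting: str, motion: str = "low", duration_s: int = 5) -> dict:
    """Build a prompt plus settings, rejecting values outside the guide's limits."""
    if motion not in ALLOWED_MOTION:
        raise ValueError(f"motion must be one of {sorted(ALLOWED_MOTION)}, got {motion!r}")
    if not 4 <= duration_s <= 6:
        raise ValueError("duration should stay between 4 and 6 seconds")
    prompt = (
        f"Handheld UGC-style video of {subject} in {setting}, "
        "slight natural camera sway, shallow depth of field, "
        "realistic skin texture, subtle movement, no distortion."
    )
    return {"prompt": prompt, "motion": motion, "duration_s": duration_s}


request = build_video_request("a woman holding this product", "a softly lit bathroom")
print(request["prompt"])
```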

Step 4: Maintain Temporal Consistency

This is where most beginners fail.

AI video models can lose structure across frames.

To reduce drift:

- Use shorter clips (4–6 sec)

- Avoid drastic pose shifts

- Keep the product centered in the frame

- Avoid occluding the product

If your tool allows:

- Lower motion guidance strength

- Increase structure preservation

Think of it as protecting the latent representation across time.

Step 5: Generate UGC Voiceover

Use ElevenLabs (Play.ht from the stack above works too).

Script structure:

Hook (0–3 sec)

Problem (3–7 sec)

Solution (7–15 sec)

CTA (15–20 sec)

Example:

“I did not expect this to work… but this completely fixed my morning routine.”

Keep tone:

- Conversational

- Slight imperfections

- Natural pacing

Avoid overly polished ad voice.

You want believable UGC.
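To check that a script actually fits the timing structure above, you can turn each time window into a rough word budget. This sketch assumes a conversational pace of about 2.5 words per second, which is my assumption and not a figure from any voice tool. Apart from the hook, the script lines are made-up placeholders.

```python
# (name, start second, end second) taken from the script structure above.
SEGMENTS = [("Hook", 0, 3), ("Problem", 3, 7), ("Solution", 7, 15), ("CTA", 15, 20)]
WORDS_PER_SECOND = 2.5  # assumed speaking rate; adjust to your chosen voice


def word_budget() -> dict:
    """Approximate number of words that fit in each segment."""
    return {name: int((end - start) * WORDS_PER_SECOND) for name, start, end in SEGMENTS}


def over_budget(script: dict) -> list:
    """Return the names of segments whose text has more words than the budget."""
    budget = word_budget()
    return [name for name, text in script.items() if len(text.split()) > budget[name]]


script = {
    "Hook": "I did not expect this to work",
    "Problem": "My mornings were always rushed and messy",
    "Solution": "This fixed my whole routine in under a minute",
    "CTA": "Try it yourself, link below",
}
print(word_budget())
print(over_budget(script))
```

If a segment shows up in the over-budget list, trim the line rather than speeding up the voice.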

Step 6: Edit for Retention (CapCut or Premiere)

Now we assemble.

Editing Checklist

- Cut every 2–3 seconds

- Add dynamic captions

- Add micro zooms (105–110%)

- Insert subtle whoosh transitions

- Keep background music well under the voice (below about -28 LUFS)

Retention trick:

Add pattern interrupts every 5 seconds:

- Angle change

- Caption style shift

- Sound effect

AI video gives you visuals.

Editing gives you conversions.
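Before opening the editor, it can help to list where cuts and pattern interrupts should land. The sketch below assumes a 20-second ad with a cut every 2.5 seconds (the middle of the 2–3 second range) and an interrupt every 5 seconds. The function name and defaults are illustrative.

```python
def marks(duration_s: float, step_s: float) -> list:
    """Timestamps at every multiple of step_s that falls before the end of the ad."""
    out = []
    n = 1
    while n * step_s < duration_s:
        out.append(n * step_s)
        n += 1
    return out


def edit_markers(duration_s: float = 20.0, cut_every_s: float = 2.5, interrupt_every_s: float = 5.0) -> dict:
    """Planned cut points and pattern-interrupt points, in seconds."""
    return {
        "cuts": marks(duration_s, cut_every_s),
        "interrupts": marks(duration_s, interrupt_every_s),
    }


plan = edit_markers()
print(plan["cuts"])
print(plan["interrupts"])
```

Treat the timestamps as guides. Nudge each cut to the nearest natural pause in the voiceover.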

Step 7: Export Settings for Ads

For Meta / TikTok:

- 1080×1920 (9:16)

- H.264

- High bitrate (15–20 Mbps)

- AAC audio 320kbps

Platforms re-encode every upload, so don’t hand them a file that is already low bitrate.

Compressing a low-bitrate source a second time destroys AI-generated micro-texture and makes the footage look fake.
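If you export from the command line instead of CapCut or Premiere, the settings above map onto an ffmpeg command roughly like the one this sketch builds. The file names are placeholders, and 18M is just a value inside the 15–20 Mbps range. The scale filter assumes your source is already 9:16, otherwise it will stretch the picture.

```python
def ffmpeg_export_args(src: str, dst: str, video_bitrate: str = "18M") -> list:
    """Build an ffmpeg argument list for a 1080x1920 H.264 + AAC export."""
    return [
        "ffmpeg", "-i", src,
        "-vf", "scale=1080:1920",      # 9:16 vertical frame
        "-c:v", "libx264",             # H.264 video
        "-b:v", video_bitrate,         # target video bitrate
        "-pix_fmt", "yuv420p",         # widely compatible pixel format
        "-c:a", "aac", "-b:a", "320k", # AAC audio at 320 kbps
        "-movflags", "+faststart",     # put the index at the start of the MP4
        dst,
    ]


args = ffmpeg_export_args("ad_edit.mov", "ad_final.mp4")
print(" ".join(args))
```

You can pass the list to subprocess.run(args, check=True) on a machine that has ffmpeg installed.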

3. Workflow Killers: Common Mistakes and How to Prevent Them

Let’s eliminate the biggest issues beginners face.

Mistake 1: Changing Seeds Between Shots

Result:

Product subtly morphs.

Logo shifts.

Edges change.

Fix:

Document your seed values.

Treat them like brand assets.
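A plain JSON log is enough to document seeds. Here is a minimal sketch. The shot names, seed, and checkpoint file name are placeholders, and the field names are my own choice rather than any tool’s format.

```python
import json


def record_shot(log: dict, shot: str, seed: int, checkpoint: str, denoise: float) -> dict:
    """Add one shot's settings to the log, refusing to overwrite an existing entry."""
    if shot in log:
        raise KeyError(f"shot {shot!r} is already logged; pick a new name instead of overwriting")
    log[shot] = {"seed": seed, "checkpoint": checkpoint, "denoise": denoise}
    return log


log = {}
record_shot(log, "bathroom_counter", 123456, "sdxl_base.safetensors", 0.35)
record_shot(log, "car_interior", 123456, "sdxl_base.safetensors", 0.35)

# Serialize the log; write this text to a file next to your project assets.
text = json.dumps(log, indent=2, sort_keys=True)
print(text)
```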

Mistake 2: Overusing High CFG Scale

High CFG (>9) creates:

- Over-sharpening

- Plastic skin

- Unreal lighting

Keep CFG moderate (4–7) for realism.

Mistake 3: Excessive Motion in Image-to-Video

Too much camera movement causes:

- Object warping

- Hand distortion

- Background flicker

Keep motion subtle.

UGC works because it feels natural — not cinematic.

Mistake 4: Ignoring Latent Consistency

If you heavily edit the image before animation, for example by:

- Changing the lighting drastically

- Adding heavy stylization

the video model struggles to maintain structure.

Keep source frames clean and realistic.

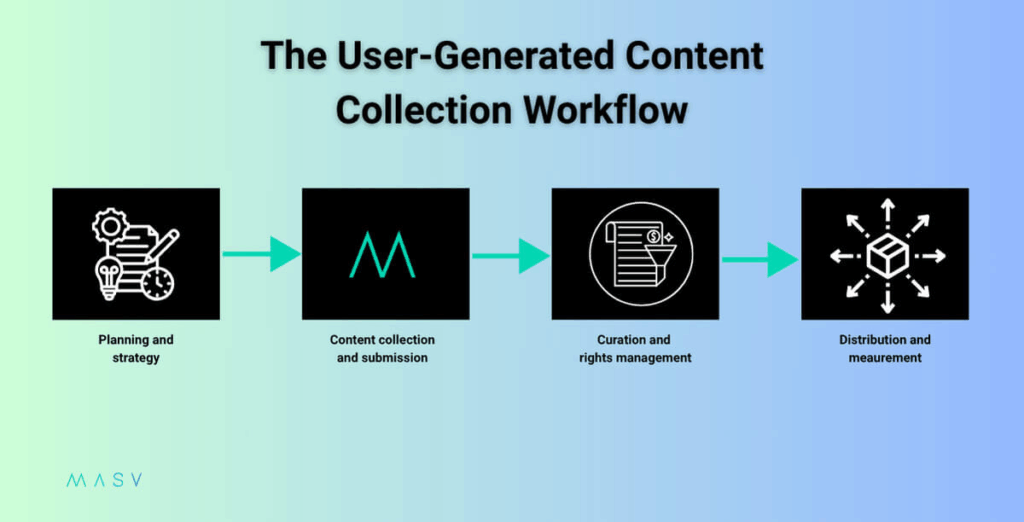

Mistake 5: No Defined Workflow Order

Random order causes:

- Re-render loops

- Lost assets

- Style inconsistency

Correct order is:

- Product cleanup

- Scene generation

- Motion generation

- Voiceover

- Edit

- Export

Never animate before locking visuals.
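The order above is easy to enforce with a tiny checklist helper. This is an illustrative sketch with hypothetical names, not part of any tool.

```python
ORDER = [
    "product cleanup",
    "scene generation",
    "motion generation",
    "voiceover",
    "edit",
    "export",
]


def next_stage(completed: list):
    """Return the next stage to work on, or None when the ad is finished.

    Raises ValueError if the completed stages do not follow the workflow order.
    """
    if completed != ORDER[:len(completed)]:
        raise ValueError("stages were completed out of order")
    if len(completed) == len(ORDER):
        return None
    return ORDER[len(completed)]


print(next_stage(["product cleanup", "scene generation"]))
```

Calling it with motion generation done before scene generation raises an error, which is the "never animate before locking visuals" rule in code form.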

The Big Picture

AI UGC ads aren’t about one magical tool.

They’re about:

- Controlled generation

- Latent stability

- Temporal coherence

- Structured editing

When you connect ComfyUI → Runway/Kling → Voice → Editor in a deliberate pipeline, you eliminate chaos.

You stop guessing.

You start producing consistently.

And that’s when AI ad production becomes scalable.

Not random.

Repeatable.

If you follow this exact workflow, you can go from a single product photo to a finished, high-converting UGC-style ad — without fragmentation, without morphing products, and without wasting hours fixing broken renders.

Frequently Asked Questions

Q: Why is seed parity important in AI UGC ad creation?

A: Reusing the same seed, checkpoint, and settings makes a generation repeatable, which helps your product keep its structure across multiple generations. Changing seeds between shots can subtly alter shape, logo placement, or texture, which breaks brand continuity in video ads.

Q: Which sampler is best for lifestyle product scenes in SDXL?

A: Euler a is often preferred for lifestyle scenes. It is an ancestral sampler that adds fresh noise at each step, which tends to give more organic, natural textures than DPM++ samplers, whose results can look overly polished.

Q: How do I prevent product warping in image-to-video tools like Runway or Kling?

A: Keep motion intensity low, use short clip durations (4–6 seconds), avoid extreme camera movements, and keep the product near the center of the frame. Excessive motion increases temporal drift and structural distortion.

Q: Can beginners use this workflow without coding knowledge?

A: Yes. While ComfyUI offers advanced control, beginners can use prebuilt workflows and templates. The key is understanding the order of operations and maintaining consistency between steps.