AI Video Course: Complete Text-to-Video & Image-to-Video Mastery with Runway, Sora, Kling, and ComfyUI

Master AI video creation with this free course, designed to take you from zero knowledge to advanced, production-ready AI video workflows built on today’s leading generative tools.

This course is built for aspiring AI video creators who want a systematic, end-to-end understanding of text-to-video and image-to-video generation. Instead of fragmented tutorials, you will learn how modern video diffusion models work, how to control motion and style, and how to deploy AI-generated videos into scalable content systems like faceless YouTube channels.

Text-to-Video AI Fundamentals: From Prompt Theory to Temporal Coherence

Text-to-video AI is built on diffusion models extended across the time dimension. In image generation, a single latent is denoised step by step into one picture. A video model has to denoise a whole sequence of frames together and keep them temporally coherent across dozens or hundreds of frames. Understanding this difference is critical to mastering results in tools like Runway Gen-3, Sora, Kling, and ComfyUI-based video pipelines.

How Text-to-Video Models Actually Work

At the core, text-to-video models encode your prompt into a semantic embedding using a text encoder (in open models, typically a CLIP- or T5-style encoder). This embedding conditions a latent diffusion process that runs over the whole clip. Each frame is not generated independently; instead, frames share latent information to preserve motion continuity, lighting consistency, and subject identity.
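
One rough way to make temporal coherence concrete is to measure how much consecutive frames differ. The following is a minimal sketch, assuming frames are small grayscale grids of values between 0 and 1; `frame_difference` and `flicker_score` are illustrative names and not part of any tool mentioned here.

```python
from statistics import mean

Frame = list[list[float]]  # grayscale frame: rows of pixel values in [0, 1]


def frame_difference(a: Frame, b: Frame) -> float:
    """Mean absolute per-pixel difference between two same-sized frames."""
    return mean(
        abs(pa - pb)
        for row_a, row_b in zip(a, b)
        for pa, pb in zip(row_a, row_b)
    )


def flicker_score(frames: list[Frame]) -> float:
    """Average difference between consecutive frames (0.0 for a static clip)."""
    if len(frames) < 2:
        return 0.0
    return mean(frame_difference(a, b) for a, b in zip(frames, frames[1:]))


# A 4x4 clip whose brightness drifts smoothly, and one that jumps every frame.
smooth = [[[0.50 + 0.01 * t] * 4 for _ in range(4)] for t in range(5)]
jumpy = [[[0.50 + (0.2 if t % 2 else 0.0)] * 4 for _ in range(4)] for t in range(5)]

print(f"smooth: {flicker_score(smooth):.3f}  jumpy: {flicker_score(jumpy):.3f}")
```

A high score is only a hint: fast, intentional motion also raises it, so a number like this is best used to compare two renders of the same shot.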

Key technical concepts you must master:

– Latent Consistency: Ensures that objects remain stable across frames. Poor latent consistency causes flickering, morphing faces, or drifting environments.

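– Seed Parity: Reusing the same seed with the same model, prompt, and settings makes a generation reproducible. In ComfyUI, fixing the seed lets you change one parameter at a time, such as motion strength, and attribute the difference to that change rather than to new random noise.

– Samplers and Schedulers (Euler, Euler a, DPM++): Euler, Euler a, and DPM++ are samplers, although they are often loosely called schedulers. The sampler determines how noise is removed at each step; in ComfyUI the scheduler is a separate setting that controls how noise levels are spaced across steps. Euler a (ancestral) injects fresh noise at every step and is often favored for dynamic motion and a cinematic feel, while DPM++ variants can produce cleaner but sometimes stiffer results.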
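
To illustrate seed parity and the difference between Euler and Euler a, here is a toy sketch that uses only the Python standard library. It assumes a made-up denoiser (`toy_denoiser`) in place of a real video model and a hand-picked noise schedule, so the numbers mean nothing; the point is that the same seed reproduces the same result, and that the ancestral variant draws extra noise during sampling.

```python
import math
import random


def toy_denoiser(x: list[float]) -> list[float]:
    """Hypothetical stand-in for a model's clean-latent prediction."""
    return [math.tanh(v) for v in x]


def sample(seed: int, sigmas: list[float], ancestral: bool, size: int = 4) -> list[float]:
    """Run a toy Euler or Euler-ancestral sampling loop from seeded noise."""
    rng = random.Random(seed)  # every random draw below comes from this seed
    x = [sigmas[0] * rng.gauss(0.0, 1.0) for _ in range(size)]
    for sigma, sigma_next in zip(sigmas, sigmas[1:]):
        denoised = toy_denoiser(x)
        d = [(xi - di) / sigma for xi, di in zip(x, denoised)]
        if ancestral:
            # Split the target noise level into a deterministic part and fresh noise.
            sigma_up = min(
                sigma_next,
                math.sqrt(sigma_next**2 * (sigma**2 - sigma_next**2) / sigma**2),
            )
            sigma_down = math.sqrt(sigma_next**2 - sigma_up**2)
        else:
            sigma_up, sigma_down = 0.0, sigma_next
        x = [xi + di * (sigma_down - sigma) for xi, di in zip(x, d)]
        if sigma_up > 0.0:
            x = [xi + sigma_up * rng.gauss(0.0, 1.0) for xi in x]
    return x


SIGMAS = [10.0, 5.0, 2.0, 1.0, 0.0]  # assumed noise levels, high to low

euler_run = sample(42, SIGMAS, ancestral=False)
ancestral_run = sample(42, SIGMAS, ancestral=True)
print("Euler:  ", [round(v, 3) for v in euler_run])
print("Euler a:", [round(v, 3) for v in ancestral_run])
```

Calling `sample` twice with the same seed and settings returns identical values for either sampler, while changing the seed changes the output. In real tools, reproducibility also depends on keeping the model, resolution, and step count unchanged.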

Prompt Engineering for Video (Not Images)

Video prompts require temporal logic. Instead of describing a static scene, you describe change over time.

Bad prompt:

> “A futuristic city at night.”

Optimized video prompt:

> “A slow cinematic dolly forward through a futuristic neon city at night, rain reflections on the street, volumetric fog, soft camera shake, shallow depth of field.”

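Notice how camera motion, pacing, and atmosphere are explicitly defined. In Runway and Sora, adding camera language (pan, tilt, dolly, tracking shot) gives the model a specific movement to follow, which usually produces more deliberate and realistic motion than a static description.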
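
The structure of the optimized prompt can be captured in a small template so every shot states its camera move, subject, and atmosphere. This is a minimal sketch; `ShotPrompt` and its fields are hypothetical names chosen for illustration, not an input format required by any of the tools.

```python
from dataclasses import dataclass, field


@dataclass
class ShotPrompt:
    camera: str   # camera move and pacing, e.g. "A slow cinematic dolly forward through"
    subject: str  # what the camera is moving through or toward
    details: list[str] = field(default_factory=list)  # atmosphere, lens, lighting

    def render(self) -> str:
        """Join the parts into one comma-separated prompt sentence."""
        parts = [f"{self.camera} {self.subject}", *self.details]
        return ", ".join(p.strip() for p in parts if p.strip()) + "."


shot = ShotPrompt(
    camera="A slow cinematic dolly forward through",
    subject="a futuristic neon city at night",
    details=[
        "rain reflections on the street",
        "volumetric fog",
        "soft camera shake",
        "shallow depth of field",
    ],
)
print(shot.render())
```

Keeping the camera move as its own field makes it easy to try the same scene with a pan or tracking shot without rewriting the rest of the prompt.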

Tool-Specific Text-to-Video Strategies

Runway Gen-3

– Best for fast ideation and cinematic motion

– Strong camera control and style presets

– Use short, dense prompts with clear motion verbs

Sora

– Exceptional world coherence and physics

– Handles long-form prompts better

– Ideal for narrative storytelling and complex scenes

Kling

– Strong character motion and anime-style results

– Benefits from explicit action verbs and frame pacing

ComfyUI

– Maximum control via nodes

– Enables custom schedulers, CFG tuning, and latent upscaling

– Ideal for advanced creators who want deterministic outputs

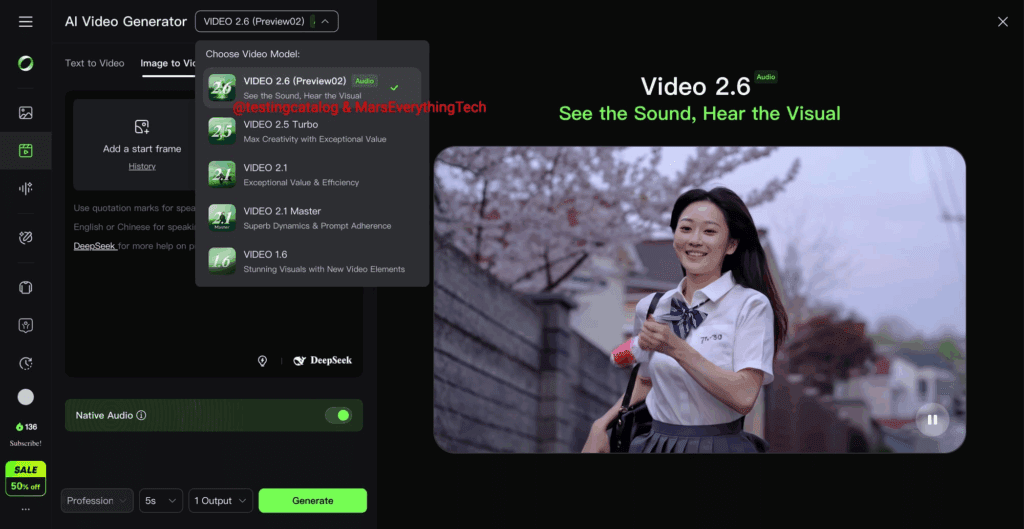

Image-to-Video Pipelines: Animation, Motion Control, and Style Consistency

Image-to-video is where most creators unlock professional-grade results. Instead of relying on the model to invent everything, you anchor generation to a keyframe image, drastically improving subject consistency.

Core Image-to-Video Workflow

1. Generate or import a high-quality image (Midjourney, Stable Diffusion, DALL·E, or Flux)

2. Feed the image into a video model (Runway, Kling, or ComfyUI)

3. Define motion, camera behavior, and duration

4. Refine with seed locking and motion strength

This approach is especially powerful for branding, character-driven content, and faceless channels.

Motion Strength and Camera Control

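One of the most misunderstood parameters is motion strength. The ranges below assume a normalized 0–1 scale; each tool exposes its own control and range, so treat them as starting points.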

– Low motion strength (0.2–0.4): Subtle parallax, ideal for cinematic B-roll

– Medium motion strength (0.5–0.7): Natural character movement

– High motion strength (0.8+): Abstract or stylized animation
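
The bands above can be turned into a small lookup for shot planning. This sketch assumes a normalized 0–1 scale and places the band boundaries at 0.45 and 0.75, which are assumptions that fill the gaps between the listed ranges; `motion_band` and `PRESETS` are hypothetical names.

```python
def motion_band(strength: float) -> str:
    """Classify a normalized motion strength into low, medium, or high."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0.0 and 1.0")
    if strength < 0.45:   # assumed boundary between the low and medium bands
        return "low"
    if strength < 0.75:   # assumed boundary between the medium and high bands
        return "medium"
    return "high"


# Example starting values for three kinds of shot (illustrative only).
PRESETS = {"b-roll": 0.3, "character": 0.6, "stylized": 0.85}

for name, value in PRESETS.items():
    print(f"{name}: {value} -> {motion_band(value)}")
```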

In ComfyUI, motion control can be refined using optical flow nodes, frame interpolation, and latent blending to prevent distortion.

Maintaining Style Consistency

Style drift is a common failure point. To solve this:

– Use reference images alongside prompts

– Lock seeds when iterating

– Keep CFG values stable between iterations (5–8 is a common starting range for video, though the best value depends on the model)

– Avoid over-describing conflicting styles

Runway’s image-to-video excels at preserving lighting and composition, while Kling performs well with character poses and anime aesthetics.

Advanced Image-to-Video Techniques

– Depth-based animation: Animate foreground and background at different speeds for cinematic depth

– Loop generation: Create seamless loops for shorts and background visuals

– Latent upscaling: Improve resolution before final render
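
As an illustration of loop generation, one simple approach is to crossfade the end of a clip into its beginning so the last frame leads back into the first. The sketch below is a plain pixel-space crossfade on toy data, assuming each frame is a flat list of pixel values; it is a swapped-in simplification, not the method any particular tool uses, and `make_loop` is a hypothetical helper.

```python
Frame = list[float]  # one frame as a flat list of pixel values


def blend(a: Frame, b: Frame, t: float) -> Frame:
    """Linear mix of two frames: t=0 returns a, t=1 returns b."""
    return [(1.0 - t) * pa + t * pb for pa, pb in zip(a, b)]


def make_loop(frames: list[Frame], overlap: int) -> list[Frame]:
    """Fold the last `overlap` frames into the first `overlap` frames."""
    n = len(frames)
    if overlap < 1 or 2 * overlap > n:
        raise ValueError("overlap must be at least 1 and at most half the clip")
    head = [
        blend(frames[n - overlap + i], frames[i], (i + 1) / (overlap + 1))
        for i in range(overlap)
    ]
    # Blended head, then the untouched middle; the tail now lives in the head.
    return head + frames[overlap : n - overlap]


# Toy clip: ten one-pixel frames whose value rises from 0 to 9.
clip = [[float(t)] for t in range(10)]
loop = make_loop(clip, overlap=3)
print([frame[0] for frame in loop])
```

The loop is shorter than the source clip by `overlap` frames. A crossfade like this works best on slow or textural motion; fast movement tends to show ghosting in the blended frames.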

These techniques allow you to produce videos that rival traditional motion graphics workflows.

Building a Faceless YouTube Channel with AI-Generated Videos

Once you master text-to-video and image-to-video, the next step is deployment. Faceless YouTube channels are one of the most scalable applications of AI video generation.

Choosing the Right Content Format

High-performing faceless formats include:

– Motivational storytelling

– AI history and future tech explainers

– Relaxing cinematic visuals with narration

– Short-form inspirational reels

AI video works best when visuals support narration rather than replace it.

End-to-End Faceless Workflow

1. Scriptwriting: Use structured prompts or LLMs for concise narration

2. Scene Planning: Break scripts into visual beats

3. Video Generation:

– Text-to-video for abstract concepts

– Image-to-video for consistent scenes

4. Voiceover: Use AI TTS with natural prosody

5. Editing: Assemble in Premiere Pro, CapCut, or DaVinci Resolve
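
The scene planning step can be sketched as splitting a script into sentence-level beats and estimating how long each clip needs to run. This is a rough sketch that assumes a narration pace of 150 words per minute and a simple punctuation-based sentence split; `Beat` and `plan_beats` are hypothetical names.

```python
import re
from dataclasses import dataclass

WORDS_PER_MINUTE = 150  # assumed narration pace; adjust to your voiceover


@dataclass
class Beat:
    index: int
    narration: str
    seconds: float  # estimated clip length needed to cover the narration


def plan_beats(script: str, wpm: int = WORDS_PER_MINUTE) -> list[Beat]:
    """Split a script into one beat per sentence with a duration estimate."""
    sentences = [
        s.strip()
        for s in re.split(r"(?<=[.!?])\s+", script.strip())
        if s.strip()
    ]
    return [
        Beat(i, s, round(len(s.split()) / wpm * 60, 1))
        for i, s in enumerate(sentences, start=1)
    ]


script = (
    "The city never sleeps. Rain falls on empty streets. "
    "A lone figure walks toward the light."
)
for beat in plan_beats(script):
    print(f"{beat.index}. {beat.seconds}s  {beat.narration}")
```

Each beat then becomes one generation request: text-to-video for abstract beats, image-to-video where a scene needs to stay consistent.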

Scaling with Automation

Advanced creators automate:

– Batch prompt generation

– Seed-based visual consistency

– Template-driven ComfyUI workflows

By reusing visual motifs and motion presets, you can produce daily uploads with minimal manual effort.
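
Batch prompt generation with a shared seed can be sketched as a small script that writes one job description per shot. The field names (`id`, `prompt`, `seed`, `cfg`) and the shared values below are hypothetical and do not correspond to any specific tool’s API; you would map them onto whatever your generator or ComfyUI template expects.

```python
import itertools
import json

# Values shared by every shot so the batch keeps one look (illustrative only).
SHARED = {"seed": 1234, "cfg": 6.0, "style": "neon noir, volumetric fog"}


def build_jobs(subjects: list[str], camera_moves: list[str], shared: dict = SHARED) -> list[dict]:
    """Create one job per (subject, camera move) pair with a locked seed and CFG."""
    jobs = []
    pairs = itertools.product(subjects, camera_moves)
    for n, (subject, move) in enumerate(pairs, start=1):
        jobs.append(
            {
                "id": f"shot_{n:03d}",
                "prompt": f"{move}, {subject}, {shared['style']}",
                "seed": shared["seed"],
                "cfg": shared["cfg"],
            }
        )
    return jobs


jobs = build_jobs(
    subjects=["a lighthouse in a storm", "an empty subway platform"],
    camera_moves=["slow dolly forward", "low tracking shot"],
)
print(json.dumps(jobs, indent=2))
```

Because the seed, CFG, and style string are defined once, changing the look of an entire batch is a one-line edit.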

Monetization and Growth

Faceless AI channels monetize through:

– Ad revenue

– Affiliate links

– Digital products

– Course funnels

Consistency and niche focus matter more than hyper-realism. Clean visuals, coherent motion, and strong storytelling are what keep viewers watching.

Final Thoughts: From Beginner to AI Video Architect

This course is not about chasing viral tricks; it’s about building durable skills in AI video generation. By understanding diffusion fundamentals, mastering text-to-video and image-to-video pipelines, and applying them to real-world platforms like YouTube, you position yourself at the forefront of generative media.

AI video is not replacing creativity; it is amplifying it. With the right systems, prompts, and tools, you can produce cinematic, scalable content without cameras, actors, or studios.

Frequently Asked Questions

Q: Do I need prior video editing experience to start this AI video course?

A: No. The course starts from fundamentals and gradually introduces advanced concepts. Basic familiarity with prompts and timelines helps, but everything is explained from first principles.

Q: Which tool should beginners focus on first: Runway, Sora, Kling, or ComfyUI?

A: Beginners should start with Runway for ease of use and fast feedback. As you gain confidence, ComfyUI offers deeper control, while Sora and Kling excel at high-coherence cinematic and character-driven videos.

Q: How important are seeds and schedulers in AI video generation?

A: They are critical. Seed parity allows reproducibility, while the sampler (for example Euler or Euler a, often loosely called a scheduler) directly affects motion quality and temporal smoothness.

Q: Can AI-generated videos be monetized on YouTube?

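A: Yes. Many faceless channels monetize AI videos through ads, affiliates, and digital products, as long as the content is original, provides value, and complies with YouTube’s monetization policies, including its disclosure requirement for realistic altered or synthetic content.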