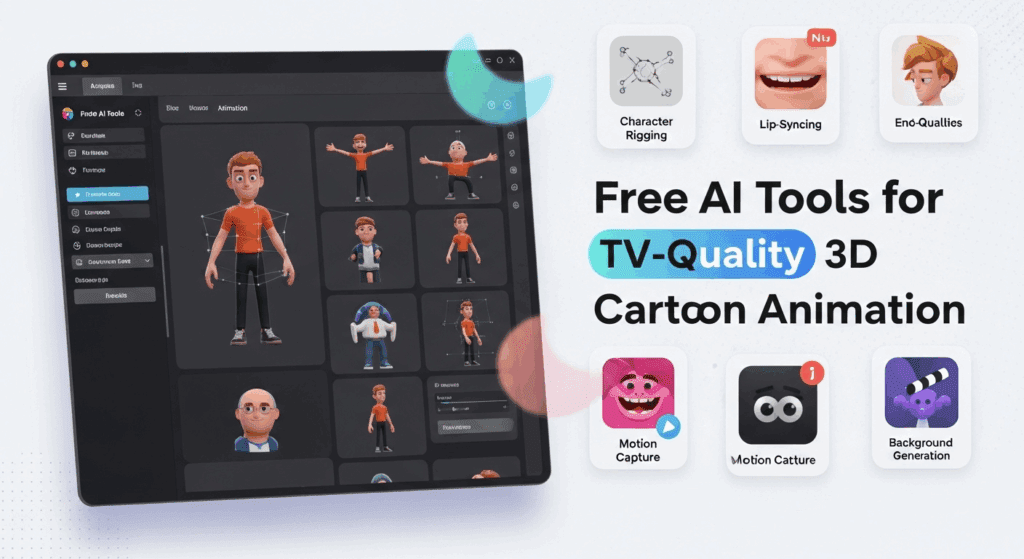

18+ Free AI Tools for TV-Quality 3D Cartoon Animation: A Zero-3D Software Workflow

Create high-quality cartoon animation without any 3D software or animation skills. This free AI workflow delivers TV-level 3D cartoon animation using simple tools that replace Blender, Maya, and traditional pipelines entirely.

The system relies on AI video generation, latent consistency control, and automated lip-sync to remove the hardest parts of animation:

- No paid tools

- No character rigging

- No keyframes

- No prior animation skills

This guide walks you step-by-step from script to final animated episode using only free tools and proven techniques.

18+ Free AI Tools for TV-Quality 3D Cartoon Animation

This workflow uses over 18 free AI tools to replace every part of the traditional animation pipeline: no Blender, no Maya, and no keyframes. Each tool solves a specific problem, from writing scripts to syncing lips, at zero cost and with no animation background needed.

Below is a breakdown of all the free tools involved and exactly how they fit into the process:

1. ChatGPT (Free tier)

Use it to generate structured scripts, dialogue, and scene breakdowns. You’ll need scene-aware formatting so AI tools understand transitions.

2. Google Docs

Organize your scripts and label each scene clearly. This keeps the entire project aligned from start to finish.

3. Stable Diffusion (via Automatic1111 or ComfyUI)

Turn text prompts into stylized 3D cartoon characters. You don’t need to model anything—just prompt and adjust.

4. Cartoon Checkpoint (Open-source)

A custom model trained to generate characters with 3D-style shading and proportions. Ideal for Pixar-style outputs.

5. LoRA Training (via Kohya GUI)

Lock a character’s identity by training LoRAs on your image set. This keeps their face and style consistent across episodes.

6. Runway Gen-3

Generate full animated scenes from prompts. Add motion directly without touching any 3D modeling tool.

7. Kling AI

Supports video generation with reference images. Works well for keeping motion cinematic and character identity intact.

8. Pika Labs

Great for short animated scenes. It’s fast and supports both text-to-video and image-to-video generation for stylized animation.

9. CapCut (Free version)

Assemble shots, cut scenes, and add music. Also good for final exports and transitions.

10. Runway Timeline Editor

If you’re using Runway for generation, its Timeline Editor makes it easy to arrange multiple AI clips in sequence.

11. ElevenLabs (Free tier)

Generate clean voiceovers for each line of dialogue. Use mono WAV format for better lip-sync accuracy.

12. Wav2Lip

Turn a static face into a talking character. This AI lip-sync tool matches mouth movement to your audio without keyframes.

13. SadTalker

Another free tool that adds lip sync and facial motion to a single image, ideal for expressive shots or reactions.

14. Character LoRA Tools

Use these for deeper identity locking—especially when creating multiple scenes with the same character.

15. Seed Locking & CFG Settings

These are features within Stable Diffusion or ComfyUI that help lock facial geometry and visual style across outputs.

16. Reference Injection (in Runway, Pika, Kling)

Most AI video tools now support image references. This helps retain character look in every frame generated.

17. DaVinci Resolve (Free version)

For post-production polish. Add transitions, adjust timing, insert music, and export in 1080p or higher.

18. Custom Prompt Templates

Not a tool but a key technique. Use consistent prompt language to describe your scenes, lighting, and motion type across tools.

These tools cover every step including scriptwriting, character design, scene generation, editing, voice, lip-sync, and export. You don’t need animation experience or a big budget. You only need this stack and a good story.

1. End-to-End Free AI Cartoon Pipeline (Script to Final Render)

Step 1: Script & Dialogue Generation (Text Foundation)

Tools:

– ChatGPT (Free tier)

– Google Docs

Start with a tightly structured script. For AI animation, scripts must be scene-aware, not just dialogue-based.

Best Practice Script Format:

[Scene 1 – Living Room]

Character A: “We have to leave now!”

Character B: “But it’s raining outside.”

[Scene 2 – City Street]

Why this matters: AI video models like Runway Gen-3, Kling, and Sora-style diffusion engines interpret scene transitions as latent resets. Clear segmentation improves temporal stability and prevents visual drift.
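Because the script format above is so regular, you can split it into scenes automatically before handing each one to a video tool. The following is a minimal sketch, not part of any tool listed here; the `Scene` class and `parse_script` function are hypothetical names chosen for illustration.

```python
import re
from dataclasses import dataclass, field

# Matches headers such as "[Scene 1 – Living Room]" (en dash or plain hyphen).
SCENE_HEADER = re.compile(r"^\[Scene\s+(\d+)\s*[–-]\s*(.+?)\]$")
# Matches dialogue such as: Character A: "We have to leave now!"
DIALOGUE = re.compile(r"^([^:]+):\s*(.+)$")


@dataclass
class Scene:
    number: int
    location: str
    lines: list = field(default_factory=list)  # (speaker, text) tuples


def parse_script(text: str) -> list:
    """Group dialogue lines under the scene header that precedes them."""
    scenes = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        header = SCENE_HEADER.match(line)
        if header:
            scenes.append(Scene(int(header.group(1)), header.group(2).strip()))
            continue
        spoken = DIALOGUE.match(line)
        if spoken and scenes:
            speaker = spoken.group(1).strip()
            # Drop straight or curly quotation marks around the line.
            words = spoken.group(2).strip().strip('"“”')
            scenes[-1].lines.append((speaker, words))
    return scenes
```

Each `Scene` then maps to one generation batch, which keeps scene boundaries explicit instead of leaving the video model to guess them.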

Step 2: Character Design with Latent Consistency

Tools:

– Stable Diffusion (Free via Automatic1111 or ComfyUI)

– Custom Cartoon Checkpoint (Open-source)

– Optional: Character LoRA

Instead of modeling characters in 3D, you generate pseudo-3D characters using diffusion models trained on stylized 3D aesthetics.

Key Technical Concepts:

– Seed Parity: Locking the same seed across generations to preserve facial geometry

– CFG Stability: Keeping the CFG scale between 6 and 8 to avoid over-stylization

– Euler a Sampler (Euler ancestral): Best balance for cartoon coherence

Recommended Prompt Structure:

Stylized 3D cartoon character, Pixar-style proportions,

soft global illumination, clean topology appearance,

consistent facial features, neutral pose

Generate:

– Front view

– 3/4 view

– Side view

These become your character reference anchors for all future scenes.
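If you run Automatic1111 with its API enabled, the three reference views can share one locked seed. The sketch below only builds the request bodies and does not send them. The field names follow the `/sdapi/v1/txt2img` request format as I understand it, so check them against your installed version; the `steps`, `width`, and `height` values are assumptions, not recommendations from this guide.

```python
BASE_PROMPT = (
    "Stylized 3D cartoon character, Pixar-style proportions, "
    "soft global illumination, clean topology appearance, "
    "consistent facial features, neutral pose"
)
VIEWS = ("front view", "3/4 view", "side view")


def build_reference_payloads(seed: int, cfg_scale: float = 7.0) -> list:
    """Return one txt2img request body per view, all sharing the same seed."""
    if not 6.0 <= cfg_scale <= 8.0:
        raise ValueError("cfg_scale should stay between 6 and 8")
    payloads = []
    for view in VIEWS:
        payloads.append({
            "prompt": f"{BASE_PROMPT}, {view}",
            "seed": seed,                # identical for every view
            "cfg_scale": cfg_scale,
            "sampler_name": "Euler a",
            "steps": 30,                 # assumed value
            "width": 768,                # assumed value
            "height": 768,               # assumed value
        })
    return payloads
```

Only the view description changes between the three payloads, so any difference in the output comes from the angle rather than from a new random seed.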

Step 3: Scene Generation Without 3D Software

Tools:

– Runway Gen-3 (Free credits)

– Kling AI (Free tier)

– Pika Labs

Instead of building environments, you generate cinematic cartoon scenes directly from text prompts.

Scene Prompt Example:

Wide shot of a cartoon living room, stylized 3D animation,

soft lighting, vibrant colors, shallow depth of field

Critical Setting:

– Enable image-to-video conditioning using your character reference

– Keep motion strength under 40% to avoid identity drift

This simulates a 3D animated environment while remaining fully AI-driven.
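Runway, Kling, and Pika are configured through their own interfaces, so there is no single request format to show. As a planning aid, the sketch below keeps each shot's settings in a plain dictionary and rejects a motion strength of 40% or more. The key names (`prompt`, `reference_image`, `motion_strength`) and the function name are hypothetical and do not correspond to any tool's real API.

```python
STYLE_SUFFIX = (
    "stylized 3D animation, soft lighting, vibrant colors, "
    "shallow depth of field"
)
MAX_MOTION_STRENGTH = 0.40  # the "under 40%" rule from this step


def build_shot_request(framing: str, subject: str, reference_image: str,
                       motion_strength: float = 0.30) -> dict:
    """Compose a scene prompt and validate the motion strength."""
    if not 0.0 <= motion_strength < MAX_MOTION_STRENGTH:
        raise ValueError("motion_strength must be at least 0 and below 0.40")
    return {
        "prompt": f"{framing} of {subject}, {STYLE_SUFFIX}",
        "reference_image": reference_image,
        "motion_strength": motion_strength,
    }
```

Reusing one style suffix for every shot is a simple way to apply the consistent prompt language this workflow depends on.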

Step 4: Shot Assembly & Temporal Control

Tools:

– Runway Timeline Editor

– CapCut (Free)

AI video works best in short controlled shots (3–6 seconds).

Why:

– Reduces temporal hallucination

– Maintains facial coherence

– Improves lip-sync accuracy later

Export each shot individually before moving to voice integration.
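To plan shots before generating anything, you can divide a scene's running time into equal pieces that stay within the 3–6 second window. This is one simple way to do it, assuming equal-length shots are acceptable; `plan_shots` is a hypothetical helper name.

```python
import math

MAX_SHOT_SECONDS = 6.0


def plan_shots(scene_seconds: float) -> list:
    """Split a scene into equal shots no longer than 6 seconds each.

    Scenes of 6 seconds or less stay as a single shot. Longer scenes are
    split into the fewest equal shots that fit, and each resulting shot is
    then always longer than 3 seconds.
    """
    if scene_seconds <= 0:
        raise ValueError("scene_seconds must be positive")
    if scene_seconds <= MAX_SHOT_SECONDS:
        return [scene_seconds]
    count = math.ceil(scene_seconds / MAX_SHOT_SECONDS)
    return [scene_seconds / count] * count
```

For example, a 14-second scene becomes three shots of roughly 4.67 seconds each.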

2. Automatic Lip Sync Without Manual Animation

Step 5: Voice Acting with AI or Real Voice

Tools:

– ElevenLabs (Free tier)

– Your own microphone

Export clean WAV files per dialogue line.

Important:

– 16kHz or 44.1kHz sample rate

– Mono audio

– No background noise

Clean audio dramatically improves lip-sync accuracy.
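You can check the sample rate and channel count of each exported file with Python's standard `wave` module before sending it to lip sync. This sketch checks only those two properties; it cannot detect background noise. The function name is a hypothetical choice.

```python
import wave

ACCEPTED_RATES = (16000, 44100)  # the two sample rates listed above


def check_dialogue_wav(path: str) -> list:
    """Return a list of problems found in the WAV file (empty if none)."""
    problems = []
    with wave.open(path, "rb") as wav:
        channels = wav.getnchannels()
        rate = wav.getframerate()
        if channels != 1:
            problems.append(f"expected mono, found {channels} channels")
        if rate not in ACCEPTED_RATES:
            problems.append(f"unexpected sample rate: {rate} Hz")
    return problems
```

An empty list means the file matches the mono and sample-rate requirements.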

Step 6: AI Lip Sync (No Keyframes)

Tools:

– Wav2Lip (Open-source)

– SadTalker (Free)

This is where the magic happens.

Wav2Lip Workflow:

1. Input video clip

2. Input voice WAV

3. Model maps the speech audio → mouth shapes, frame by frame

The system uses audio-driven viseme alignment, eliminating manual facial animation.
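The workflow above corresponds to a single call to the `inference.py` script in the open-source Wav2Lip repository. The sketch below only assembles the command. The flags are the ones used in the repository's README as far as I know, while the checkpoint path and file names are assumptions you should adjust to your setup.

```python
def build_wav2lip_command(face_video: str, audio_wav: str, outfile: str,
                          checkpoint: str = "checkpoints/wav2lip_gan.pth") -> list:
    """Build the argument list for Wav2Lip's inference script."""
    return [
        "python", "inference.py",
        "--checkpoint_path", checkpoint,  # assumed location of the weights
        "--face", face_video,             # step 1: input video clip
        "--audio", audio_wav,             # step 2: input voice WAV
        "--outfile", outfile,
    ]


# To run it from inside a cloned Wav2Lip folder (not executed here):
#   import subprocess
#   subprocess.run(build_wav2lip_command("shot01.mp4", "line01.wav",
#                                        "shot01_synced.mp4"), check=True)
```

Building the command as a list makes it easy to loop over every shot and dialogue line in an episode.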

Best Results Tips:

– Use close-up or medium shots

– Neutral facial expressions

– Avoid extreme head turns

This produces broadcast-level lip sync without touching a 3D rig.

3. Maintaining Consistent 3D Characters Across Episodes

Consistency is the hardest problem in AI animation and the most solvable with the right controls.

Technique 1: Seed Locking

Every character shot must reuse the same seed, resolution, and scheduler.

This maintains latent facial geometry.
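If you log the settings used for each shot, a short check can flag any shot that drifted from the locked values. This is an illustrative sketch; `GenSettings` and `find_drifting_shots` are hypothetical names, and the first shot is assumed to be the baseline.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GenSettings:
    seed: int
    width: int
    height: int
    scheduler: str


def find_drifting_shots(shots: dict) -> list:
    """Return names of shots whose settings differ from the first shot's."""
    names = list(shots)
    if not names:
        return []
    baseline = shots[names[0]]
    return [name for name in names[1:] if shots[name] != baseline]
```

Any name returned here marks a shot to regenerate with the baseline seed, resolution, and scheduler.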

Technique 2: Character LoRAs (Optional but Powerful)

Tools:

– Kohya GUI (Free)

Train a LoRA using:

– 15–25 character images

– Multiple angles

– Neutral lighting
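Before training, it can help to confirm that the image folder falls within the 15–25 range. This sketch only counts files; it cannot judge angles or lighting. The function names and the list of accepted extensions are assumptions.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}  # assumed formats


def count_training_images(folder: str) -> int:
    """Count image files directly inside the folder."""
    return sum(
        1 for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def dataset_size_ok(folder: str, minimum: int = 15, maximum: int = 25) -> bool:
    """True when the image count is within the recommended range."""
    return minimum <= count_training_images(folder) <= maximum
```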

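Once trained, your character becomes a portable identity layer that you can load into any Stable Diffusion front end using the same base model:

– Automatic1111

– ComfyUI

Hosted video tools such as Runway do not load LoRA files directly, so give them reference images generated with the LoRA instead.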

Technique 3: Reference Injection

Most AI video tools now support reference frames.

Always:

– Upload your original character image

– Set reference strength between 60% and 80%

This anchors the diffusion process to your established design.

Final Render & Export

Tools:

– CapCut

– DaVinci Resolve (Free)

Assemble the shots, then add:

– Music

– Sound effects

– Scene transitions

Export settings:

– 1080p

– 24fps

– High bitrate
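CapCut and DaVinci Resolve expose these settings in their export dialogs. If you prefer the command line, the free tool ffmpeg (not part of the tool list above) can apply the same settings. The sketch below only builds the command, and the 12 Mbit/s bitrate is an assumed stand-in for "high bitrate".

```python
def build_export_command(source: str, output: str, bitrate: str = "12M") -> list:
    """Build an ffmpeg command for a 1080p, 24fps H.264 export."""
    return [
        "ffmpeg", "-y",            # overwrite the output file if it exists
        "-i", source,
        "-vf", "scale=1920:1080",  # 1080p
        "-r", "24",                # 24fps
        "-c:v", "libx264",
        "-b:v", bitrate,           # assumed "high bitrate" value
        "-c:a", "aac",
        output,
    ]
```

Pass the returned list to `subprocess.run(..., check=True)` on a machine with ffmpeg installed to perform the export.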

You now have a TV-quality 3D cartoon animation created entirely with free AI tools.

Why This Workflow Works

Traditional 3D animation requires:

– Years of training

– Expensive software

– Large teams

This AI-first pipeline replaces:

– Rigs → Diffusion Latents

– Keyframes → Temporal Conditioning

– Facial animation → Audio-driven lip sync

The result is democratized animation production.

If you can write a script, you can now create a cartoon series.

Frequently Asked Questions

Q: Do I need any 3D animation experience to use this workflow?

A: No. The entire pipeline replaces traditional 3D modeling, rigging, and animation with AI-driven video generation and diffusion-based consistency controls.

Q: Can this be used for YouTube or streaming platforms?

A: Yes. The final export is 1080p at 24fps, which is suitable for YouTube, social platforms, and episodic content.

Q: How do I prevent my character from changing faces between scenes?

A: Use seed parity, reference image injection, and optionally a character LoRA to lock facial geometry across generations.

Q: Is Wav2Lip really accurate enough for professional use?

A: Yes. When paired with clean audio and stable facial shots, Wav2Lip produces industry-grade lip synchronization without manual animation.