Character-Driven 3D Animation for Music Videos: How Viral Characters, AI Timing, and Lip-Sync Engineering Drive 800K+ Views

This 800K+ view music video proves character-driven 3D animation sells.

Scroll through any viral animated music video, and you’ll notice a consistent pattern: audiences don’t just remember the song, they remember the character. In an era where AI-generated visuals are abundant, character-driven animation is the differentiator that converts passive viewers into repeat fans. For 3D animators, content creators, and music video producers, the core challenge is no longer rendering quality. It is creating engaging 3D characters whose performance is tightly synchronized with the music and emotionally legible at internet speed.

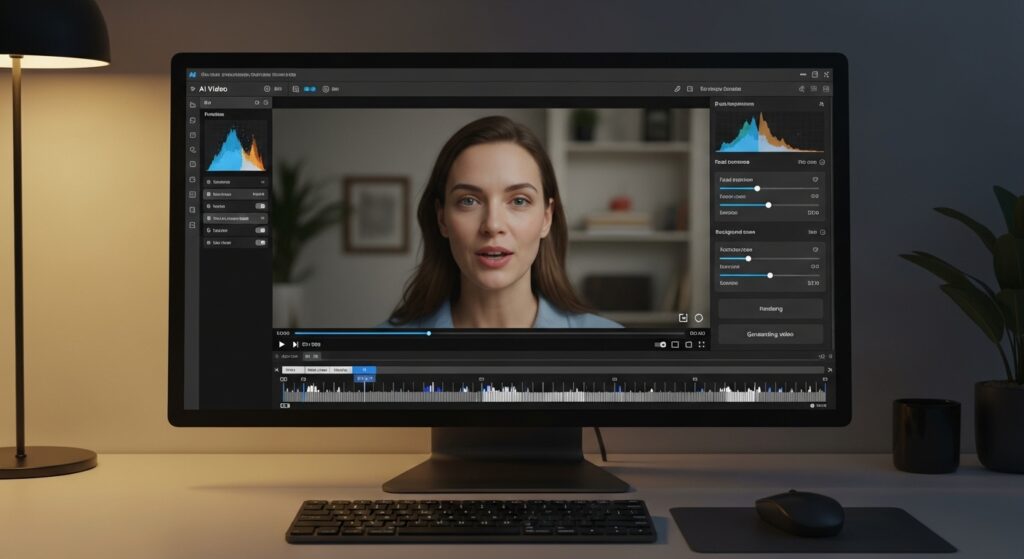

This deep dive breaks down how modern AI video pipelines—using tools like Runway, ComfyUI, and emerging video models such as Sora-style latent video generators—are used to build viral character-based music videos. We’ll focus on personality expression, lip-sync and timing, and character design systems that scale fan engagement.

Why Character-Driven 3D Animation Wins in Music Videos

Music videos are fundamentally performance-driven. In live-action, that performance comes from the artist. In animated music videos, the character is the artist. Viral success happens when viewers subconsciously attribute intention, emotion, and rhythm awareness to a digital character.

From a technical perspective, this means your animation system must solve three problems simultaneously:

1. Performance Consistency – The character must feel like the same “entity” across shots, scenes, and even multiple videos.

2. Musical Intelligence – Movement, facial animation, and camera motion must align with beat structure, tempo, and vocal phrasing.

3. Visual Memorability – The character’s design must be readable in a single frame or thumbnail.

AI video tools accelerate production, but without intentional control (latent consistency, seed parity, and scheduler tuning), you’ll get visually impressive yet emotionally hollow results.

Expressing Character Personality with Modern 3D and AI Animation Pipelines

Character personality is expressed through motion choices, not just modeling detail. In traditional pipelines, this meant hand-keyed animation. Today, AI-assisted workflows let creators focus on direction rather than raw animation labor.

Latent Consistency as Character Identity

When using diffusion-based video generation (Runway Gen-3, ComfyUI AnimateDiff, or Kling-style models), character personality begins in the latent space. You must maintain latent consistency across shots so the character’s proportions, facial structure, and micro-expressions don’t drift.

In ComfyUI, this often involves:

– Fixed character LoRA or IP-Adapter embeddings

– Seed parity (reusing the same seed) across shots where continuity matters

– Controlled noise injection instead of full randomization

By locking key latent variables, you prevent the character from becoming a different “actor” in each clip.
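As a rough illustration of seed parity and controlled noise injection, the sketch below blends a shared base noise vector with a small amount of shot-specific noise. It uses only the Python standard library and does not call ComfyUI or Runway; `CharacterLock`, `shot_noise`, the `variation` parameter, and the LoRA file name are hypothetical names introduced for this example.

```python
from __future__ import annotations

import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class CharacterLock:
    """Hypothetical settings shared by every shot of one character."""
    lora_name: str   # placeholder file name, not a real asset
    base_seed: int   # seed reused wherever continuity matters


def shot_noise(lock: CharacterLock, shot_index: int,
               variation: float, n: int = 8) -> list[float]:
    """Blend shared base noise with shot-specific noise.

    variation=0.0 reproduces the base noise exactly (strict seed parity);
    variation=1.0 yields fully independent noise for this shot.
    """
    if not 0.0 <= variation <= 1.0:
        raise ValueError("variation must be in [0, 1]")
    base_rng = random.Random(lock.base_seed)
    # A string seed keeps each shot's stream deterministic and distinct.
    shot_rng = random.Random(f"{lock.base_seed}:{shot_index}")
    # Weights satisfy keep^2 + variation^2 = 1, so variance stays at 1.
    keep = math.sqrt(1.0 - variation ** 2)
    return [keep * base_rng.gauss(0, 1) + variation * shot_rng.gauss(0, 1)
            for _ in range(n)]


lock = CharacterLock(lora_name="hero_character.safetensors", base_seed=1234)
close_up = shot_noise(lock, shot_index=0, variation=0.0)  # identical to base
wide_shot = shot_noise(lock, shot_index=1, variation=0.3)  # mild variation
```

In a real pipeline the same idea would apply to the latent noise tensor rather than a short list of floats. The useful property is that a single `variation` value controls how far a shot may drift from the locked character.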

Motion Language and Personality

Personality is communicated through timing and exaggeration:

– A confident character uses wider poses and slower ease-ins

– A comedic character uses sharp anticipations and overshoot

– A melancholic character minimizes vertical motion and favors head tilts

AI motion systems respond well when you explicitly encode these traits in prompt logic and motion references:

> “confident stage performer, wide stance, rhythmic shoulder movement, expressive hand gestures synced to beat”

When paired with reference-driven motion control (Runway motion brush or ComfyUI ControlNets), this produces repeatable personality traits instead of generic dancing.
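One way to keep this prompt logic repeatable is to store the motion traits in a table and assemble prompts from it. The following is a minimal sketch, assuming a plain comma-separated text prompt. The table `PERSONALITY_MOTION` and the function `build_motion_prompt` are hypothetical names, and the trait phrases are paraphrased from the list above.

```python
from __future__ import annotations

# Motion traits per personality, paraphrased from the list above.
PERSONALITY_MOTION = {
    "confident": ["wide poses", "slow ease-ins"],
    "comedic": ["sharp anticipation", "overshoot on settle"],
    "melancholic": ["minimal vertical motion", "frequent head tilts"],
}


def build_motion_prompt(role: str, personality: str,
                        extras: tuple[str, ...] = ()) -> str:
    """Assemble a comma-separated prompt from a fixed trait table."""
    if personality not in PERSONALITY_MOTION:
        raise ValueError(f"unknown personality: {personality!r}")
    parts = [f"{personality} {role}", *PERSONALITY_MOTION[personality], *extras]
    return ", ".join(parts)


prompt = build_motion_prompt(
    "stage performer", "confident",
    extras=("expressive hand gestures synced to beat",),
)
print(prompt)
```

Because every shot draws from the same table, the character's motion vocabulary stays fixed even when the shot-specific extras change.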

Lip-Sync, Timing, and Music Alignment Using AI Schedulers and Latent Control

Lip-sync is where most animated music videos fail. Viewers will forgive stylized visuals—but not broken timing.

Audio-Driven Animation Fundamentals

Modern AI pipelines treat audio as a first-class control signal. Successful creators extract structured data from the music track:

– BPM and beat grid

– Vocal phoneme timing

– Section markers (verse, chorus, drop)

This data informs both facial animation and body motion.
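The beat grid and section markers can be turned into frame numbers with simple arithmetic. The sketch below assumes a constant tempo and a BPM value that has already been measured (for example by an audio analysis tool). The function names `beat_grid`, `to_frame`, and `section_at` are illustrative, not part of any library.

```python
from __future__ import annotations

import bisect


def beat_grid(bpm: float, duration_s: float,
              first_beat_s: float = 0.0) -> list[float]:
    """Return beat times in seconds, assuming a constant tempo."""
    if bpm <= 0:
        raise ValueError("bpm must be positive")
    interval = 60.0 / bpm  # seconds per beat
    beats = []
    i = 0
    while first_beat_s + i * interval < duration_s:
        beats.append(first_beat_s + i * interval)
        i += 1
    return beats


def to_frame(time_s: float, fps: int) -> int:
    """Nearest video frame for a timestamp."""
    return round(time_s * fps)


def section_at(time_s: float, sections: list[tuple[float, str]]) -> str | None:
    """Label of the section active at time_s.

    sections is a list of (start_time_s, label) sorted by start time.
    Returns None if time_s falls before the first section.
    """
    starts = [start for start, _ in sections]
    i = bisect.bisect_right(starts, time_s) - 1
    return sections[i][1] if i >= 0 else None


beats = beat_grid(bpm=120, duration_s=2.0)      # [0.0, 0.5, 1.0, 1.5]
frames = [to_frame(b, fps=24) for b in beats]   # [0, 12, 24, 36]
```

Tracks with tempo changes would need per-beat timestamps from a beat tracker instead of a fixed interval.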

Euler A Schedulers and Temporal Stability

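In diffusion-based video generation, the sampler and its noise schedule determine how each frame is denoised, which in turn affects how stable motion looks across frames. Strictly speaking, Euler A (Euler ancestral) is a sampler rather than a scheduler; ComfyUI lists it as `euler_ancestral` and exposes the scheduler as a separate setting. For music videos, Euler A is often preferred because it tends to preserve sharper transitions, which is critical for fast lyrical delivery.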

Key techniques include:

– Lower denoise values for facial regions to stabilize mouth shapes

– Higher denoise for body motion during chorus sections

– Segment-based rendering (verse vs chorus) with scheduler adjustments

This prevents the common issue where lips appear to “swim” or drift off-beat.
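Segment-based rendering with per-region denoise can be planned as data before any generation runs. The sketch below is one possible layout, not a ComfyUI or Runway API. The denoise numbers are illustrative assumptions that would need tuning per model, and `RegionDenoise`, `SECTION_DENOISE`, and `render_plan` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class RegionDenoise:
    face: float  # lower values keep mouth shapes stable
    body: float  # higher values allow more motion


# Illustrative values only; tune per model and per character.
SECTION_DENOISE = {
    "verse": RegionDenoise(face=0.30, body=0.50),
    "chorus": RegionDenoise(face=0.30, body=0.70),
}


def render_plan(segments: list[tuple[str, float, float]], fps: int) -> list[dict]:
    """Turn (label, start_s, end_s) segments into per-segment render settings."""
    plan = []
    for label, start_s, end_s in segments:
        if end_s <= start_s:
            raise ValueError(f"empty segment: {label}")
        settings = SECTION_DENOISE[label]
        plan.append({
            "section": label,
            "start_frame": round(start_s * fps),
            "end_frame": round(end_s * fps),
            "face_denoise": settings.face,
            "body_denoise": settings.body,
        })
    return plan


plan = render_plan([("verse", 0.0, 10.0), ("chorus", 10.0, 20.0)], fps=24)
```

Each entry in `plan` can then drive one render pass, with the face value applied through a face mask and the body value applied to the rest of the frame.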

Phoneme-Accurate Lip Sync

For high-performing videos, creators often combine AI video generation with dedicated lip-sync systems:

– Generate a clean facial performance pass

– Use phoneme-aligned audio-to-viseme models

– Composite or guide the diffusion output using face ControlNets

Runway’s facial motion tools and ComfyUI’s advanced control pipelines allow creators to lock mouth shapes to audio timing while still benefiting from AI-generated expressiveness.
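To make the phoneme-to-viseme step concrete, here is a minimal sketch that converts timed phonemes into mouth-shape keyframes. It assumes phoneme timings are already available from a forced aligner or similar tool. The phoneme symbols follow ARPABET conventions, while the viseme names and the `PHONEME_TO_VISEME` table are hypothetical and far smaller than a production mapping.

```python
from __future__ import annotations

# Hypothetical, heavily reduced phoneme-to-viseme table.
PHONEME_TO_VISEME = {
    "AA": "open", "IY": "wide", "UW": "round",
    "M": "closed", "B": "closed", "P": "closed",
    "F": "teeth_on_lip", "V": "teeth_on_lip",
}


def viseme_keyframes(phonemes: list[tuple[str, float, float]], fps: int,
                     default: str = "rest") -> list[tuple[int, str]]:
    """Convert (symbol, start_s, end_s) phonemes to (frame, viseme) keys.

    Consecutive phonemes that share a viseme are merged into one key,
    and unknown symbols fall back to the default mouth shape.
    """
    keys: list[tuple[int, str]] = []
    for symbol, start_s, _end_s in phonemes:
        viseme = PHONEME_TO_VISEME.get(symbol, default)
        if keys and keys[-1][1] == viseme:
            continue  # mouth shape unchanged, no new key needed
        keys.append((round(start_s * fps), viseme))
    return keys


timed = [("M", 0.0, 0.1), ("AA", 0.1, 0.3), ("P", 0.5, 0.55), ("B", 0.55, 0.6)]
print(viseme_keyframes(timed, fps=24))
# [(0, 'closed'), (2, 'open'), (12, 'closed')]
```

The resulting keyframes could feed a face control pass or a compositing step, so the diffusion output is guided toward the right mouth shape on the right frame.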

Designing Recognizable Characters That Build Fan Engagement and Virality

Viral animated music videos don’t just generate views—they generate fandom. This happens when characters are instantly recognizable.

Readability Over Realism

High-engagement characters prioritize:

– Clear silhouettes

– Distinct color palettes

– Exaggerated facial features

From an AI standpoint, this improves generation stability. Models are more consistent when characters have strong visual anchors.

Thumbnail Engineering

Most music video discovery happens in thumbnails. Successful creators design characters with:

– Large, expressive eyes

– High-contrast facial lighting

– Poses that communicate emotion without context

These choices are intentional and baked into prompt presets and character LoRAs.

Fan Memory and Iteration

Once a character resonates, repetition matters. Using the same character across multiple songs builds recognition faster than constantly inventing new designs.

This is where seed parity and consistent latent embeddings become brand assets. Your character becomes a reusable digital performer.

End-to-End Workflow: From Music Track to Viral 3D Animated Music Video

Here’s a simplified high-performing workflow used by many viral creators:

1. Music Analysis

– Extract BPM, beat grid, and vocal timing

2. Character Lock-In

– Finalize character design

– Create LoRA or reference embedding

– Establish seed parity rules

3. Performance Planning

– Define personality traits

– Map motion intensity to song sections

4. AI Video Generation

– Use Runway or ComfyUI for base animation

– Apply Euler A schedulers for musical precision

5. Lip-Sync Refinement

– Stabilize facial regions

– Align visemes to vocals

6. Editing and Release

– Cut on beats

– Optimize thumbnail with character focus

This pipeline prioritizes performance clarity over raw visual complexity—and that’s what drives repeat views.
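The "cut on beats" step can be approximated by snapping rough edit points to the nearest beat. The sketch below assumes a sorted list of beat times in seconds from the music analysis step; `snap_to_beat` and `snap_cuts` are illustrative names, not functions from an editing tool.

```python
from __future__ import annotations

import bisect


def snap_to_beat(time_s: float, beats: list[float]) -> float:
    """Return the beat closest to time_s; beats must be sorted ascending."""
    if not beats:
        raise ValueError("beats must not be empty")
    i = bisect.bisect_left(beats, time_s)
    # Only the neighbors on either side of the insertion point can be nearest.
    candidates = beats[max(i - 1, 0):i + 1]
    return min(candidates, key=lambda b: abs(b - time_s))


def snap_cuts(cuts: list[float], beats: list[float]) -> list[float]:
    """Snap every rough cut to a beat and drop duplicates."""
    return sorted({snap_to_beat(c, beats) for c in cuts})


beats = [0.0, 0.5, 1.0, 1.5, 2.0]
print(snap_cuts([0.62, 0.4, 1.9], beats))
# [0.5, 2.0]
```

Two rough cuts that land on the same beat collapse into one, which is usually what an editor wants when tightening a sequence.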

Final Thoughts

The success of animated music videos with 800K+ views isn’t accidental. It’s the result of treating characters as performers, not props. AI video tools like Runway and ComfyUI dramatically reduce production friction, but virality still depends on intentional control: latent consistency, scheduler tuning, and character-driven design.

For 3D animators and music video producers, the opportunity is clear. Build characters audiences can emotionally track, sync them tightly to music, and let AI handle the heavy lifting. The future of animated music videos belongs to creators who understand that performance, not pixels, is what sells.

Frequently Asked Questions

Q: What makes character-driven animated music videos more viral than abstract visuals?

A: Characters provide emotional continuity. Viewers form a relationship with a recognizable performer, which increases repeat views, shares, and fan attachment compared to abstract or purely aesthetic visuals.

Q: Which AI tools are best for creating 3D animated music videos?

A: Runway and ComfyUI are popular for AI video generation and motion control, while additional lip-sync and audio analysis tools are often integrated for precise timing and facial animation.

Q: How do you keep a character consistent across multiple AI-generated shots?

A: By maintaining latent consistency using fixed seeds, character LoRAs or embeddings, controlled noise injection, and consistent prompt structures across all shots.

Q: Why are Euler A schedulers commonly used in animated music videos?

A: Euler A schedulers preserve sharper temporal transitions, making them well-suited for music videos where precise beat alignment and fast vocal delivery are critical.

Q: Can AI-generated characters become long-term digital performers?

A: Yes. With consistent design, latent control, and repeated appearances, AI-generated characters can function as reusable digital artists that build audience loyalty over time.