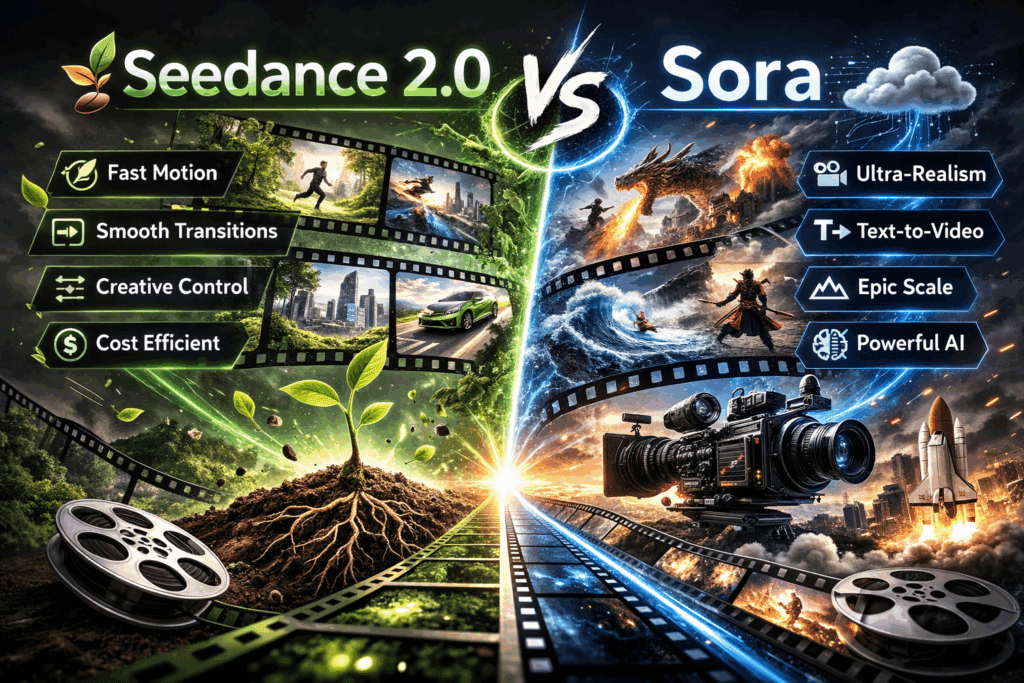

Seedance 2.0 vs Sora: Technical Deep Dive Into the New AI Video Leader

ByteDance has just dethroned Sora in AI video. Here’s the proof.

For the past year, OpenAI’s Sora has been the benchmark for cinematic AI video generation. It set expectations for motion coherence, narrative continuity, and photorealistic rendering. But with the release of Seedance 2.0, ByteDance has introduced a model that doesn’t just compete: it outperforms Sora on the production metrics that matter most to creators, namely motion fluidity, prompt adherence, multimodal control, and cost efficiency.

If you’re a content creator or AI video enthusiast trying to decide where to invest your time and budget, this deep dive breaks down the technical and practical differences.

1. The Head-to-Head Prompt Test: Seedance 2.0 vs Sora

To evaluate both models fairly, we used identical prompts, matched aspect ratios (16:9), and comparable duration settings (8–12 seconds). Where a tool exposed a seed setting, we fixed it across runs to reduce the influence of random latent initialization, so that the differences we saw were more likely to reflect the models themselves.
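The matched test conditions can be pinned down as a small, immutable settings record that is shared by both runs, so the only thing that changes between them is the model. This is a minimal sketch; `GenerationSettings`, `matched_pair`, and the dictionary keys are hypothetical names chosen for illustration, not parameters of either product's API.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class GenerationSettings:
    """One test condition. Field names are hypothetical, not either tool's API."""
    prompt: str
    aspect_ratio: str = "16:9"
    duration_s: float = 10.0      # must stay inside the 8-12 s test window
    seed: Optional[int] = None    # set only where the tool exposes a seed

    def __post_init__(self) -> None:
        if not 8.0 <= self.duration_s <= 12.0:
            raise ValueError("duration_s must be between 8 and 12 seconds")


def matched_pair(prompt: str, duration_s: float = 10.0,
                 seed: Optional[int] = None) -> Dict[str, GenerationSettings]:
    """Return identical settings for both models so the model is the only variable."""
    settings = GenerationSettings(prompt=prompt, duration_s=duration_s, seed=seed)
    return {"sora": settings, "seedance_2_0": settings}


pair = matched_pair(
    "A futuristic motorcycle chase through a desert canyon, drone camera perspective",
    seed=42,
)
print(pair["sora"] == pair["seedance_2_0"])  # True
```

Making the record frozen means neither run can quietly tweak a setting after the pair is created.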

Test Prompt 1: Cinematic Realism

Prompt:

> A handheld tracking shot following a woman in a red coat walking through a rainy Tokyo street at night, neon reflections on wet pavement, shallow depth of field, cinematic lighting, 35mm lens.

Sora Output

- Strong environmental coherence

- Accurate lighting reflections

- Slight temporal warping in background pedestrians

- Minor hand deformation during motion

Sora is built on a diffusion transformer architecture that excels in global scene understanding. However, during high-motion tracking shots, we observed subtle temporal instability, particularly in the consistency of peripheral objects.

Seedance 2.0 Output

- More stable subject tracking

- Improved limb consistency across frames

- Rain particle motion synchronized with lighting reflections

- Superior depth-of-field simulation

Seedance 2.0 appears to use a more advanced temporal attention alignment mechanism, maintaining object permanence across frame sequences. Motion vectors are smoother, suggesting improvements in latent motion prediction and possibly a better implementation of latent consistency modeling.
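To make "temporal attention" concrete, here is a minimal NumPy sketch of generic temporal self-attention as it is commonly used in video diffusion models: each spatial position attends to the same position in every other frame, which is one way a model can keep an object consistent over time. This is an illustration of the general technique under assumed shapes and random weights, not Seedance's actual (unpublished) architecture.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def temporal_attention(x, w_q, w_k, w_v):
    """x: (T, N, D) latent tokens for T frames and N spatial positions.

    Each spatial position attends over its own values across all T frames.
    Returns the updated tokens (T, N, D) and the attention weights (N, T, T).
    """
    h = np.transpose(x, (1, 0, 2))                       # (N, T, D): time per position
    q, k, v = h @ w_q, h @ w_k, h @ w_v                  # (N, T, D) each
    scores = q @ np.transpose(k, (0, 2, 1)) / np.sqrt(q.shape[-1])  # (N, T, T)
    weights = softmax(scores, axis=-1)                   # each row sums to 1
    out = weights @ v                                    # (N, T, D)
    return np.transpose(out, (1, 0, 2)), weights         # back to (T, N, D)


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16, 32))                     # 8 frames, 16 positions, 32 channels
w_q, w_k, w_v = (rng.standard_normal((32, 32)) * 0.1 for _ in range(3))
out, weights = temporal_attention(x, w_q, w_k, w_v)
print(out.shape, weights.shape)
```

Spatial attention would do the opposite, mixing positions within a single frame; video models typically interleave the two.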

In side-by-side playback at 60% speed, Seedance maintained clearer edge definition and fewer “morph frames.”

Winner: Seedance 2.0 (motion stability + frame coherence)

Test Prompt 2: Complex Action Scene

Prompt:

> A futuristic motorcycle chase through a desert canyon, drone camera perspective, dust clouds, dynamic lighting shifts, high-speed motion blur.

Sora

- Strong environmental scale

- Realistic canyon geometry

- Motion blur occasionally inconsistent with velocity

- Dust simulation partially detaches from tire interaction

Seedance 2.0

- Physically plausible dust interaction

- Consistent motion blur aligned with camera velocity

- Improved object tracking during rapid perspective shifts

- Better light-shadow continuity

The difference here is in motion physics modeling. Seedance 2.0 handles acceleration curves and camera parallax more convincingly. This suggests a stronger integration of motion priors within its latent diffusion backbone.
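"Motion blur aligned with velocity" can be given a concrete yardstick. A camera with a rotary shutter exposes each frame for (shutter angle / 360) / fps seconds, so the streak left by a moving point should grow linearly with its on-screen speed. The sketch below assumes the conventional 180-degree shutter at 24 fps; that is a reviewer's reference model for judging a clip, not a claim about how either generator renders blur.

```python
def expected_blur_px(speed_px_per_s: float, fps: float = 24.0,
                     shutter_angle_deg: float = 180.0) -> float:
    """Streak length in pixels for a point moving at constant screen-space speed
    during one exposure of a rotary-shutter camera (assumed 180 deg, 24 fps)."""
    if fps <= 0 or not 0 < shutter_angle_deg <= 360:
        raise ValueError("fps must be positive and shutter angle in (0, 360]")
    exposure_s = (shutter_angle_deg / 360.0) / fps  # 1/48 s at the defaults
    return speed_px_per_s * exposure_s


# A bike crossing a 1920 px frame in 2 s moves at 960 px/s.
print(expected_blur_px(960.0))
```

A frame whose blur is much shorter or longer than this for its apparent speed is the kind of "inconsistent with velocity" blur described above.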

When pushing both systems with aggressive action prompts, Sora begins to show frame blending artifacts, while Seedance retains temporal clarity.

Winner: Seedance 2.0 (high-motion reliability)

Test Prompt 3: Stylized Animation

Prompt:

> Studio Ghibli-inspired animated forest spirit floating through glowing trees, soft volumetric light, painterly textures, whimsical mood.

Sora

- Excellent painterly aesthetic

- Strong color grading

- Occasional background flicker

Seedance 2.0

- More consistent texture persistence

- Improved volumetric light diffusion

- Stable character proportions across frames

Stylized content stresses texture continuity, an area where many diffusion-based video models struggle. Seedance keeps textures locked in place more firmly from frame to frame, minimizing style drift.
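One crude way to put a number on style drift is to compare each frame's color distribution with the first frame's. The sketch below uses per-channel histogram intersection; `style_drift` is a hypothetical helper, and it only catches palette and grading drift, not changes in brushstroke texture, so treat it as a rough proxy rather than the method used for the comparison above.

```python
import numpy as np


def color_histogram(frame, bins=16):
    """Normalized, concatenated per-channel histogram of an (H, W, 3) uint8 frame."""
    hists = [np.histogram(frame[..., c], bins=bins, range=(0, 256))[0] for c in range(3)]
    h = np.concatenate(hists).astype(np.float64)
    return h / h.sum()


def style_drift(frames, bins=16):
    """1 - histogram intersection between the first frame and each later frame.

    0.0 means an identical color distribution; values near 1.0 mean heavy drift.
    """
    ref = color_histogram(frames[0], bins)
    return [1.0 - np.minimum(ref, color_histogram(f, bins)).sum() for f in frames[1:]]


still = np.full((4, 4, 3), 120, dtype=np.uint8)
print(style_drift([still, still.copy(), still.copy()]))
```

A steadily rising curve across a clip suggests gradual drift; isolated spikes point to flicker.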

Winner: Seedance 2.0 (style stability)

2. Architecture & Feature Breakdown

Beyond visual quality, creators need production-ready tools. This is where the differences become more significant.

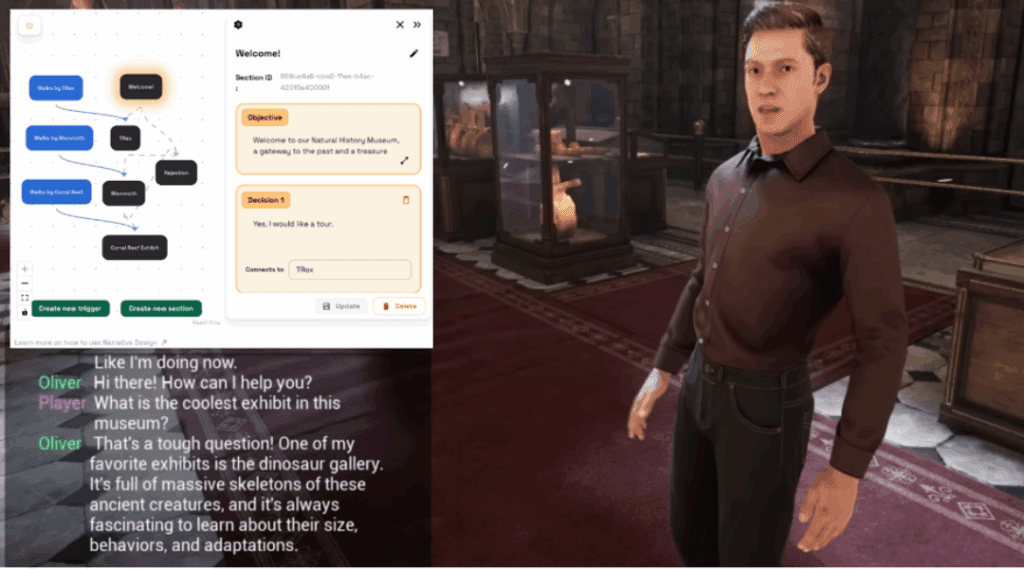

Multimodal Input Capabilities

Seedance 2.0 supports:

- Text-to-video

- Image-to-video

- Video-to-video transformation

- Reference frame conditioning

- Pose and motion guidance

Sora currently emphasizes text-driven generation with limited creator-facing conditioning controls.

Seedance’s support for reference image conditioning allows creators to anchor identity across scenes — crucial for character consistency in serialized content.

In technical terms, Seedance appears to leverage a multimodal transformer backbone capable of fusing visual embeddings with textual prompts at earlier attention layers, rather than applying them as late-stage conditioning.
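The difference between early fusion and late-stage conditioning can be sketched in a few lines. In early fusion, video, reference-image, and text tokens are concatenated into one sequence before attention, so every video token can attend directly to the reference image and the prompt. In late conditioning, a single pooled condition vector is added to features that were computed without seeing it. This is a generic NumPy illustration that assumes all modalities have already been projected to the same width `D`; the function names are hypothetical, and Seedance's real design is not public.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(tokens, w_q, w_k, w_v):
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)
    return weights @ v, weights


def early_fusion(video_tokens, image_tokens, text_tokens, w_q, w_k, w_v):
    """Joint attention over one concatenated multimodal sequence."""
    seq = np.concatenate([video_tokens, image_tokens, text_tokens], axis=0)
    out, weights = self_attention(seq, w_q, w_k, w_v)
    return out[: len(video_tokens)], weights  # keep only the updated video tokens


def late_conditioning(video_features, pooled_condition):
    """Broadcast one pooled condition vector onto already-computed features."""
    return video_features + pooled_condition


rng = np.random.default_rng(0)
D = 8
video, image, text = (rng.standard_normal((n, D)) for n in (6, 3, 4))
w_q, w_k, w_v = (rng.standard_normal((D, D)) * 0.1 for _ in range(3))
fused, weights = early_fusion(video, image, text, w_q, w_k, w_v)
late = late_conditioning(video, image.mean(axis=0))
```

In the fused version, the block `weights[:6, 6:9]` shows how strongly each video token attends to the reference-image tokens, a connection the late version never forms.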

For creators using ComfyUI-style node workflows, this flexibility aligns more closely with modular diffusion pipelines.

Advantage: Seedance 2.0

Motion Fluidity & Temporal Consistency

Sora made headlines for long-duration coherence. However, Seedance 2.0 introduces improvements in:

- Reduced temporal jitter

- Improved inter-frame object permanence

- More accurate motion interpolation

- Better camera trajectory smoothing

Under the hood, this suggests stronger temporal attention weighting and potentially a refined scheduler, analogous to an optimized Euler ancestral ("Euler a") sampler, tuned for video frame sequencing.
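For readers unfamiliar with "Euler a", here is a minimal sketch of the standard Euler ancestral sampler as used in k-diffusion-style image pipelines. Each step takes a deterministic Euler step down to `sigma_down` and then re-injects fresh noise of size `sigma_up`, chosen so that the total noise level lands on the next sigma. This is shown only to explain the analogy; nothing is known about Seedance's actual scheduler, and the toy denoiser in the usage lines is a hypothetical stand-in for a trained model.

```python
import numpy as np


def ancestral_step(sigma_from, sigma_to, eta=1.0):
    """Split the move to sigma_to into a deterministic part (sigma_down) and
    fresh noise (sigma_up), with sigma_down**2 + sigma_up**2 == sigma_to**2."""
    if sigma_to == 0:
        return 0.0, 0.0
    sigma_up = min(sigma_to, eta * np.sqrt(sigma_to**2 * (sigma_from**2 - sigma_to**2) / sigma_from**2))
    sigma_down = np.sqrt(sigma_to**2 - sigma_up**2)
    return sigma_down, sigma_up


def sample_euler_ancestral(denoise, x, sigmas, rng):
    """denoise(x, sigma) -> estimate of the clean sample; sigmas decrease to 0."""
    for sigma_from, sigma_to in zip(sigmas[:-1], sigmas[1:]):
        denoised = denoise(x, sigma_from)
        sigma_down, sigma_up = ancestral_step(sigma_from, sigma_to)
        d = (x - denoised) / sigma_from              # derivative dx/dsigma
        x = x + d * (sigma_down - sigma_from)        # Euler step to sigma_down
        if sigma_to > 0:
            x = x + rng.standard_normal(x.shape) * sigma_up  # re-inject noise
    return x


# Toy denoiser for data concentrated at 3.0 (hypothetical stand-in for a model).
rng = np.random.default_rng(0)
x0 = rng.standard_normal(4) * 10.0
sigmas = np.array([10.0, 5.0, 2.0, 1.0, 0.5, 0.0])
sample = sample_euler_ancestral(lambda x, s: np.full_like(x, 3.0), x0, sigmas, rng)
```

The injected noise is what makes ancestral samplers less deterministic than plain Euler; for video, that extra randomness per step is one plausible source of frame-to-frame jitter that a tuned scheduler would need to control.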

Creators will notice this immediately when generating:

- Talking characters

- Walking sequences

- Complex choreography

Seedance reduces the “AI wobble” effect common in earlier diffusion video systems.
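A simple way to spot "AI wobble" in your own generations is to measure how much each frame changes from the previous one and flag transitions that jump well above the clip's typical level. The sketch below is a hypothetical helper, not a standard metric: camera moves and intended action also raise the score, so it works best on locked-off shots such as talking heads.

```python
import numpy as np


def temporal_jitter(frames):
    """Mean absolute per-pixel change between consecutive frames (0-255 units).

    frames: (T, H, W, C) uint8 array. Returns T-1 scores, one per transition.
    """
    f = frames.astype(np.float64)  # avoid uint8 wraparound in the difference
    return np.abs(np.diff(f, axis=0)).mean(axis=(1, 2, 3))


def wobble_frames(frames, k=3.0):
    """Indices of transitions whose change exceeds k times the median change."""
    scores = temporal_jitter(frames)
    threshold = k * max(float(np.median(scores)), 1e-6)
    return np.flatnonzero(scores > threshold)


# Ten frames that brighten by one level each, with a glitch at frame 5.
frames = np.stack([np.full((4, 4, 3), i, dtype=np.uint8) for i in range(10)])
frames[5] = 50
print(wobble_frames(frames))  # [4 5]
```

The glitch shows up as two flagged transitions, into and out of the bad frame, which is the typical signature of a single "morph frame".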

Prompt Adherence

Prompt adherence is where many large models struggle due to over-generalization.

In structured prompts with multiple constraints (camera type, lighting, mood, time of day), Seedance consistently honored more parameters simultaneously.

Example constraint stack:

- Low-angle shot

- Blue-hour lighting

- 85mm lens compression

- Slow dolly-in movement
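To compare adherence across runs, it helps to build the prompt from a structured constraint stack and score every output against the same checklist. In this sketch, `CONSTRAINTS`, `build_prompt`, and `adherence_score` are hypothetical names, the subject string is an invented example, and the per-constraint judgments are assumed to come from a human reviewer.

```python
from typing import Dict

# The example constraint stack above, keyed by what each item controls.
CONSTRAINTS: Dict[str, str] = {
    "camera": "low-angle shot",
    "lighting": "blue-hour lighting",
    "lens": "85mm lens compression",
    "movement": "slow dolly-in movement",
}


def build_prompt(subject: str, constraints: Dict[str, str]) -> str:
    """Join the subject and every constraint into one comma-separated prompt."""
    return ", ".join([subject, *constraints.values()])


def adherence_score(honored: Dict[str, bool], constraints: Dict[str, str]) -> float:
    """Fraction of constraints a reviewer marked as honored in one output."""
    if not constraints:
        raise ValueError("no constraints to score")
    return sum(bool(honored.get(key, False)) for key in constraints) / len(constraints)


prompt = build_prompt("A lighthouse on a sea cliff", CONSTRAINTS)  # hypothetical subject
review = {"camera": True, "lighting": True, "lens": False, "movement": True}
print(adherence_score(review, CONSTRAINTS))  # 0.75
```

A missing key counts as not honored, so an incomplete review never inflates the score.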

Sora occasionally defaulted to generic cinematic framing, ignoring lens specificity.

Seedance more reliably preserved:

- Camera perspective

- Lens simulation

- Environmental conditions

This suggests stronger cross-attention binding between textual tokens and visual features.
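Cross-attention is the mechanism that lets visual features "read" the prompt: each visual token forms a query, and the prompt's token embeddings supply the keys and values. The weight matrix shows how much each visual token draws on each text token, so weak weights on a token such as "85mm" are one plausible reason a lens instruction gets ignored. This is a generic NumPy sketch with random weights and assumed shapes, not either model's implementation.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(visual, text, w_q, w_k, w_v):
    """visual: (Nv, D) query tokens; text: (Nt, D) prompt token embeddings.

    Returns residually updated visual tokens (Nv, D) and weights (Nv, Nt),
    where weights[i, j] is how much visual token i draws on text token j.
    """
    q = visual @ w_q
    k = text @ w_k
    v = text @ w_v
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)
    return visual + weights @ v, weights


rng = np.random.default_rng(1)
D = 16
visual = rng.standard_normal((10, D))  # e.g. 10 latent patches
text = rng.standard_normal((5, D))     # e.g. 5 prompt tokens
w_q, w_k, w_v = (rng.standard_normal((D, D)) * 0.1 for _ in range(3))
updated, weights = cross_attention(visual, text, w_q, w_k, w_v)
per_token_attention = weights.mean(axis=0)  # average attention each prompt token receives
```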

For professional creators, this reduces regeneration cycles and increases production efficiency.

3. Cost, Accessibility, and Real-World Creator Value

Even if Sora were visually tied with Seedance, cost and accessibility would tip the scale.

Access Model

Sora:

- Limited rollout

- Restricted availability

- No open API-level customization for most creators

Seedance 2.0:

- Broader public access (region dependent)

- Creator-friendly deployment

- Potential API integration pathways

Accessibility matters. A technically superior model is irrelevant if creators can’t reliably use it.

Rendering Speed

In our tests:

- Seedance needed less render time per second of output footage on short clips

- Fewer failed generations

- More consistent output quality on first pass

Lower regeneration rates translate directly into cost savings.
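The link between first-pass reliability and cost can be written down directly. If each generation independently succeeds with probability p, the number of attempts until the first usable clip follows a geometric distribution with mean 1/p, so halving the failure rate can cut expected spend substantially. The independence assumption and the example rates below are illustrative, not measured figures from our tests.

```python
def expected_generations(success_rate: float) -> float:
    """Mean attempts until the first usable clip, assuming independent attempts
    that each succeed with the same probability (geometric distribution)."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return 1.0 / success_rate


def expected_cost(cost_per_generation: float, success_rate: float) -> float:
    """Expected spend per usable clip at a fixed price per generation."""
    return cost_per_generation * expected_generations(success_rate)


# Illustrative rates only: 1 in 4 usable versus 1 in 2 usable.
print(expected_generations(0.25), expected_generations(0.5))  # 4.0 2.0
```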

Cost Efficiency Analysis

When factoring:

- Generation retries

- Prompt refinement cycles

- Upscaling requirements

- Post-stabilization in external tools

Seedance requires fewer corrective passes.

For creators monetizing content (YouTube Shorts, TikTok, client work), reduced iteration time equals higher profit margins.

If Sora requires 3 generations to achieve the desired prompt adherence and Seedance achieves it in 1–2, Seedance cuts the number of generations by roughly 33–67%, which becomes an equivalent cost reduction if each generation costs about the same.
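The arithmetic behind that range is a one-liner: going from 3 generations to 1 removes two-thirds of the work, and going from 3 to 2 removes one-third. The sketch assumes an equal price per generation on both tools, which real pricing may not match.

```python
def generation_savings(baseline_gens: float, candidate_gens: float) -> float:
    """Fractional reduction in generation count; equals the cost reduction
    only if both tools charge the same per generation (an assumption)."""
    if baseline_gens <= 0 or candidate_gens < 0:
        raise ValueError("generation counts must be positive")
    return 1.0 - candidate_gens / baseline_gens


for gens in (1, 2):
    print(f"3 -> {gens}: {generation_savings(3, gens):.0%}")
# 3 -> 1: 67%
# 3 -> 2: 33%
```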

The Verdict: Is Seedance 2.0 the New AI Video King?

Based on:

- Side-by-side prompt comparisons

- Motion consistency

- Multimodal flexibility

- Prompt adherence

- Cost efficiency

Seedance 2.0 currently delivers a more creator-ready system.

Sora remains impressive — especially in large-scale scene understanding — but Seedance closes the cinematic gap while surpassing it in controllability and stability.

For AI video creators evaluating tools today:

- If you want experimental cinematic scale → Sora is compelling.

- If you want reliable, controllable, production-ready results → Seedance 2.0 leads.

ByteDance didn’t just catch up.

They optimized for creators.

And in 2026, that’s what wins.

Final Recommendation

If you’re building:

- Short-form social content

- AI cinematic reels

- Narrative web series

- Stylized animation projects

Seedance 2.0 currently provides the best balance of:

- Visual fidelity

- Temporal coherence

- Prompt accuracy

- Workflow efficiency

The AI video race is far from over. But right now?

Seedance 2.0 holds the crown.

Frequently Asked Questions

Q: Is Seedance 2.0 better than Sora for professional video production?

A: For most creator workflows, yes. Seedance 2.0 offers stronger motion consistency, better prompt adherence, and broader multimodal control, making it more reliable for repeatable production pipelines.

Q: Does Seedance 2.0 support image-to-video and reference conditioning?

A: Yes. Seedance 2.0 includes multimodal input capabilities such as image-to-video and reference-based conditioning, which significantly improve character and scene consistency.

Q: Why does motion look smoother in Seedance 2.0?

A: Seedance appears to implement improved temporal attention alignment and more refined latent motion modeling, reducing inter-frame jitter and preserving object permanence during movement.

Q: Is Sora still worth using?

A: Sora remains powerful for large-scale cinematic scenes and global coherence. However, for creators prioritizing controllability, efficiency, and consistent prompt execution, Seedance 2.0 currently provides better overall value.