How Chinese Studios Produce 300+ Episodes of 3D Animation: Scalable Pipelines, Asset Reuse, and AI-Assisted Visual Engines

307 episodes of 3D animation: how Chinese studios do it.

Producing a single season of high-quality 3D animation is already a logistical and creative challenge. Producing more than 300 episodes, often with weekly release schedules, consistent visual fidelity, and expanding storylines, requires an entirely different production philosophy. Chinese animation studios specializing in long-running 3D series have addressed this problem by combining modular asset design, rigid pipeline discipline, and, increasingly, AI-assisted visual engines.

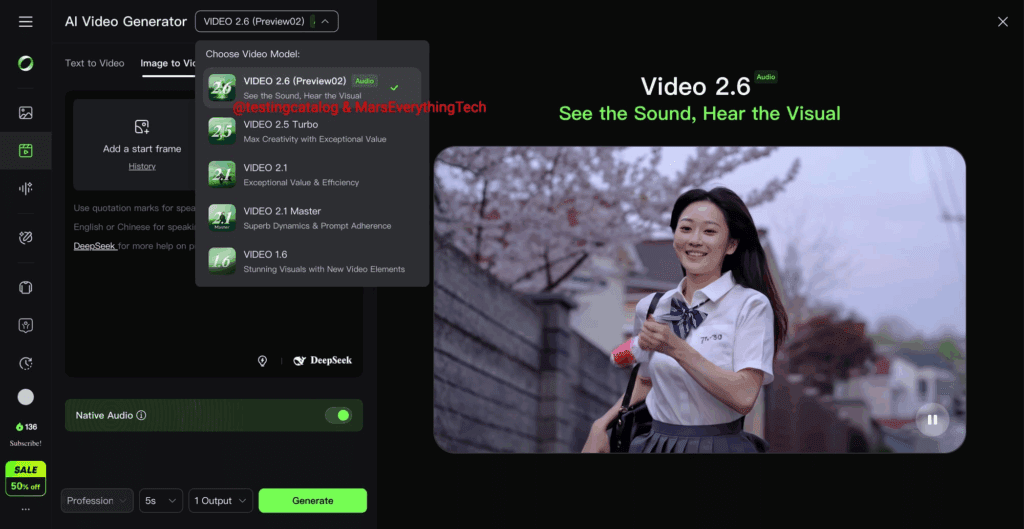

This article breaks down how these studios approach serialized 3D animation production at extreme scale, with a focus on asset reuse, animation quality control, and multilingual output. We will also connect traditional 3D pipelines with modern AI video systems such as Runway, Kling, Sora, and ComfyUI-style node-based workflows to explain how these techniques can be adapted globally.

Episodic 3D Animation at Extreme Scale: The 300-Episode Problem

The core challenge of serialized 3D animation is not visual creation; it is visual consistency over time. When a show runs for 200–300+ episodes, the enemy becomes entropy: small changes in lighting, rig updates, shader tweaks, or animation style gradually erode continuity.

Chinese studios address this by treating a long-running series less like a sequence of films and more like a live service product. Key principles include:

– Locked visual bibles that are enforced technically, not just artistically

– Versioned assets with backward compatibility

– Episodic pipelines that prioritize predictability over experimentation

In AI terminology, this is similar to keeping outputs consistent from one generation to the next, which this article refers to as latent consistency. Whether you are rendering in a traditional engine or generating shots via diffusion-based video tools, the goal is the same: identical inputs should always produce visually equivalent outputs.
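One way to picture a visual bible that is "enforced technically" is a fingerprint check on look settings. The sketch below is illustrative only: the setting names, values, and the `check_shot` helper are hypothetical and not taken from any studio's pipeline.

```python
import hashlib
import json


def look_fingerprint(settings: dict) -> str:
    """Return a stable hash of a look-settings dictionary."""
    # sort_keys makes the serialization independent of key order.
    canonical = json.dumps(settings, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical values approved for the series (assumed for illustration).
LOCKED_LOOK = {"key_light_intensity": 1.2, "tone_map": "filmic", "skin_shader": "v3"}
LOCKED_FINGERPRINT = look_fingerprint(LOCKED_LOOK)


def check_shot(settings: dict) -> bool:
    """True when a shot's settings match the locked look exactly."""
    return look_fingerprint(settings) == LOCKED_FINGERPRINT


same_values_new_order = {"skin_shader": "v3", "tone_map": "filmic", "key_light_intensity": 1.2}
tweaked = dict(LOCKED_LOOK, key_light_intensity=1.25)

print(check_shot(same_values_new_order))  # True
print(check_shot(tweaked))                # False
```

A check like this could run automatically before a shot is submitted for rendering, so a small lighting tweak is caught as a deviation rather than discovered episodes later.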

Asset Reuse, Modular Rigging, and Latent Consistency Across Long-Running Series

Modular Character Systems

Chinese 3D series rarely build characters as single, monolithic rigs. Instead, they use modular character systems:

– Shared base skeletons across entire character classes

– Swappable heads, costumes, accessories, and shaders

– Parameterized facial rigs rather than unique blendshape sets

This mirrors how AI video tools reuse latent structures. In ComfyUI, for example, keeping the same base model, LoRA stack, and seed across shots (what this article calls seed parity) helps keep a character's identity stable. Chinese studios do the same in Maya or proprietary engines by locking rig hierarchies and deformation orders.
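The modular idea can be sketched as a small data model in which every character is a combination of shared and swappable parts. The class and part names below are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class CharacterBuild:
    """A character described as a combination of reusable modules."""
    skeleton: str    # shared base skeleton for a whole character class
    head: str        # swappable head module
    costume: str     # swappable costume module
    shader_set: str  # swappable shader preset


# Hypothetical base build shared by one class of characters.
BASE_DISCIPLE = CharacterBuild(
    skeleton="humanoid_base_v1",
    head="head_generic",
    costume="robe_grey",
    shader_set="cloth_std",
)

# A new character swaps modules but keeps the shared skeleton, so
# animation authored for the base skeleton still applies to it.
elder = replace(BASE_DISCIPLE, head="head_elder", costume="robe_white")

print(elder.skeleton == BASE_DISCIPLE.skeleton)  # True
```

Freezing the dataclass is a deliberate choice in this sketch: a build cannot be edited in place, only copied with changes, which loosely matches the idea of locking the rig hierarchy.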

Rig Version Control and Backward Compatibility

Once a rig is approved for episode 1, it is rarely replaced. Instead:

– New deformation layers are added non-destructively

– Old animation data must always retarget cleanly

– Rig updates are validated against previous episodes

This approach is conceptually similar to maintaining seed parity in AI video generation. If a studio breaks backward compatibility, previously generated animation data becomes unusable, dramatically increasing production cost.
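A minimal sketch of the validation step, assuming a rig can be summarized by the set of control names that animation data refers to. The control names and both helper functions are hypothetical.

```python
from typing import Set


def missing_controls(old_rig: Set[str], new_rig: Set[str]) -> Set[str]:
    """Controls that old animation data uses but the new rig no longer has."""
    return old_rig - new_rig


def is_backward_compatible(old_rig: Set[str], new_rig: Set[str]) -> bool:
    """A new rig is compatible if it keeps every control of the old one."""
    return not missing_controls(old_rig, new_rig)


# Hypothetical control sets for illustration.
rig_v1 = {"hip", "spine", "head", "jaw"}
rig_v2 = rig_v1 | {"cloth_layer"}             # adds a layer, removes nothing
rig_bad = (rig_v1 - {"jaw"}) | {"jaw_new"}    # renames a control

print(is_backward_compatible(rig_v1, rig_v2))   # True
print(is_backward_compatible(rig_v1, rig_bad))  # False
print(missing_controls(rig_v1, rig_bad))        # {'jaw'}
```

A real check would also have to compare joint orientations, deformation order, and attribute ranges; this only captures the "nothing old may disappear" rule.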

Environment and Prop Libraries

Long-running Chinese 3D series rely heavily on environment reuse:

– Core locations (cities, sect halls, landscapes) are built once

– Lighting presets are episode-locked

– Time-of-day changes are procedural, not manual

In AI engines like Runway or Kling, this is loosely analogous to anchoring the environment: keeping the same background reference while modifying only the foreground motion prompts. The result is faster iteration without visual drift.
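Procedural time-of-day can be as simple as interpolating between a few approved lighting presets instead of relighting by hand. The sketch below assumes linear interpolation; the preset hours and values are made up for illustration.

```python
from bisect import bisect_right
from typing import List, Tuple

# Hypothetical presets: hour -> (sun_intensity, color_temperature_kelvin).
PRESETS: List[Tuple[float, Tuple[float, float]]] = [
    (6.0, (0.3, 3500.0)),
    (12.0, (1.0, 5600.0)),
    (18.0, (0.4, 3200.0)),
]


def lighting_at(hour: float) -> Tuple[float, ...]:
    """Linearly interpolate lighting values between the two nearest presets."""
    hours = [h for h, _ in PRESETS]
    # Clamp to the first or last preset outside the keyed range.
    if hour <= hours[0]:
        return PRESETS[0][1]
    if hour >= hours[-1]:
        return PRESETS[-1][1]
    i = bisect_right(hours, hour)
    (h0, v0), (h1, v1) = PRESETS[i - 1], PRESETS[i]
    t = (hour - h0) / (h1 - h0)
    return tuple(a + (b - a) * t for a, b in zip(v0, v1))


print(lighting_at(9.0))  # halfway between the 06:00 and 12:00 presets
```

Because every in-between value is derived from the same locked presets, two episodes set at the same hour in the same location receive the same lighting.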

Maintaining Animation Quality at Scale with AI-Assisted Visual Engines

The Quality Plateau Strategy

Instead of trying to raise visual quality every season, Chinese studios define a “quality plateau” early in production. This is the highest quality they can sustain for hundreds of episodes without schedule collapse.

Key characteristics:

– Fixed render budgets per episode

– Standardized camera language

– Reusable animation cycles (walks, combat loops, crowd motion)

AI video tools increasingly adopt similar constraints. For example, Sora-style long-form generation benefits from limiting camera volatility and enforcing consistent shot grammar to avoid temporal artifacts.

AI-Assisted Inbetweening and Motion Stabilization

Many studios now use AI-assisted tools for:

– Motion smoothing

– Physics correction

– Cloth and hair stabilization

In AI terms, this is temporal coherence enforcement. Video generators such as Kling and Runway pursue the same goal, keeping consecutive frames consistent to reduce flicker and deformation drift, although their internal methods are not public.

When integrated into traditional pipelines, AI-assisted solvers act like a post-simulation consistency pass rather than a creative generator.
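As a stand-in for such a consistency pass, the sketch below applies a centered moving average to one animation channel. This is a classical filter rather than an AI solver, and the function name and sample values are hypothetical; it only illustrates where a stabilization step sits in the flow.

```python
from typing import List


def smooth_channel(values: List[float], window: int = 3) -> List[float]:
    """Centered moving average; samples near the ends use a smaller window."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    out = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out


# A jittery per-frame value, e.g. a cloth vertex height after simulation.
jittery = [0.0, 1.0, 0.0, 1.0, 0.0]
smoothed = smooth_channel(jittery)

# The smoothed channel varies less from frame to frame than the input.
print(max(smoothed) - min(smoothed) < max(jittery) - min(jittery))  # True
```

The pass takes finished motion as input and returns motion of the same length, which is what makes it a stabilizer rather than a generator.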

Production Scheduling, Simulation Reuse, and Euler-Based Timing Control

Animation Scheduling at Industrial Scale

Producing 300+ episodes requires parallelization:

– Multiple episode units working simultaneously

– Shared animation libraries across teams

– Strict delivery checkpoints

Chinese studios often break episodes into micro-deliverables that resemble AI batch jobs. Each task has known inputs and deterministic outputs.
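One hedged way to model a micro-deliverable is as a task whose identifier is derived entirely from its inputs, so the same inputs always map to the same job. The field names and the ID scheme below are assumptions made for illustration.

```python
import hashlib
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Task:
    """One micro-deliverable with fully declared inputs."""
    episode: int
    shot: str
    stage: str                       # e.g. "layout", "animation", "lighting"
    input_versions: Tuple[str, ...]  # versions of the assets this task reads

    def task_id(self) -> str:
        # The ID depends only on declared inputs, so re-submitting an
        # unchanged task yields the same ID and can reuse cached output.
        key = "|".join([str(self.episode), self.shot, self.stage, *self.input_versions])
        return hashlib.sha1(key.encode("utf-8")).hexdigest()[:12]


a = Task(12, "sh010", "lighting", ("rig_v3", "env_hall_v2"))
b = Task(12, "sh010", "lighting", ("rig_v3", "env_hall_v2"))
c = Task(12, "sh010", "lighting", ("rig_v4", "env_hall_v2"))

print(a.task_id() == b.task_id())  # True: same inputs, same job
print(a.task_id() == c.task_id())  # False: a rig update creates a new job
```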

Simulation Reuse and Deterministic Playback

Simulations (cloth, hair, crowds, FX) are rarely recomputed from scratch. Instead:

– Cached simulations are reused across episodes

– Variations are introduced via parameter offsets

– Euler-based schedulers control timing offsets

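In diffusion-based AI video systems, an Euler sampler advances the latent through a fixed sequence of denoising steps (rather than across frames), so a fixed seed and step count give repeatable results. Similarly, animation timing curves in long-running series are carefully controlled to prevent cumulative drift.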

By treating time as a controlled variable rather than an emergent one, studios maintain stability over hundreds of episodes.
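The source does not say exactly what "Euler-based" means in a studio context. The sketch below assumes the physics sense: explicit (forward) Euler integration with a fixed timestep, applied to a damped spring as a toy stand-in for a cloth or hair point. All parameter values are hypothetical.

```python
from typing import List


def simulate_spring(x0: float, v0: float, stiffness: float,
                    damping: float, dt: float, steps: int) -> List[float]:
    """Explicit Euler integration of a damped spring with a fixed timestep."""
    x, v = x0, v0
    positions = [x]
    for _ in range(steps):
        a = -stiffness * x - damping * v  # acceleration from the current state
        x = x + v * dt                    # position uses the old velocity
        v = v + a * dt                    # velocity uses the old acceleration
        positions.append(x)
    return positions


DT = 1.0 / 24.0  # one step per frame at an assumed 24 fps

base = simulate_spring(1.0, 0.0, stiffness=10.0, damping=0.5, dt=DT, steps=48)
replay = simulate_spring(1.0, 0.0, stiffness=10.0, damping=0.5, dt=DT, steps=48)
variant = simulate_spring(1.0, 0.0, stiffness=10.0, damping=0.8, dt=DT, steps=48)

print(base == replay)   # True: fixed inputs and timestep give identical playback
print(base == variant)  # False: a parameter offset yields a controlled variation
```

The fixed timestep is the point of the example: because time advances in identical increments on every run, a cached result and a fresh recomputation agree, and variation comes only from deliberate parameter offsets.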

Multilingual Subtitle and Localization Pipelines for Global Distribution

Subtitles as a First-Class Asset

Chinese studios design subtitles early, not as an afterthought:

– Dialogue timing is locked before final animation

– Subtitle layers are version-controlled

– Text-safe zones are enforced in the layout

This is critical for global distribution, especially for platforms that require multiple subtitle languages.
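A text-safe zone can be enforced with a simple bounds check on the subtitle's bounding box. The 5% margin and the pixel values below are assumptions for illustration, not a platform requirement.

```python
from typing import Tuple


def in_text_safe_zone(box: Tuple[float, float, float, float],
                      frame_w: int, frame_h: int,
                      margin: float = 0.05) -> bool:
    """Check that box = (x, y, width, height), origin top-left, in pixels,
    lies fully inside the frame shrunk by `margin` on every side."""
    x, y, w, h = box
    left, top = frame_w * margin, frame_h * margin
    right, bottom = frame_w * (1 - margin), frame_h * (1 - margin)
    return x >= left and y >= top and x + w <= right and y + h <= bottom


# Hypothetical subtitle boxes on a 1920x1080 frame.
print(in_text_safe_zone((200, 900, 1520, 100), 1920, 1080))  # True
print(in_text_safe_zone((200, 980, 1520, 100), 1920, 1080))  # False: touches the bottom edge
```

Longer translations tend to produce wider or taller boxes, so running this check per language is one way to catch layout problems before delivery.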

AI-Assisted Localization

Modern pipelines increasingly use AI for:

– Initial subtitle translation

– Timing alignment across languages

– Lip-flap approximation for dubbed versions

When combined with AI video tools, subtitle data can even influence generation. For example, text timing can be used as a control signal to enforce scene pacing, similar to how prompt weights guide AI video outputs.
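When dialogue timing is locked, a translated line has to fit the same time window as the original. A minimal sketch of that check, assuming a reading-speed limit expressed in characters per second; the 17 cps threshold, the `Cue` structure, and the sample lines are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Cue:
    start: float  # seconds
    end: float    # seconds, assumed to be greater than start
    text: str


def chars_per_second(cue: Cue) -> float:
    """Reading speed required to finish the cue before it disappears."""
    return len(cue.text) / (cue.end - cue.start)


def too_fast(cues: List[Cue], max_cps: float = 17.0) -> List[Cue]:
    """Return the cues whose text is too long for their locked timing."""
    return [c for c in cues if chars_per_second(c) > max_cps]


translated = [
    Cue(0.0, 2.0, "The sect hall is under attack."),
    Cue(2.0, 3.0, "Gather every disciple at the northern gate now."),
]

for cue in too_fast(translated):
    print(f"{cue.start:.1f}s: shorten or re-time -> {cue.text}")
```

Flagged lines would go back to a translator for shortening rather than having their timing changed, since the timing is the locked input.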

What Global Studios Can Learn from Chinese 3D Animation Pipelines

The success of Chinese studios in producing 300+ episode 3D series is not about cutting corners; it is about eliminating unnecessary variance.

Key takeaways:

– Lock visual decisions early and enforce them technically

– Design assets for reuse, not uniqueness

– Treat animation like a system, not a sequence of art pieces

– Use AI as a stabilizer, not just a generator

As AI video tools such as Runway, Kling, and Sora, along with node-based workflows like ComfyUI, continue to mature, the line between traditional 3D pipelines and generative systems will blur. Studios that adopt the long-running, consistency-first mindset pioneered by Chinese 3D animation producers will be best positioned to create serialized content at a global scale.

The real lesson of 307 episodes is not speed; it is control.

Frequently Asked Questions

Q: How do Chinese studios keep characters consistent across hundreds of episodes?

A: They use modular rigs, shared skeletons, strict version control, and backward-compatible rig updates, similar to maintaining latent consistency and seed parity in AI video systems.

Q: Do Chinese studios use AI video tools like Runway or Sora directly?

A: Some use proprietary AI systems, but the underlying principles (temporal coherence, deterministic outputs, and reuse of latent structures) are conceptually aligned with tools like Runway, Kling, and Sora.

Q: Why is asset reuse so critical for long-running 3D animation?

A: Asset reuse reduces production time, prevents visual drift, and allows teams to scale output without increasing complexity, which is essential for 200–300+ episode series.

Q: How are subtitles handled for global releases?

A: Subtitles are treated as first-class assets with early timing lock, AI-assisted translation, and version control to support multilingual distribution.

Q: What can AI video creators learn from Chinese 3D animation pipelines?

A: AI creators can adopt consistency-first workflows, deterministic scheduling, and controlled variation to produce longer, more stable episodic content.