GEMINI AI Embedding Model 3.0 + VEO 3.1: Google Just Shocked Everyone

A leaked internal Google document has everyone talking. It suggests that Gemini AI Embedding Model 3.0 + VEO 3.1 (often shortened to “Gemini 3.0 + VEO 3.1”) might launch very soon, which has sparked plenty of discussion about what it means for GPT-5, for our daily workflows, and for the overall AI generator race. So, let’s cut through the noise and look at what we actually know, what is only rumored, and whether you should be paying attention right now.

Key Takeaways

- A leaked Google document suggests Gemini 3.0 could launch on October 22, 2025.

- The document’s format matches Google’s internal planning style, but the content isn’t confirmed.

- Code references and Google’s recent product releases point to ongoing development in this area.

- Potential features include advanced agent capabilities, improved reasoning, better graphics handling, and enhanced multimodal functions.

- Gemini 3.0 is expected to compete directly with GPT-5, potentially offering advantages in specific areas such as enterprise integration and multimodal tasks.

- Gemini 3.0 is likely an ecosystem of models (Pro, Flash, Nano, VEO, etc.) rather than a single release.

- While the launch date is plausible, it isn’t official. Users should wait for confirmation before making major workflow changes.

The Gemini 3.0 Leak: What’s the Story?

It all started when a blurry photo of what looked like an internal Google planning table appeared online. This table showed a timeline with product milestones, and one entry mentioned “Gemini 3.0 launch moment” with a date: October 22nd, 2025. Naturally, tech news outlets jumped on it. Some called the document questionable, while others noted it looked somewhat legitimate but couldn’t be verified. Even experts pointed out that while the layout matched Google’s internal documents, the information within might not be accurate. Despite those caveats, many ran with the story, adding disclaimers.

AI updates arrive constantly these days, so tools that help you keep up without getting overwhelmed can be genuinely useful. For example, when official details drop, you can use VidAU AI to quickly repurpose this article into short explainer videos for Shorts, Reels, or LinkedIn, so your audience gets timely updates without a full rewrite.

What We Actually Know

So, why is this leak getting so much attention if it’s not confirmed? Because even if the exact date is off, the features and model names mentioned in the leak align with actual code commits, public beta information, and Google’s recent actions. In short, there’s definitely a signal in the noise.

What Is Known:

- Gemini 2.5 Pro Exists: This is the current baseline, launched in March 2025. Google has been releasing updates rapidly, including 2.5 Flash, 2.5 Compute, Nano, and enterprise tools in recent months. A 3.0 release wouldn’t be out of the blue.

- Code References Are Real: Developers have found mentions of “Gemini Beta 3.0 Pro” and “Gemini Beta 3.0 Flash” in Google’s API documentation and beta builds. This indicates Google is testing something internally under the “3.0” label.

- Thinking Capability is a Focus: The leaked document mentions enhanced reasoning, and we know Google is working on advanced reasoning models. With competitors like OpenAI and Anthropic pushing their own reasoning capabilities, a focus on this for Gemini makes strategic sense.

- Gemini Enterprise Launched: This product, released in October, is Google’s move into corporate AI agents that can handle tasks like booking meetings and drafting emails within a company’s systems. A flagship model like Gemini 3.0 would be a natural fit to support this.

- Competitive Pressure: OpenAI released its agent features in July and GPT-5 in August, setting a higher bar. Google needs to respond, and a late October launch would be a smart move, coming after the GPT-5 hype and before companies finalize their budgets for the next year.

Rumored Features: What Gemini 3.0 Could Include

Now, let’s look at what people think Gemini 3.0 might offer. Take this with a grain of salt: it’s based on educated guesses, benchmarks, and hints from Google’s own roadmap.

Agent Capabilities

This is a big one. Gemini 3.0 is expected to be a true agentic model, meaning it can take actions like booking flights or updating spreadsheets, not just answer questions. Think of it as a supercharged version of current AI assistants, integrated directly into Google Workspace.

Improved Reasoning

The leak mentions an “enhanced reasoning mode.” If this is true, it could mean better performance on complex tasks like coding and research, with fewer errors.

Better Graphics Handling

One specific claim is that Gemini 3.0 will handle SVG files better than its predecessor. This could improve workflows for designers and developers working with diagrams and UI elements.

Multimodal Enhancements

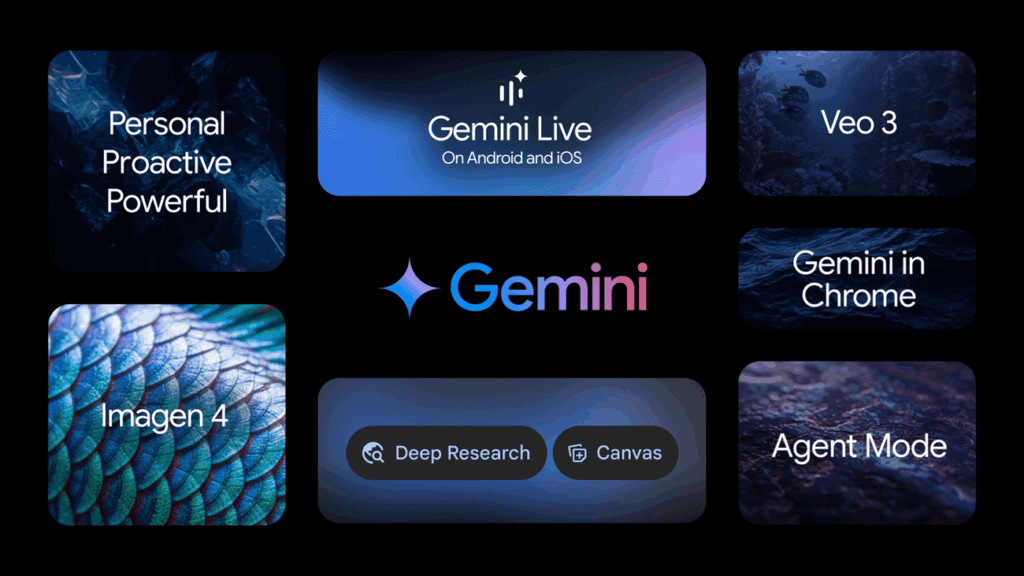

Google has been developing separate models for video (VEO), images (Nano Banana), and on-device processing (Nano). Gemini 3.0 is expected to unify these, allowing for real-time video analysis, better image generation, and smoother transitions between cloud and local processing.

Expanded Context Windows

While Gemini 2.5 already supports large context windows, Gemini 3.0 might push this even further, which is useful for analyzing long documents or complex datasets.

Speed

Flash variants are designed for speed. If Gemini Beta 3.0 Flash is real, expect very quick responses, which users increasingly treat as the norm.

Gemini 3.0 vs. GPT-5: How They Stack Up

GPT-5 set a new standard when it launched. So, how might Gemini 3.0 compete?

Reasoning

Gemini 3.0’s rumored enhanced reasoning mode could match or even beat GPT-5, especially in technical areas. Even if GPT-5 keeps its lead in pure logic, a close second would still be a win for Google.

Speed

GPT-5 has a fast mode, but Gemini 3.0 Flash variants are expected to be even faster. This could be a key differentiator, particularly for enterprise applications where speed is critical.

Multimodal

Google has a strong advantage here due to its ownership of platforms like YouTube and Google Photos, and its development of advanced video and image models. If Gemini 3.0 effectively integrates these, it could surpass GPT-5 in multimodal capabilities.

Context Windows

Both models are pushing the limits. Gemini’s theoretical 2 million token capacity is impressive, but GPT-5’s 128,000 tokens might be more practical for many uses. This is likely a tie for now.

Agents

OpenAI’s agent features are functional but can be clunky. Gemini Enterprise promises native integration within Google Workspace. If this works well, it could be a significant advantage for Google’s existing user base, as users wouldn’t need to switch platforms.

The Google Models Behind Gemini 3.0

Gemini isn’t just one model; it’s an ecosystem, and understanding its parts helps predict what 3.0 will do:

- Gemini 2.5 Pro: The core model, trained on a vast amount of data.

- Flash Variants: Optimized for low latency and speed, suitable for mobile and edge devices.

- Nano: The on-device model for tasks like voice transcription and quick replies on phones and Chromebooks.

- VEO: Google’s video generation model, expected to be integrated for creating video content.

- Nano Banana: Handles image generation, competing with tools like Midjourney.

Gemini 3.0 is likely to unify these, allowing users to request text, images, or video from a single interface.

Should You Care Right Now?

If you’re feeling overwhelmed by the constant stream of AI news, you’re not alone. It’s easy to get lost trying to keep up with every leak and announcement.

- For Enterprise Users: Keep an eye on Gemini Enterprise. If Gemini 3.0 launches and lives up to expectations, the workspace integration could save significant time.

- For Developers: Watch the API documentation. If Gemini Beta 3.0 Pro becomes available, experimenting early could give you an edge.

- For Content Creators/Marketers: The multimodal features are where the real value might lie. If VEO and Nano Banana are integrated well, you could potentially replace multiple tools with a single AI assistant.

- For General Users: GPT-5 and Claude are already excellent. Gemini 2.5 is also very capable. A 3.0 upgrade might offer improvements, but it’s unlikely to be a life-changing difference overnight. Wait for the official release and try it out when it’s available.

While the October 22nd date is plausible, Google could announce on schedule or stay silent. For now, it’s best not to drastically change your workflow: wait for official confirmation and test it yourself.

Comparison Table (At a Glance)

| Area | Gemini 3.0 + VEO 3.1 (expected) | GPT-5 (current) |
| --- | --- | --- |
| Agent Actions | Native in Workspace (rumored) | Mature but tool-dependent |
| Multimodal Video | VEO 3.1 tie-in | Strong via ecosystem |
| Context Handling | Very large windows (rumored) | Large, practical contexts |

Conclusion

Ultimately, Gemini AI Embedding Model 3.0 + VEO 3.1 looks plausible and strategically timed, but it’s still unconfirmed. Treat the leak as a signal to prepare, not to pivot: keep your current stack stable, monitor official announcements, and line up quick wins so you can move the moment details land. Meanwhile, use VidAU AI to repurpose updates from this article into short explainer clips for fast, consistent messaging across channels.

Frequently Asked Questions

1. Is Gemini AI Embedding Model 3.0 + VEO 3.1 officially released?

Not yet. Signals look credible, but there’s no formal announcement. Treat it as plausible, not confirmed.

2. What’s the difference between Gemini 3.0 and VEO 3.1?

Gemini 3.0 refers to the core AI family (reasoning, agents, embeddings). VEO 3.1 focuses on video generation/understanding and likely plugs into Gemini for end-to-end multimodal.

3. How does Gemini 3.0 compare to GPT-5 right now?

Expected: stronger Workspace-native agent actions and tight video workflows via VEO; GPT-5 remains excellent for broad reasoning and tooling. Benchmark both in your stack when official.

4. What exactly is the “Embedding Model” part?

Embeddings convert text (and potentially other modalities) into vectors for search, RAG, classification, and personalization. A 3.0 upgrade likely boosts retrieval quality, clustering, and long-context grounding.
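To make the embedding idea concrete, here is a minimal Python sketch of embedding-based retrieval, the ranking step behind search and RAG. It assumes you already have vectors: the three-dimensional numbers below are hand-made placeholders, not output from any Gemini or VEO model, and the document names and the `top_k` helper are hypothetical. It ranks documents by cosine similarity to a query vector, which is one common way to compare embeddings.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: list[float], docs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the IDs of the k documents whose vectors are most similar to the query."""
    ranked = sorted(docs, key=lambda d: cosine_similarity(query, docs[d]), reverse=True)
    return ranked[:k]


# Hypothetical 3-dimensional "embeddings" (illustrative only);
# real embedding models output hundreds or thousands of dimensions.
documents = {
    "video_editing_guide": [0.9, 0.1, 0.0],
    "quarterly_budget": [0.0, 0.2, 0.9],
    "veo_prompt_tips": [0.7, 0.4, 0.2],
}
# Stands in for the embedding of a query like "how do I make short videos?"
query_vector = [0.85, 0.2, 0.05]

print(top_k(query_vector, documents))  # ['video_editing_guide', 'veo_prompt_tips']
```

In a real pipeline, the vectors would come from an embedding model and live in a vector index; a stronger embedding model mainly improves how well these similarity scores track actual meaning, which is where better retrieval and grounding would show up.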

5. Will Gemini 3.0 be a single model or a family?

A family: Pro/Flash/Compute for different latency/throughput needs, Nano on-device, plus media models like VEO (video) integrated for multimodal tasks.

6. What agent capabilities should I expect?

Action-taking in Google Workspace (e.g., draft/send emails, update Sheets, schedule Calendar, summarize Docs) with tool use and policies. Confirm details at launch.